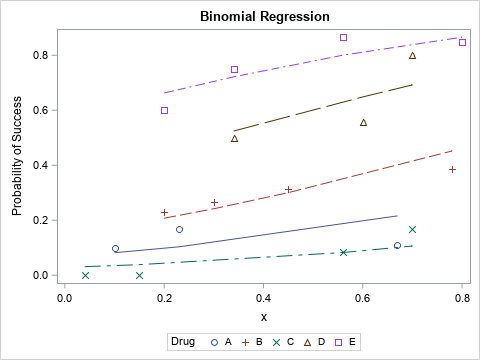

In a binomial regression model, the response variable is the proportion of successes for a given number of trials. In SAS regression procedures, you specify a binomial model by using the EVENTS/TRIALS syntax on the MODEL statement. Many analysts use the LOGISTIC or GENMOD procedures to fit binomial models. Visualizing