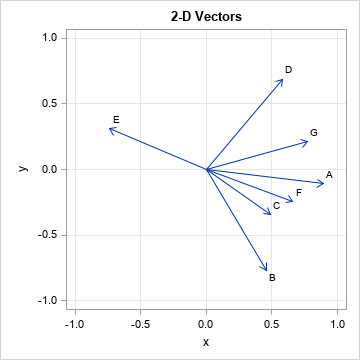

Order matters. The order of variables in tables and rows of a correlation matrix can make a big difference in how easy it is to observed correlations between variables or groups of variables. There are many ways to order the variables, but this article shows how to display the variables