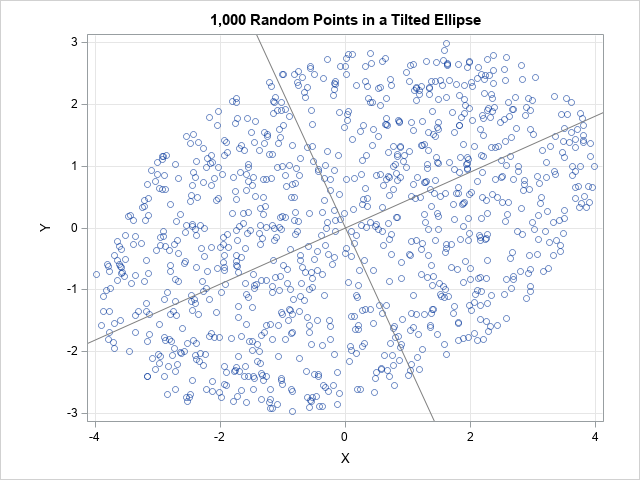

I have previously written about how to efficiently generate points uniformly at random inside a sphere (often called a ball by mathematicians). The method uses a mathematical fact from multivariate statistics: If X is drawn from the uncorrelated multivariate normal distribution in dimensiond, then S = r*X / ||X|| has