The SAS Dummy

A SAS® blog for the rest of us

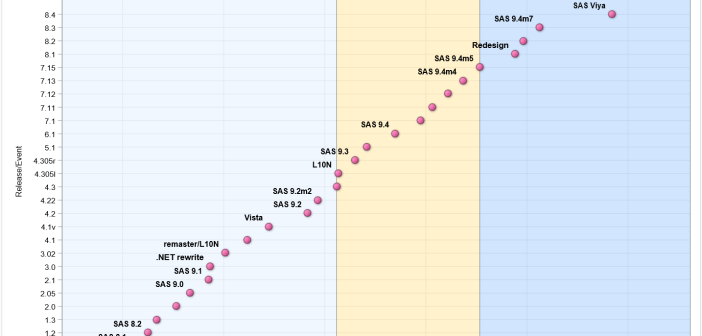

My colleague Rick Wicklin maintains a nifty chart that shows the timeline of SAS releases since Version 8. A few of you asked if I could post a similar chart for SAS Enterprise Guide. Here it is. Like Rick, I used new features in SAS 9.4 to produce this chart.

SAS announces continued support and releases for SAS 9 and a new role for SAS Enterprise Guide with SAS Viya.

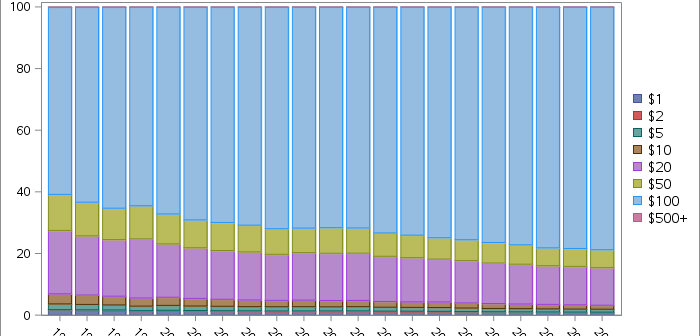

This phenomenon has been in the news recently, so I've updated this article that I originally published in 2017. The paper currency in circulation in the US is mostly $100 bills. And not just by a little bit -- these account for 34% of the notes by denomination and nearly

How to calculate a leap year in SAS - the easy way!

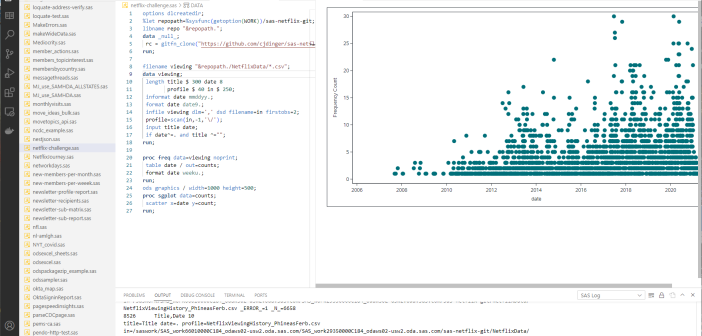

The SAS extension for VS Code supports SAS syntax and programming, and can connect to almost all SAS environments.

Use the SAS DATA step to split a large binary file into smaller pieces, which can help with file upload operations.