About 5 years ago I first shared techniques for accessing and publishing Microsoft 365 content (in OneDrive and SharePoint) using SAS and Microsoft APIs. Many people have used those tips successfully, but a few have eventually run into a limitation with large files -- especially when they need to upload a large file (many gigabytes) from SAS into SharePoint. It turns out you cannot send a huge file across the ether with just one PROC HTTP step. Instead, you must create an "upload session" and send the file in "digestible pieces" that the API can handle. It's a simple concept, but to implement it in SAS we first need some scaffolding: we need a way to break a large file into smaller chunks for the upload operation -- like disassembling a bookshelf before you transport it in your Toyota Corolla.

In this article I share a SAS coding technique to split any file into several "chunks". The Box.com API and Microsoft Graph API are two examples of services that require/support this piecemeal file upload for large files. If you have another use case for "making little ones out of big ones", please tell me in the comments!

Note: If your ultimate goal is to upload a large file from SAS to Microsoft Teams or SharePoint or OneDrive, I've already built the technique into this GitHub repository: https://github.com/sascommunities/sas-microsoft-graph-api. There is an %uploadFile macro that now supports large files, and it works by splitting the source file first.

How to use the %splitFile macro

The %splitFile macro is a simple routine that lets you specify a single file to split, where you want the pieces to be stored, and the maximum size of each piece. The code also produces an output data set that includes one record for each chunk, with the byte range of the source content that the chunk contains. You can use this information directly in the Box.com and Microsoft Graph APIs.
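To make the byte-range idea concrete, here is a minimal sketch that computes the ranges for a given file size and chunk size and formats them the way the Microsoft Graph upload session expects in its Content-Range header. This is not the macro's own code: the data set name, the column names (chunkno, byte_start, byte_end, content_range) and the file size are assumptions made up for illustration.

```sas
/* Sketch only: names and sizes below are hypothetical */
data work.ranges;
  length content_range $ 64;
  filesize = 150000;   /* assumed source file size, in bytes */
  maxsize  = 61440;    /* 1024*60, the chunk size used in the sample call */
  do chunkno = 1 to ceil(filesize / maxsize);
    /* byte offsets are zero-based and inclusive */
    byte_start = (chunkno - 1) * maxsize;
    byte_end   = min(chunkno * maxsize, filesize) - 1;
    /* e.g. "bytes 0-61439/150000" */
    content_range = catx(' ', 'bytes',
                         cats(byte_start, '-', byte_end, '/', filesize));
    output;
  end;
run;
```

With these assumed numbers the step writes three rows, covering bytes 0-61439, 61440-122879 and 122880-149999. Only the last chunk is allowed to be smaller than the others.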

Sample usage (after including the macro code available here):

%splitFile( sourceFile=/home/my-user-id/STAT1/data/spending2011.sas7bdat, maxsize=%sysevalf(1024*60), chunkLoc=/home/my-user-id/splitchunks, metadataOut=work._metaout );

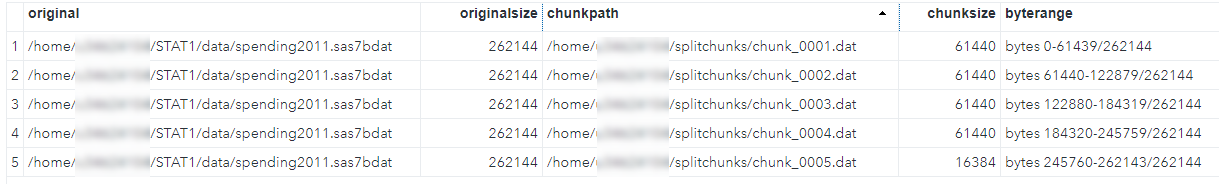

In this example, we're splitting a sas7bdat file into 60Kb-sized chunks and storing those chunks in a folder named ./splitchunks. The _METAOUT data set summarizes the output and looks something like this:

The maxsize= argument is optional; it's 320 KB by default. (Why 320 KB? Because the Microsoft Graph API documentation says that for its upload API, file chunks must be sized in multiples of 320 KiB, which is 327,680 bytes.) Also, chunkLoc= will default to your WORK location unless you specify a different path.
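If you want chunks larger than the default but still need to respect the multiple-of-320-KiB rule, you can round a target size down to the nearest multiple before calling the macro. The snippet below is a small sketch of that arithmetic; the macro variable names (graphUnit, wanted, units) and the 4,000,000-byte target are illustrative assumptions, not part of %splitFile.

```sas
%let graphUnit = 327680;   /* 320 KiB = 320 * 1024 bytes */
%let wanted    = 4000000;  /* hypothetical target chunk size, in bytes */

/* whole number of 320 KiB units that fit in the target (at least 1) */
%let units   = %sysfunc(max(1, %sysevalf(&wanted. / &graphUnit., floor)));
%let maxsize = %eval(&units. * &graphUnit.);

%put NOTE: maxsize=&maxsize. bytes (&units. units of 320 KiB);
```

For the assumed target this yields 12 units, or 3,932,160 bytes, and you could then pass maxsize=&maxsize. in the %splitFile call.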

The algorithm for creating the file pieces is straightforward. The DATA step code uses file functions like FREAD and FGET to stream the source file into a buffer, then uses FPUT and FWRITE to write that content to a series of output files, starting a new file when the size of the current file exceeds the target file chunk size. The method relies on block I/O operations that work similarly to their analogs in other programming languages, such as C (fread, fgetc, fputc, fwrite). I'm always amazed (and grateful) that in addition to its many high-level capabilities, the SAS programming language supports system programming tasks such as these.
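The following is a simplified sketch of that approach, not the %splitFile source itself. It copies one byte at a time for clarity, which is easy to follow but slow for very large files, and it starts a new output file as soon as the current one reaches the target size. The paths, the filerefs (_src, _chunk), the chunk naming scheme and the output column names are all assumptions for illustration, and the chunk folder is assumed to exist already.

```sas
/* Hypothetical locations: adjust for your environment */
%let srcFile  = /home/my-user-id/bigfile.dat;
%let chunkDir = /home/my-user-id/splitchunks;
%let maxSize  = 327680;

filename _src "&srcFile.";

data work.chunkmeta;
  length chunkpath $ 512 rec $ 1;
  keep chunkno chunkpath byte_start byte_end;

  /* open the source as binary with 1-byte records */
  fin = fopen('_src', 'I', 1, 'B');
  if fin <= 0 then do;
    put 'ERROR: could not open the source file.';
    stop;
  end;

  chunkno = 0; inchunk = 0; total = 0; fout = 0;
  do while (fread(fin) = 0);
    if fout = 0 then do;                /* start a new chunk file */
      chunkno = chunkno + 1;
      chunkpath = cats("&chunkDir./chunk_", put(chunkno, z4.), ".dat");
      rc = filename('_chunk', chunkpath);
      fout = fopen('_chunk', 'O', 1, 'B');
      if fout <= 0 then do;
        put 'ERROR: could not open ' chunkpath;
        rc = fclose(fin);
        stop;
      end;
      byte_start = total;
      inchunk = 0;
    end;
    rc = fget(fin, rec, 1);             /* read one byte from the buffer */
    rc = fput(fout, rec);               /* stage it in the output buffer */
    rc = fwrite(fout);                  /* write it to the chunk file */
    inchunk = inchunk + 1;
    total = total + 1;
    if inchunk >= &maxSize. then do;    /* chunk is full: close and record it */
      rc = fclose(fout);
      fout = 0;
      byte_end = total - 1;
      output;
    end;
  end;

  if fout > 0 then do;                  /* final, possibly smaller chunk */
    rc = fclose(fout);
    byte_end = total - 1;
    output;
  end;
  rc = fclose(fin);
  rc = filename('_chunk');              /* clear the temporary fileref */
  stop;
run;
```

A production version would read and write larger records per call to cut down on the number of function calls, and would check the return codes from each I/O function.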

I've described the macro in more detail -- with complete code -- in this article on SAS Communities. I've also added it as a standalone SAS program in this GitHub repository.

1 Comment

Nice post, Chris!

Here is a complementary way (and a macro) of splitting a dataset into several smaller ones: Splitting a data set into smaller data sets. It allows splitting datasets by number of pieces or by number of observations in each piece. It also describes splitting datasets sequentially or randomly ...