Editor's note: This article follows Curious about ChatGPT: Exploring the origins of generative AI and natural language processing.

As ChatGPT has entered the scene, those working in education at all levels have expressed many fears and uncertainties. Educators worry about cheating, and rightly so. ChatGPT can do everything from writing an essay in iambic pentameter to solving algebraic equations and explaining the solution. Some schools are rushing to ban the use of ChatGPT. Some educators take this tool so seriously that they’re turning away from online assignments and returning to strict paper and pencil. What’s an educator to do? First, let’s look past all the hype and hysteria and understand what ChatGPT does and where its merits are.

In the evenings, I teach Duke University undergrads a natural language processing (NLP) focused class called Computational Approaches to Human Language. I dedicated the second class of the semester to the elephant in the room: ChatGPT. With this huge disruptor hitting the scene, I’ve never been so thankful to be in the NLP space as I am today. I normally wouldn’t get into large language models like GPT until closer to the semester's midpoint, after students better understand how the landscape has evolved. However, these aren’t ordinary times, so jumping ahead was important. Most of my students had heard of ChatGPT and many had already played around with it.

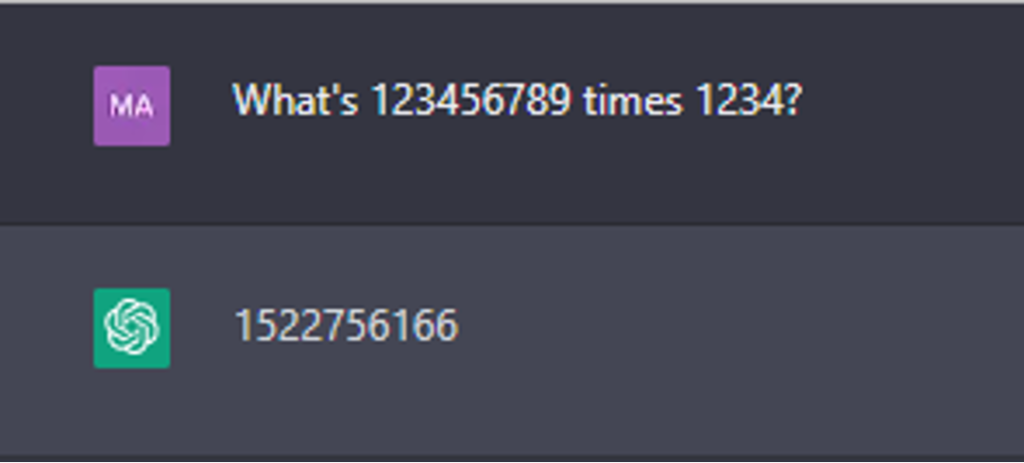

First, I walked through the evolution of NLP that has culminated in ChatGPT, including the content from the first article in this series, “Curious about ChatGPT: Exploring the origins of generative AI and natural language processing.” ChatGPT tends to work best at communicating in human language, maintaining memory of previous interactions in the same conversation, referencing physical, emotional and cultural experiences contained in the data it was trained with, and drawing dynamically from a store of scientific and technical expertise to answer questions. Then we got into things it wasn’t as good at, such as basic arithmetic. It can write you a program to perform the arithmetic, but it isn’t great at doing the math itself beyond two- or three-digit numbers.

I asked for a solution to a lengthy (but not too crazy) multiplication problem: What’s 123456789 times 1234? It returned 1,522,756,166. That’s not even close; the real answer is 152,345,677,626. To me, that’s understandable. It’s not a calculator. It’s a language model, and it’s improving its ability to solve equations. Sometimes those who are good with words aren’t so good with numbers! We all have our strengths. However, if your fifth grader intends to ask ChatGPT to do their long division homework, they may want to check ChatGPT’s work.
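The multiplication is easy to verify with a few lines of Python. The sketch below is only an illustration (the helper name `partial_products` is made up for this example); it splits the problem into one partial product per digit of 1234, the way long multiplication is done by hand, and then sums them.

```python
def partial_products(a, b):
    """Split a * b into one partial product per digit of b (long multiplication)."""
    return [
        a * int(digit) * 10**place
        for place, digit in enumerate(reversed(str(b)))
    ]


a, b = 123456789, 1234
parts = partial_products(a, b)   # ones, tens, hundreds, thousands
total = sum(parts)

for part in parts:
    print(f"{part:>20,}")
print(f"{a} x {b} = {total:,}")  # prints: 123456789 x 1234 = 152,345,677,626
```

Python integers have arbitrary precision, so `a * b` alone gives the same result; the partial products are shown only to make each step checkable.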

ChatGPT won’t ask follow-up questions or ask for clarification, and it definitely won’t admit when it doesn’t know something (see multiplication above). It just confidently responds with an answer, and you could be in trouble if you don’t check the answer.

AI Exploration Assignment

ChatGPT is well-trained in writing code—especially Python, so I gave my students an assignment.

- Create an account with OpenAI for ChatGPT.

- Ask ChatGPT to generate Python code to perform an action of your choice.

- Run the code and note any challenges or issues.

- Ask ChatGPT to refine or make modifications (at least 3 iterations).

- Submit your code and a brief write-up (no more than a page) of your experience, challenges, and issues.

Games and basic operations

The 60 students in the class had a mix of positive and not-so-positive experiences. Students who asked ChatGPT to do straightforward things, like create a hangman or tic tac toe game, generate sort algorithms or create art using ASCII characters had pretty good experiences. ChatGPT generated nearly flawless code or at least created code that required very little intervention. Games like 2048, FizzBuzz, and Sudoku were handled fairly well, though they required some fine-tuning to get them right.
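For readers who haven't seen it, FizzBuzz is about as small as these exercises get. The version below is a hand-written sketch of the problem, not the code ChatGPT produced for any student: print the numbers 1 through 15, replacing multiples of 3 with "Fizz", multiples of 5 with "Buzz", and multiples of both with "FizzBuzz".

```python
def fizzbuzz(n):
    """Return the FizzBuzz word for n, or n itself as a string."""
    if n % 15 == 0:          # divisible by both 3 and 5
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


for i in range(1, 16):
    print(fizzbuzz(i))
```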

Asking for more complex games, like recreating the dinosaur game on Google, led to more frustration, mainly because of a buffer limitation. It seems that ChatGPT has a buffer of around 75 lines when generating code. Once it hits that, the generated code cuts off, sometimes mid-line. That caused frustration because there wasn’t a great way to get ChatGPT to generate the rest of the code needed to finish.

Graphics

Students who asked ChatGPT to render graphics had less luck, and after thinking about it, that’s not surprising. They essentially asked the bot to render something graphically that humans can recognize. The renderings (except for the dog below) were at least recognizable, but not quite right. ChatGPT isn’t a human, so it stands to reason that it wouldn’t understand some of the nuances in things that we find instantly recognizable on sight.

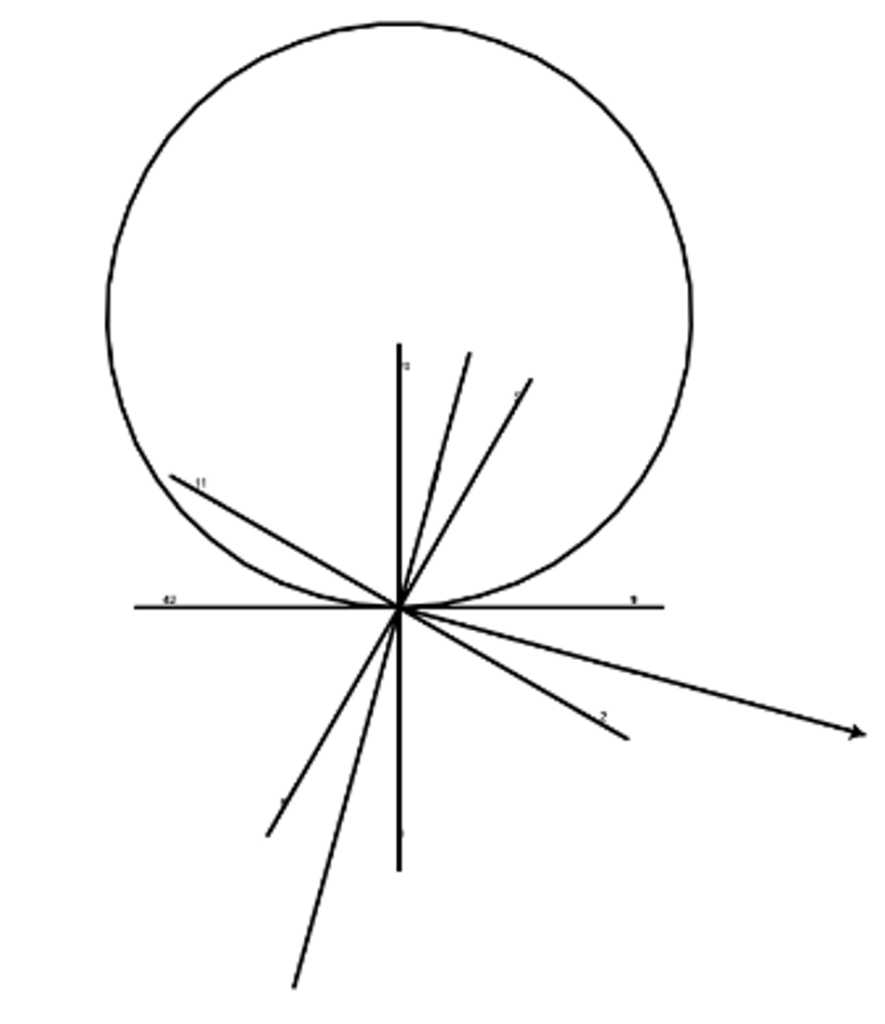

It generated this clock using the turtle package for one student. She added a layer of complexity by using Italian for all her prompts. ChatGPT handled the Italian prompts well, but the clock rendering needed help.

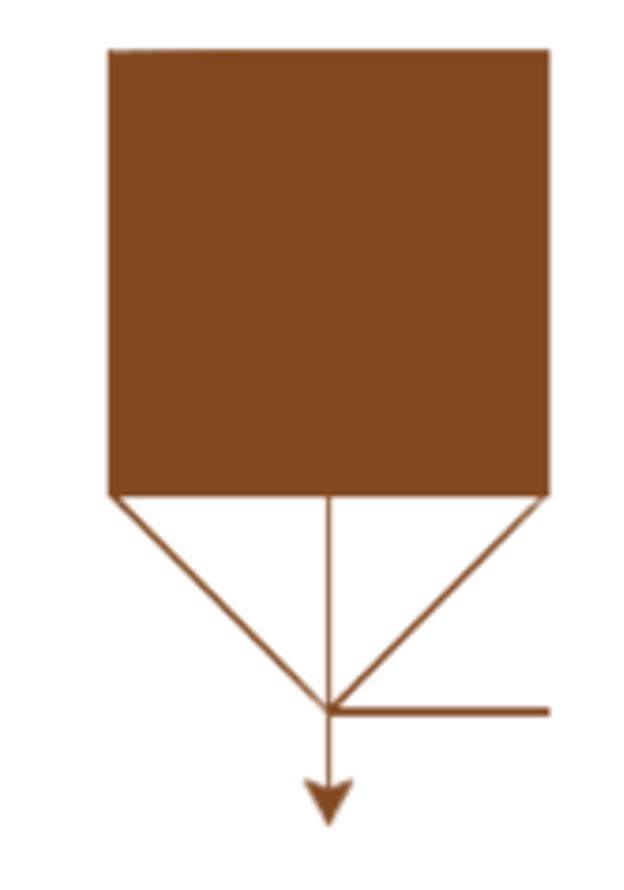

One student started with code that would prompt a user to draw a basic shape (circle, triangle, square). That worked respectably. When they moved on to harder shapes (tree, house, car), the best code generated by ChatGPT to draw a house resulted in this:

A back-and-forth asking ChatGPT to generate a card for a fantasy card trading game returned this. It looks like a card and could now be further refined.

Asking for code to generate a turtle did create a surprisingly good turtle using the turtle package, then did another decent job when asked to use the turtle to draw a square.
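For context, drawing a square with Python's standard `turtle` module takes only a handful of calls. The following is a minimal hand-written sketch, not the student's or ChatGPT's code; the function names `square_moves` and `draw` are invented for this illustration. The drawing step needs a graphical display, so `turtle` is imported only when `draw` is called.

```python
def square_moves(side=100):
    """Return the (distance, left-turn in degrees) steps that trace a square."""
    return [(side, 90)] * 4


def draw(moves):
    """Replay the moves with a turtle-shaped pen (opens a graphics window)."""
    import turtle  # imported lazily because it requires a display

    pen = turtle.Turtle()
    pen.shape("turtle")
    for distance, angle in moves:
        pen.forward(distance)
        pen.left(angle)
    turtle.done()  # keep the window open until it is closed
```

Calling `draw(square_moves(150))` on a machine with a display should trace a square with 150-pixel sides.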

It didn’t do quite as well when asked to have the turtle draw a dog (left) or a more realistic dog (right).

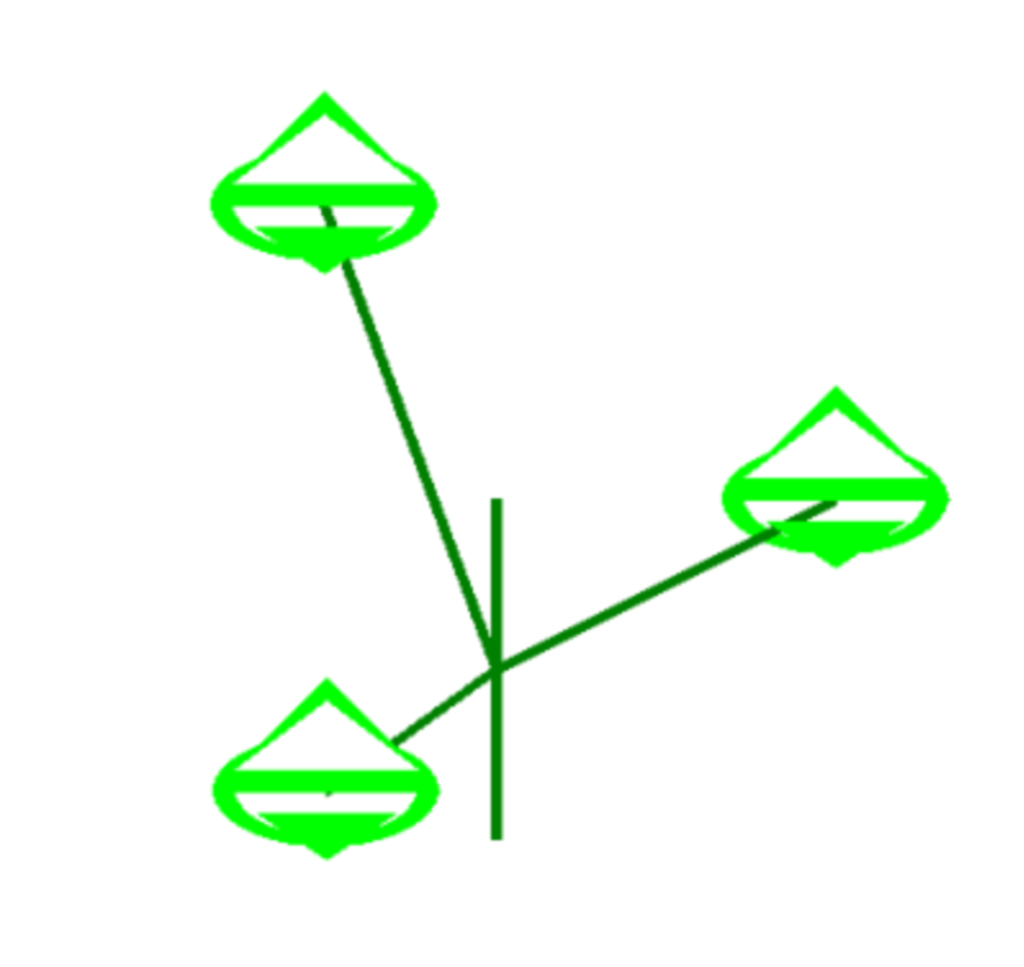

This output was rendered after asking ChatGPT to generate code to draw a flower, then a more realistic flower, a clover. The result wasn't spectacular even after asking for the clover leaves to be joined to the stem.

Audio, app building, and more

Code generated to handle audio files had mixed results. ChatGPT generated decent starting code but required a bit of debugging and documentation to fulfill the requirements.

One student had it walk them through building a mobile app. ChatGPT provided good guidance by helping with brainstorming to nail down the content for an app, then went further to help illustrate the steps to create a job and implement the code.

Still other students tried to get ChatGPT to generate code to extract text and found that ChatGPT embellished and added text that wasn’t requested (in machine learning lingo, this is called a hallucination).

Attempts to get ChatGPT to generate a chatbot weren’t terribly successful.

Several students had it generate code to solve problems presented on LeetCode, a website where people can practice solving coding problems and prepare for technical interviews. Interestingly, ChatGPT handled most of these types of requests well with few interventions required.

Two students found that ChatGPT can generate haikus, but they aren’t as clever as the haikus generated by real humans.

Ethical data acquisition

Attempts to have ChatGPT generate code to scrape websites (both government and commercial) for a few different applications were successful, but students received content policy warnings. I’ve explained in my class that just because you can scrape sites doesn’t mean it’s legal or acceptable, and that this is what the content policy warning was trying to convey. Data is valuable, and scraping is listed as a violation in the terms of service of many websites.
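One concrete habit worth teaching is to check a site's robots.txt before fetching anything. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content, the `example.com` URLs, the bot name, and the helper `is_allowed` are all hypothetical and exist only for illustration. Passing this check is not permission to scrape: robots.txt is only one signal, and a site's terms of service still apply.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, inlined so the example runs without network access.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for path in ("/public/index.html", "/private/data.html"):
    url = "https://example.com" + path
    print(path, is_allowed(EXAMPLE_ROBOTS_TXT, "class-project-bot", url))
```

For a live site, `RobotFileParser.set_url()` followed by `read()` would load the real file in place of the inlined text.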

Student Takeaways

Beyond the generated code, these students came away from this exercise understanding that using ChatGPT to do their work without putting in some of their own isn’t advisable. Many remarked that ChatGPT would return a wrong answer, then apologize and return the same wrong answer when challenged. Most of the generated code required some level of human intervention to run. Sometimes the fix was as simple as adding missing package import statements; other times there were significant syntax issues. Because the assignment required at least three revisions, many students noticed that the more revisions they went through, the worse the code got. Sometimes, ChatGPT invented code or seemed to just make things up.

There is value in having ChatGPT help with coding—especially with basic operations. It’s always easier to start with something than start with nothing. However, some students noted that for more complex tasks, it took them longer to get ChatGPT’s code to run than it would have if they had built it from scratch themselves.

The future of AI in Education

AI is here to stay. As educators, we have a responsibility to help our students understand the pros and perils of all available technology. We are not yet at a point where any of us, student or otherwise, should trust AI to do all the work for us, but it can be a remarkably useful tool given the right set of circumstances. Instead of being quick to ban technologies, we should view this as a unique opportunity to influence the future of AI and the next generation of users.

Learn more

- Explore ChatGPT on OpenAI.com

- Google AI updates: Bard and new AI features in Search (blog.google)

- Check out this Natural Language Processing e-book or visit Visual Text Analytics
