Are you looking for a Data Science easy button? The Data Science Pilot Action Set comes pretty close.

Validating and testing our supervised machine learning models is essential to ensuring that they generalize well. SAS Viya makes it easy to train, validate, and test our machine learning models.
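As a rough illustration of the train, validate, and test idea, here is a minimal sketch in plain Python. It does not use any SAS Viya API; the `partition` function, the 60/20/20 percentages, and the fixed seed are assumptions chosen only for this example.

```python
import random


def partition(rows, train_pct=60, validate_pct=20, seed=42):
    """Shuffle rows and split them into train, validation, and test lists.

    Hypothetical helper for illustration; percentages are whole numbers
    and whatever is left after train and validation becomes the test set.
    """
    if train_pct <= 0 or validate_pct < 0 or train_pct + validate_pct >= 100:
        raise ValueError("percentages must leave room for a test partition")
    shuffled = list(rows)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_pct // 100
    n_valid = len(shuffled) * validate_pct // 100
    return (
        shuffled[:n_train],                   # used to fit the model
        shuffled[n_train:n_train + n_valid],  # used to tune and compare models
        shuffled[n_train + n_valid:],         # held out for the final check
    )


train_rows, valid_rows, test_rows = partition(range(100))
print(len(train_rows), len(valid_rows), len(test_rows))
```

The model is fit on the first partition, tuned on the second, and scored once on the third, so the final score reflects data the model never saw.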

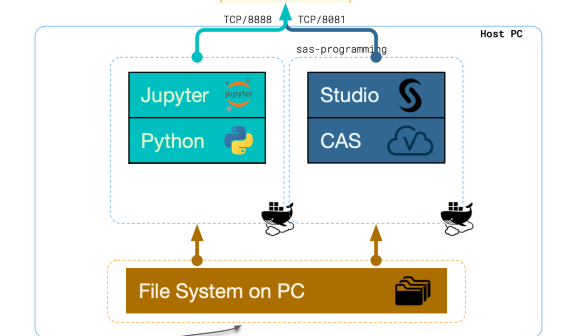

This series of videos spotlights a very powerful API that lets you use Python while also having access to the power of SAS Deep Learning.

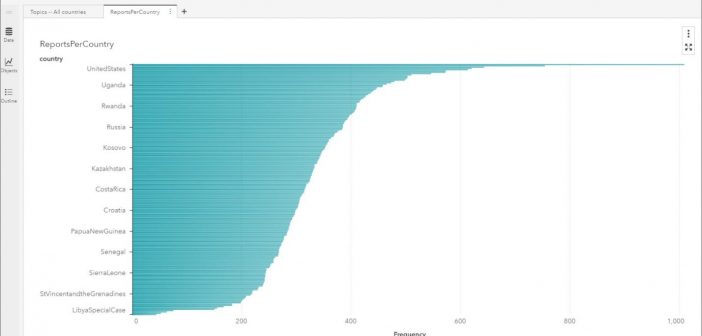

In this blog, I use data from the U.S. Department of State Trafficking in Persons (TIP) reports for the years 2013-2017 to accomplish two objectives: 1) to determine the main themes in TIP reports, and 2) to show how to work with ASTORE code to deploy models using SAS Viya 3.4 Visual Text Analytics 8.4.

The machine learning autogenerated concept and fact rules in VTA 8.4 make it easier to develop LITI rules that find and extract information from text documents. Text analytics provides valuable insights into many important problems, human trafficking among them.

A simple example of how you can combine SAS and open-source technologies to solve real business issues.

Neural networks, particularly convolutional neural networks, have become more and more popular in the field of computer vision. What are convolutional neural networks and what are they used for?
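To give a feel for the operation these networks are named after, here is a minimal sketch in plain Python of a single filter sliding over a tiny image. It is an illustration only, not SAS code: the `conv2d` function, the 3x4 image, and the edge-detecting kernel are all assumptions made for this example, and real convolutional layers learn their kernel values during training.

```python
def conv2d(image, kernel):
    """Slide a kernel over an image with no padding and a stride of 1.

    This is the cross-correlation form that deep learning libraries
    commonly call "convolution". Hypothetical helper for illustration.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Multiply the kernel with the window under it and sum the products.
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Dark pixels (0) on the left, bright pixels (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The output is nonzero only in the column where the dark region meets the bright one, which is the sense in which a filter "detects" a feature in an image.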

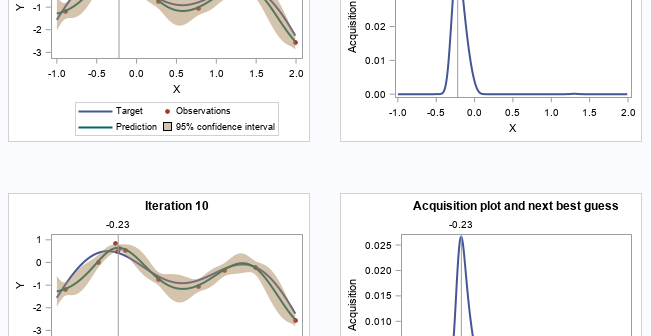

Learn how Bayesian optimization works through a simple demo.

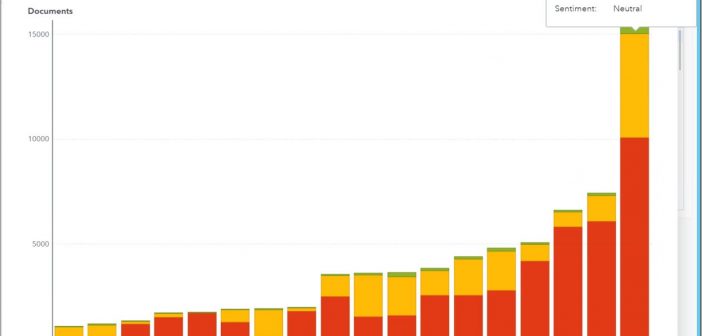

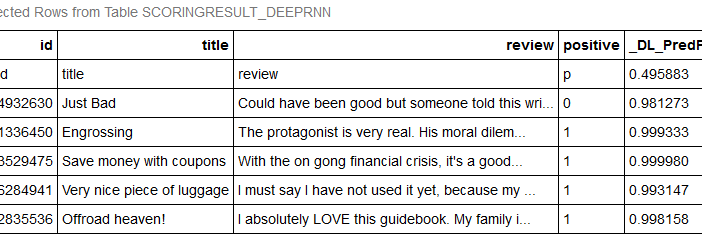

In this blog, I use a Recurrent Neural Network (RNN) to predict whether the opinion expressed in a given review is positive or negative. I treat this prediction as a text classification problem. The Sentiment Classification Model is trained using deepRNN algorithms, and the resulting model is used to predict whether new reviews are positive or negative.

Customer risk rating models play a crucial role in complying with the Know Your Customer (KYC) and Customer Due Diligence (CDD) requirements, which are designed to assess customer risk and prevent fraud. Today, the most common form of the Customer Risk Rating model is a score-based risk rating model. This