Regarding natural language processing and text analytics, we can accomplish tasks quickly, rigorously, and without fuss by using SAS Visual Text Analytics. It offers out-of-the-box options like topic modeling, sentiment analysis, and linguistic rules-based approaches.

Likewise, many data science goals can be achieved by using any programmatic or interface-based models available in SAS® Viya®. This post will review a popular manufacturing optimization use case that can be solved by using a SAS Optimization model. Using natural language conversation, we will demonstrate how we can use generative AI to build a digital assistant that interacts with the model.

Generative AI (GenAI) has enabled many advancements in how everyone interacts with data. With the rise in popularity of large language models (LLMs), such as GPT-3, Llama 2, and Bard, there has been an increasing demand to use GenAI in conjunction with our already robust SAS Viya toolkit. These tools, and many others available through SAS Viya, are successful, but there is a barrier: while many users have the domain knowledge and technical acumen to perform such tasks, developing or improving the models requires programming knowledge and time. But what if we could use natural language (spoken or typed human language) to interface with preexisting models? Leveraging LLMs to interpret natural language and trigger SAS programs on command is an efficient way to interact with SAS Viya. This approach gives users a no-code way to get easily interpretable results quickly, with SAS' ethical rigor and trustworthiness.

Use case

Let’s consider a recipe optimization use case. Several manufacturing customers use it to determine the optimal amounts of ingredients while satisfying quality and cost constraints. Figure 1 illustrates one such use case for a wallboard manufacturing company. At a high level, stucco, water, and some additives are mixed, and the mixture is baked in an oven, where excess water evaporates. The quality of the wallboard depends directly on the amounts of the input ingredients in the mixture and on the extent of water evaporation during baking. The company wants to maximize the yield of the manufacturing process to reduce wasted input ingredients, thereby reducing overall costs. The problem can be formulated as a mathematical optimization model by defining the lower and upper bounds of the input ingredients.

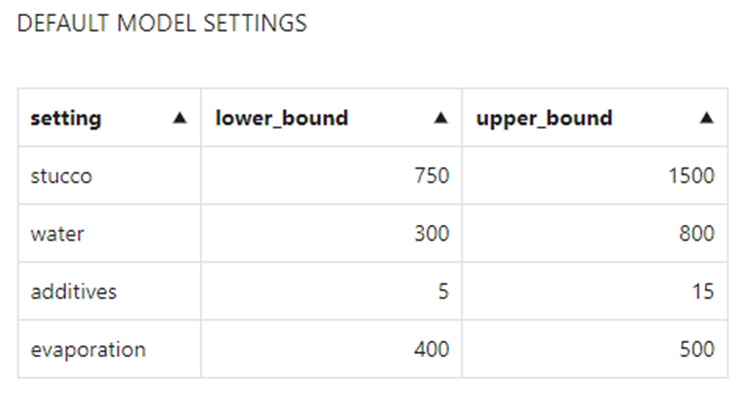

Figure 2 provides sample values of the lower and upper bounds of the input settings for the wallboard recipe optimization. By using board strength as the quality constraint, we can use PROC OPTMODEL to solve this optimization problem. We can find the optimal values of the input settings to maximize the plant's production yield.
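As a sketch, the formulation described above can be written as follows. The notation is assumed here for illustration: $x_i$ is input setting $i$ with lower and upper bounds $\ell_i$ and $u_i$, $Y$ is the yield, $S$ is the board strength, and $S_{\min}$ is a minimum acceptable strength. The post does not give the functional forms of $Y$ and $S$, and the direction of the strength constraint is an assumption.

$$
\begin{aligned}
\max_{x}\quad & Y(x_1,\dots,x_n) \\
\text{subject to}\quad & \ell_i \le x_i \le u_i, \quad i = 1,\dots,n, \\
& S(x_1,\dots,x_n) \ge S_{\min}.
\end{aligned}
$$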

In the typical life cycle of such an optimization model, customers usually ask to interact with the model so that they can easily change its parameters and get updated results. For example, a customer might want to rerun the optimization model with a different set of bounds on the input parameters and find the updated yield. An AI-based digital assistant is a powerful feature that lets customers do this regardless of their technical expertise. With this assistant, they can use natural conversation queries to rerun the model, change the input parameters, get updated results, and so on.

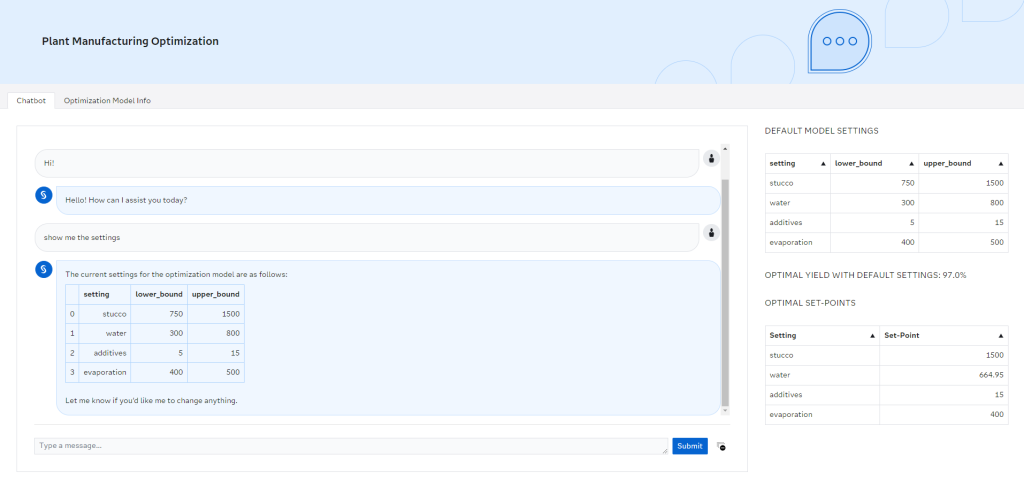

Figure 3 shows the assistant's layout. The user interacts with the chatbot on the left, and the setting bounds are displayed on the right. The optimal value of each setting is also shown, along with the maximum yield returned by the model.

Users can ask basic questions about recipe optimization and run scenarios by changing the input settings when interacting with the chatbot. The user has the following fixed set of options to interact with the data and optimization model:

- Run the optimization model with current settings and summarize the results.

- Request to change the input settings' lower or upper bounds.

- View the current settings table.

- Reset to default settings, run the optimization model, and view results.
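The four options above amount to a small set of operations on a settings table. The following is a minimal in-memory sketch of that idea, not the project's code: the class and function names, the default bound values, and the `run_model` callable (a stand-in for whatever submits the SAS model) are all hypothetical.

```python
from copy import deepcopy

# Hypothetical default bounds; the real values live in the settings table (Figure 2).
DEFAULT_SETTINGS = {
    "stucco": {"lower": 500.0, "upper": 900.0},
    "water": {"lower": 300.0, "upper": 700.0},
}


class SettingsStore:
    """In-memory stand-in for the settings table held in SAS."""

    def __init__(self, defaults):
        self._defaults = deepcopy(defaults)
        self.current = deepcopy(defaults)

    def change_bound(self, setting, direction, new_value):
        """Update one bound, rejecting unknown names and inverted ranges."""
        if setting not in self.current:
            raise KeyError(f"unknown setting: {setting}")
        if direction not in ("lower", "upper"):
            raise ValueError("direction must be 'lower' or 'upper'")
        candidate = dict(self.current[setting], **{direction: float(new_value)})
        if candidate["lower"] > candidate["upper"]:
            raise ValueError("lower bound cannot exceed upper bound")
        self.current[setting] = candidate

    def view(self):
        """Return a copy so callers cannot mutate the table by accident."""
        return deepcopy(self.current)

    def reset(self):
        """Restore the default bounds."""
        self.current = deepcopy(self._defaults)


def reset_and_run(store, run_model):
    """Restore defaults, then pass the table to the (hypothetical) model runner."""
    store.reset()
    return run_model(store.view())
```

Keeping validation inside `change_bound` means a malformed request fails before anything is sent to the model.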

Project architecture

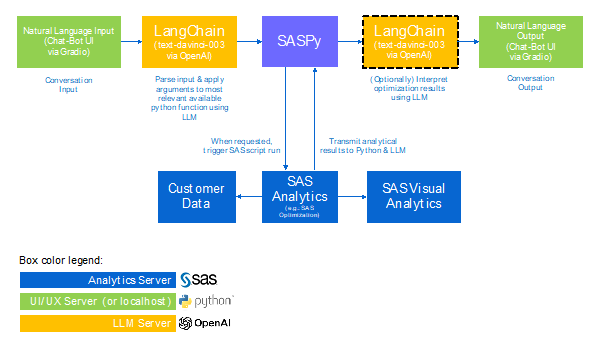

This project uses a combination of Python and SAS with different components distributed across three separate servers, as shown in Figure 4.

- UI/UX Server (green boxes):

- The chatbot interface is built by using the Gradio library in Python. This server (or localhost) runs Python along with UI/UX elements and handles LLM-based interactions by using the LangChain library.

- Analytics Server (blue boxes):

- This server hosts the customer data, runs SAS Viya, and can also host a SAS Visual Analytics dashboard if desired. The recipe optimization problem is coded using PROC OPTMODEL and run on the analytics server.

- LLM Server (orange boxes):

- This server hosts the LLM, which is ‘text-davinci-003’ in our case. The LangChain agent is initialized in the UI/UX server, and API calls are made from the agent to this server to understand which tool needs to be executed.

Here is an overview of the information flow when a user makes a query such as: “Change the lower bounds of stucco to 600”:

- The user inputs the request via the user interface.

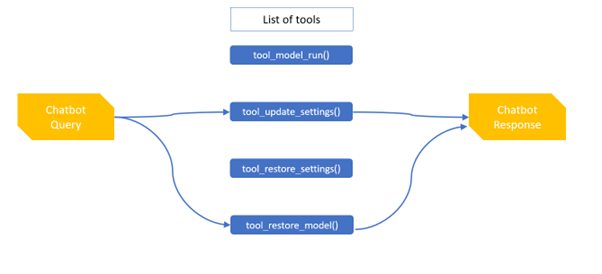

- The natural language input is sent to the LangChain Agent. An agent is a way of interacting with an LLM, in this case, text-davinci-003, with extra prompting provided by the LangChain Python library. This agent has been prompted or instructed on how to react to user input. LangChain gives it a list of tools or Python functions that enable it to perform actions, such as updating the settings if requested. The input (above) is parsed by the agent using prompts with LangChain. The agent then intelligently applies the correct arguments to the relevant Python function.

- Because this input requires a table to be updated in SAS, the selected tool uses SASPy to update the table on SAS Viya with the new lower bound for stucco. For this use case, the table stays in the work library and is wiped when our CAS session ends.

- The new table is returned to our LangChain agent via the Python function that the LLM selected in step 2. The agent parses this table and relays it to the user.

- The user views the results in the user interface and can make another request.
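The table update in this flow could look roughly like the sketch below. It builds a PROC SQL step and submits it through a SASPy-style session object. The table name `work.settings`, the column names (`setting`, `lower_bounds`, `upper_bounds`), and both function names are assumptions for illustration; only the `submit` call and its `LOG` key follow SASPy's session interface.

```python
# Setting names come from the tool description in this post; the column
# names are hypothetical.
ALLOWED_SETTINGS = {"stucco", "water", "evaporation", "temperature"}
ALLOWED_COLUMNS = {"lower_bounds", "upper_bounds"}


def build_update_code(direction, setting, new_value, table="work.settings"):
    """Build a PROC SQL step that updates one bound for one setting.

    Inputs are checked against fixed sets because they are interpolated
    into SAS code; the table layout is an assumption.
    """
    if direction not in ALLOWED_COLUMNS:
        raise ValueError(f"unknown direction: {direction!r}")
    if setting not in ALLOWED_SETTINGS:
        raise ValueError(f"unknown setting: {setting!r}")
    value = float(new_value)
    return (
        "proc sql;\n"
        f"  update {table} set {direction} = {value}\n"
        f"  where setting = '{setting}';\n"
        "quit;"
    )


def update_bound(session, direction, setting, new_value):
    """Submit the update through a SASPy-style session and return the SAS log."""
    result = session.submit(build_update_code(direction, setting, new_value))
    return result["LOG"]
```

With a real connection, `session` would be a `saspy.SASsession()` instance; any object with a compatible `submit` method works for testing.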

LangChain: Agents, prompts, and custom tools

We use LangChain agents to interact with OpenAI’s text-davinci-003 model, and custom tools to execute SAS code and update our setting parameters. Without getting too technical, we’ll focus here on how our prompt engineering gets the LLM to follow instructions, fulfill user requests, and behave as intended.

Prompt Engineering

We have used prompts in the chatbot in several ways to ensure consistency in performance and reduce LLM hallucinations. For this, we have written extensive information into variables provided via LangChain: the prefix, format instructions, suffix, and tool descriptions.

Prefix:

This short description tells the LLM what kind of assistant it is. The prefix introduces the assistant to the user and explains its capabilities. The chatbot will use this line of text if the user asks what it can do. It is prepended to the conversation to set the context for the LLM as it responds to the user.

Example:

“You are a helpful assistant who can help with manufacturing questions and recipe optimization. Based on your input, you can generate humanlike text responses, enabling you to engage in natural conversation and provide coherent and relevant responses to the task at hand.”

Format Instructions:

This aids the LLM in formatting responses back to the user. It illustrates two possible outcomes to the LLM: when a tool is required to execute the user request and when a tool is not required in the response.

Example:

“To use a tool, please use the following format:

Thought: Do I need to use a tool? Yes

Action: The action to take must be one of [tool names]

Action input: The input to the action in JSON format

Observation: The result of the action

When you have a response to say to the human, or if you do not need to use a tool, you must use the following format:

Thought: Do I need to use a tool? No.

AI: [your response here]”
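To make the format concrete, here is a minimal sketch of how a completion written in this format could be split into either a tool call or a direct reply. It is not LangChain's own parser; the function name `parse_agent_output` and the tool name `change_bounds` used in the usage example are hypothetical.

```python
import json
import re

# Matches the "Action" / "Action input" pair, stopping before any Observation line.
ACTION_RE = re.compile(
    r"Action:\s*(?P<tool>.+?)\s*\n\s*Action input:\s*(?P<input>.+?)\s*"
    r"(?:\n\s*Observation:|\Z)",
    re.IGNORECASE | re.DOTALL,
)
# Matches the direct-reply branch of the format.
FINAL_RE = re.compile(r"AI:\s*(?P<reply>.+)", re.DOTALL)


def parse_agent_output(text):
    """Classify one LLM completion as a tool call or a direct reply."""
    match = ACTION_RE.search(text)
    if match:
        raw = match.group("input").strip()
        try:
            tool_input = json.loads(raw)
        except json.JSONDecodeError:
            tool_input = raw  # keep the raw string if it is not valid JSON
        return {"type": "tool", "tool": match.group("tool").strip(), "input": tool_input}
    match = FINAL_RE.search(text)
    if match:
        return {"type": "reply", "text": match.group("reply").strip()}
    raise ValueError("completion did not follow the expected format")
```

For example, a completion ending in `Action: change_bounds` followed by a JSON `Action input` line yields a `tool` result, while one ending in `AI: ...` yields a `reply` result.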

Suffix:

This provides the LLM with any final information that can be explained to the user. It details any tools at the LLM’s disposal and is the last place to reinforce high-importance instructions. It is appended to the LLM’s instructions to provide additional context.

Example:

“You are a manufacturing and recipe optimization assistant. You are strict because you can only use tools to discuss recipe optimization.

Begin!

[chat history]

New input: [input]

Since you are a text-based language model, you must use tools to execute requests when necessary.

Thought: Do I need to use a tool? Let’s think step by step.”

Tool descriptions:

Tool descriptions are strings passed to the LLM that explain what each tool is used for, how to execute it (for example, whether any parameters are required and how to map them from the user input), and what the tool outputs. They help the LLM select the right tool, when one is needed, for the user’s request.

Example:

“Use this tool when changing the lower or upper bounds of one of the settings. The parameters for this tool are ‘direction’, whether it’s the lower or upper bounds that need to be changed, ‘setting’, the name of the setting to be changed, i.e. stucco, water, evaporation, or temperature, and ‘new_value’ the new value to be changed.

For example, if the user asks ‘change the lower bounds of stucco to 100’, the input into the tool should be:

(‘lower_bounds’, ‘stucco’, 100)”
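On the Python side, the tool still has to turn that input string into checked arguments before touching any data. A minimal sketch, assuming the agent passes the tuple as text: the function name is hypothetical, and `upper_bounds` is assumed by analogy with the `lower_bounds` value shown in the example.

```python
import ast

VALID_DIRECTIONS = {"lower_bounds", "upper_bounds"}  # 'upper_bounds' is assumed
VALID_SETTINGS = {"stucco", "water", "evaporation", "temperature"}


def parse_tool_input(raw):
    """Turn "('lower_bounds', 'stucco', 100)" into validated arguments."""
    try:
        # literal_eval only accepts Python literals, so no code is executed.
        parsed = ast.literal_eval(raw.strip())
    except (ValueError, SyntaxError) as exc:
        raise ValueError(f"could not parse tool input: {raw!r}") from exc
    if not isinstance(parsed, tuple) or len(parsed) != 3:
        raise ValueError("expected (direction, setting, new_value)")
    direction, setting, new_value = parsed
    if not isinstance(direction, str) or direction not in VALID_DIRECTIONS:
        raise ValueError(f"unknown direction: {direction!r}")
    if not isinstance(setting, str) or setting.lower() not in VALID_SETTINGS:
        raise ValueError(f"unknown setting: {setting!r}")
    if isinstance(new_value, bool) or not isinstance(new_value, (int, float)):
        raise ValueError("new_value must be a number")
    return direction, setting.lower(), float(new_value)
```

Rejecting anything outside the known directions and settings keeps a misread request from reaching the SAS table.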

These different types of prompts are passed to the LLM when the agent is instantiated, so they are actively used in the chatbot user interface.
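To show how the four pieces fit together, here is a minimal sketch that assembles them into a single prompt string with plain Python. The function name, the ordering of the sections, and the way the bracketed placeholders from the examples are filled in are assumptions for illustration; LangChain performs its own assembly when the agent is created.

```python
def build_prompt(prefix, tools, format_instructions, suffix, chat_history, user_input):
    """Join prefix, tool descriptions, format instructions, and suffix.

    `tools` maps a tool name to its description string. The bracketed
    placeholders mirror the ones shown in the example prompts.
    """
    tool_lines = "\n".join(f"{name}: {desc}" for name, desc in tools.items())
    tool_names = ", ".join(tools)
    filled_format = format_instructions.replace("[tool names]", f"[{tool_names}]")
    filled_suffix = suffix.replace("[chat history]", chat_history).replace(
        "[input]", user_input
    )
    return "\n\n".join([prefix, "Tools:\n" + tool_lines, filled_format, filled_suffix])


# Usage with shortened versions of the example prompts; 'change_bounds' is a
# hypothetical tool name.
prompt = build_prompt(
    prefix="You are a helpful assistant who can help with manufacturing questions.",
    tools={"change_bounds": "Use this tool when changing the lower or upper bounds."},
    format_instructions="Action: The action to take must be one of [tool names]",
    suffix="Begin!\n\n[chat history]\n\nNew input: [input]",
    chat_history="",
    user_input="Change the lower bounds of stucco to 600",
)
```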

Summary

This project is a scalable, reproducible solution that can be adapted into a digital assistant for nearly any SAS Viya task. The architecture, now in place, has already been repurposed for customer use cases, such as warehouse space optimization, and continues to grow. The current infrastructure could support various future use cases, including fraud detection, warehouse optimization, text analytics, and document summarization, all by adapting the SAS script and the prompt engineering.