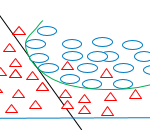

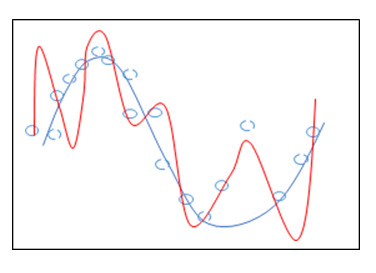

The popular Spanish saying ‘El que mucho abarca poco aprieta’ (roughly, ‘he who grasps at too much holds on to little’) has become highly relevant in the age of data analysis. When data scientists, data analysts, and analysts in general build models to predict behaviors, trends, patterns, and the like, they face the challenge of grasping enough to hold on