This is the third post in my series on machine learning techniques and best practices. If you missed the earlier posts, read the first one now, or review the whole machine learning best practices series.

Data scientists commonly use machine learning algorithms, such as gradient boosting and decision forests, that automatically build many models for you. The individual models are then combined to form a potentially stronger solution. Gradient boosted trees are often among the most accurate machine learning classifiers. In my own supervised learning efforts, I almost always try each of these models as challengers.

When using random forest, be careful not to set the tree depth too shallow. The goal of decision forests is to grow many large, deep trees, each built with some randomness (think forests, not bushes). A single deep tree does tend to overfit the data and generalize poorly, but a combination of many such trees will capture the nuances of the space in a generalized fashion.
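One way to check whether a depth limit is too shallow is to compare validation accuracy across a few settings. The sketch below assumes scikit-learn and uses synthetic data from `make_classification` as a stand-in for a real problem; the specific depths, tree count, and split size are illustrative choices, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data stands in for a real problem (an assumption for illustration).
X, y = make_classification(
    n_samples=2000, n_features=20, n_informative=8, random_state=0
)
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

depth_scores = {}
for max_depth in (2, 8, None):  # None puts no cap on tree depth
    forest = RandomForestClassifier(
        n_estimators=100, max_depth=max_depth, random_state=0
    )
    forest.fit(X_train, y_train)
    # Accuracy on held-out data, not on the rows the trees were grown on.
    depth_scores[max_depth] = forest.score(X_valid, y_valid)

for max_depth, accuracy in depth_scores.items():
    print(f"max_depth={max_depth}: validation accuracy={accuracy:.3f}")
```

How much the deeper settings help depends on the data, so it is worth running this kind of comparison on your own validation set rather than relying on a default.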

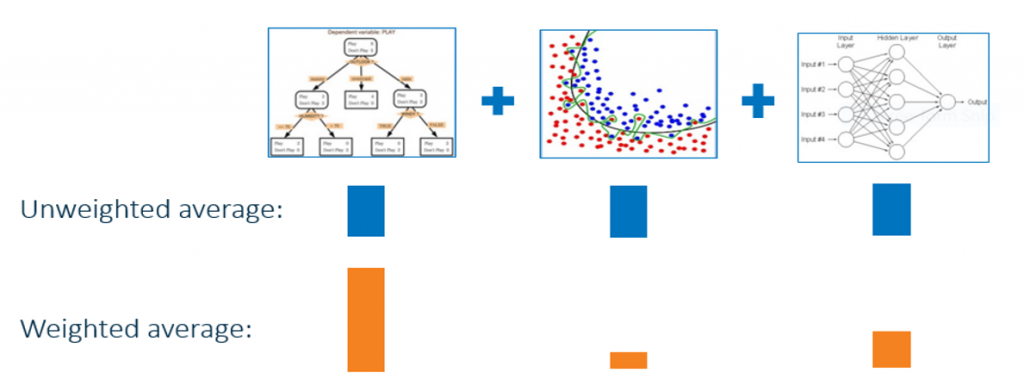

Some algorithms fit better than others within specific regions or boundaries of the data. A best practice is to combine different modeling algorithms. You may also want to place more emphasis or weight on the modeling method that has the overall best classification or fit on the validation data. Sometimes two weak classifiers can do a better job than one strong classifier in specific spaces of your training data.
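A minimal sketch of that weighting idea, in plain Python: each model's predicted event probability is averaged, with weights proportional to its validation accuracy. The labels, the probabilities, and the helper names `validation_accuracy` and `weighted_blend` are all hypothetical, made up for illustration.

```python
def validation_accuracy(probabilities, labels, threshold=0.5):
    """Share of rows where the thresholded probability matches the label."""
    hits = sum((p >= threshold) == bool(y) for p, y in zip(probabilities, labels))
    return hits / len(labels)


def weighted_blend(model_probabilities, weights):
    """Weighted average of the models' event probabilities, row by row."""
    total = sum(weights)
    return [
        sum(w * p for w, p in zip(weights, row)) / total
        for row in zip(*model_probabilities)
    ]


# Hypothetical validation labels and event probabilities from two models.
y_valid = [1, 0, 1, 0, 1, 0]
gbm_probs = [0.9, 0.2, 0.4, 0.1, 0.8, 0.3]        # misses the third row
forest_probs = [0.6, 0.4, 0.7, 0.6, 0.7, 0.55]    # misses the fourth and sixth rows

# The model with the better validation accuracy gets the larger weight.
weights = [validation_accuracy(p, y_valid) for p in (gbm_probs, forest_probs)]
blended = weighted_blend([gbm_probs, forest_probs], weights)

print("weights:", [round(w, 3) for w in weights])
print("blended accuracy:", validation_accuracy(blended, y_valid))
```

On this toy data each model misclassifies at least one row, while the blend classifies all six correctly because the models err in different places. In practice, the weights should be estimated on data separate from the data used to judge the final blend.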

As you become experienced with machine learning and master more techniques, you’ll find yourself continuing to address rare event modeling problems by combining techniques.

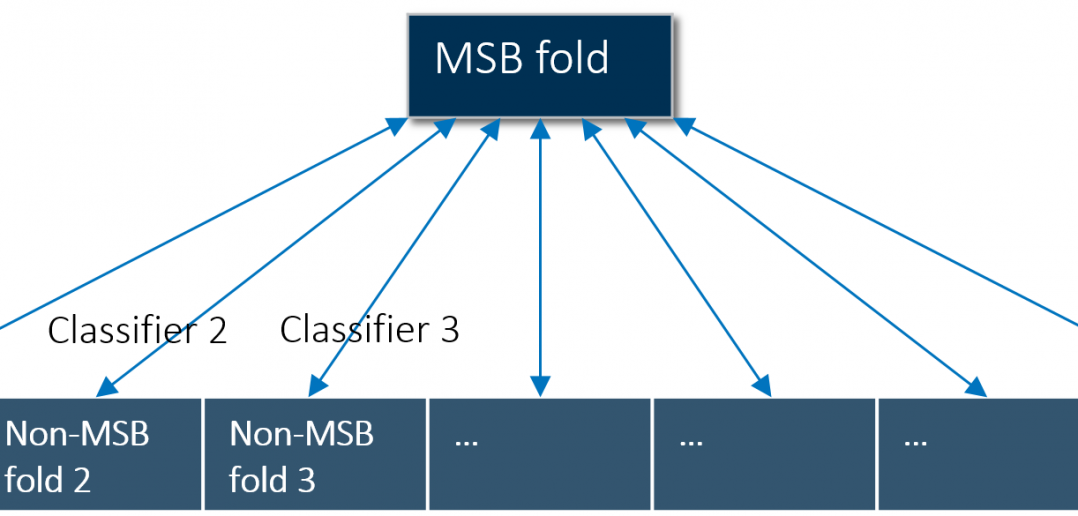

Recently, one of my colleagues developed a model to identify unlicensed money service businesses. The event rate was only about 0.09%. To solve the problem, he used multiple techniques:

- First, he developed k-fold samples by randomly selecting a subsample of nonevents in each of his 200 folds, while making sure he kept all the events in each fold.

- He then built a random forest model in each fold.

- Lastly, he ensembled the 200 random forests, which ended up being the best classifier among all the models he developed.
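The three steps above can be sketched roughly as follows. This is not my colleague's actual code: it assumes scikit-learn and NumPy, uses synthetic data with about 1% events, builds 10 folds instead of 200 to keep the run short, and picks a 5:1 nonevent-to-event ratio and simple probability averaging as illustrative choices.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Synthetic rare-event data (about 1% events), standing in for the real problem.
X, y = make_classification(
    n_samples=20000, n_features=15, n_informative=6,
    weights=[0.99], flip_y=0, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

event_idx = np.flatnonzero(y_train == 1)
nonevent_idx = np.flatnonzero(y_train == 0)

n_folds = 10                               # the post describes 200 folds
nonevents_per_fold = 5 * len(event_idx)    # assumed ratio, not from the post

forests = []
for fold in range(n_folds):
    # Step 1: keep every event, draw a fresh random subsample of nonevents.
    sampled = rng.choice(nonevent_idx, size=nonevents_per_fold, replace=False)
    fold_idx = np.concatenate([event_idx, sampled])
    # Step 2: fit one random forest per fold.
    forest = RandomForestClassifier(n_estimators=50, random_state=fold)
    forest.fit(X_train[fold_idx], y_train[fold_idx])
    forests.append(forest)

# Step 3: ensemble by averaging each forest's predicted event probability.
ensemble_scores = np.mean(
    [forest.predict_proba(X_test)[:, 1] for forest in forests], axis=0
)
print("mean score for events:   ", ensemble_scores[y_test == 1].mean())
print("mean score for nonevents:", ensemble_scores[y_test == 0].mean())
```

Because nonevents are undersampled in every fold, the averaged scores are useful for ranking cases but will overstate the true event probability unless they are recalibrated.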

This is a pretty big computational problem, so it's important to be able to build the models in parallel across several data nodes so that they train quickly.

If there are other tips you want me to cover, or if you have tips of your own to share, leave a comment on this post.

My next post will be about model deployment, and you can click the image below to read all 10 machine learning best practices.
