Welcome to the continuation of my series Getting Started with Python Integration to SAS Viya. In previous posts, I discussed how to connect to the CAS server, how to execute CAS actions, and how your data is organized on the CAS server. In this post I'll discuss loading client-side CSV files into CAS.

Loading data from a client-side file is appropriate for smaller data. If the data sets become larger, it is more efficient to use a server-side load with the loadTable action to access and load the data. For more information check out my previous post loading server-side files into memory,

In this example, I'm using Python on my laptop to connect to the CAS server. Client-side files consist of files on my laptop (client). In these examples I'll load the heart.csv file from the SAS® Viya® Example Data Sets webpage to the CAS server. There are multiple ways to load client-side CSV files into CAS. I'll show you how to do it using the familiar Pandas API in the SWAT package with the read_csv method. I'll also show you using the upload_file and upload_frame SWAT methods.

Using the Pandas API in the SWAT package - read_csv

First, I'll begin with the familiar read_csv method from the SWAT package and store the results in castbl. The SWAT read_csv method calls the Pandas read_csv method, creates a client-side DataFrame, then uploads the DataFrame to the CAS server as an in-memory table. The main difference is the casout parameter. The casout parameter is specific to the SWAT package. It enables you to specify the output CAS table information like the name of the new distributed table and the in-memory location. Here, I'll name the CAS table heart_read_csv and place it in the Casuser caslib. I'll also specify the replace parameter to replace the CAS table if it already exists.

castbl = conn.read_csv(r'https://support.sas.com/documentation/onlinedoc/viya/exampledatasets/heart.csv', casout = {'name':'heart_read_csv', 'caslib':'casuser', 'replace':True}) # and the results NOTE: Cloud Analytic Services made the uploaded file available as table HEART_READ_CSV in caslib CASUSER(Peter). NOTE: The table HEART_READ_CSV has been created in caslib CASUSER(Peter) from binary data uploaded to Cloud Analytic Services. |

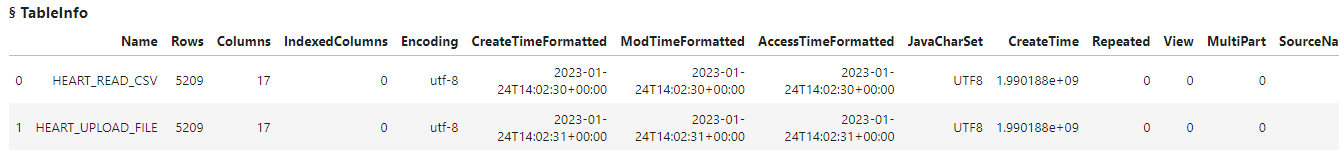

Next, I'll execute the tableInfo CAS action to view available CAS tables in the Casuser caslib.

conn.tableInfo(caslib = 'casuser') |

The results above show that the table was loaded to the CAS server.

Let's view the type and value of the castbl object.

display(type(castbl), castbl) # and the results swat.cas.table.CASTable CASTable('HEART_READ_CSV', caslib='CASUSER(Peter)') |

The results show that castbl is a CASTable object and simply references the CAS table on the CAS server.

Lastly, I'll preview the CAS table with the SWAT head method.

castbl.head() |

The SWAT head method returns 5 rows from the CAS table to the client.

The read_csv method enables you to use all of the available Pandas parsers through the SWAT package to upload data to the distributed CAS server. The read_csv method parses the data on the client into a DataFrame, and then uploads the DataFrame to the CAS server.

Using the upload_file method in the SWAT package

Instead of using the Pandas API through the SWAT package you can also use the upload_file SWAT method. The upload_file method transfers the file to CAS server and then all parsing is done on the server. The upload_file method is not as robust as the read_csv method, but can be a bit faster than client-side parsing. Upload_file can be used to upload other file types to the CAS server, not just CSV files.

Here, I'll load the same CSV file to the CAS server as in the previous example. This time I'll use upload_file method and name the CAS table heart_upload_file. I'll store the results in castbl2.

castbl2 = conn.upload_file(r'https://support.sas.com/documentation/onlinedoc/viya/exampledatasets/heart.csv', casout = {'name':'heart_upload_file', 'caslib':'casuser', 'replace':True}) # and the results NOTE: Cloud Analytic Services made the uploaded file available as table HEART_UPLOAD_FILE in caslib CASUSER(Peter). NOTE: The table HEART_UPLOAD_FILE has been created in caslib CASUSER(Peter) from binary data uploaded to Cloud Analytic Services. |

The results show that the CSV file was uploaded to CAS successfully.

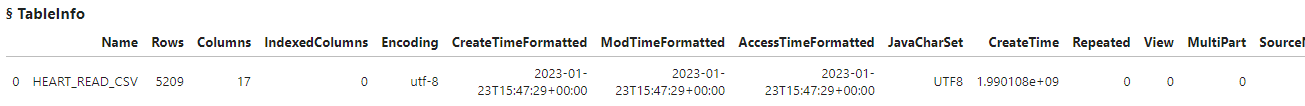

I'll view the available CAS table using the tableInfo action.

conn.tableInfo(caslib = 'casuser') |

The results show that now I have two CAS tables in memory.

Using the SWAT upload_frame method

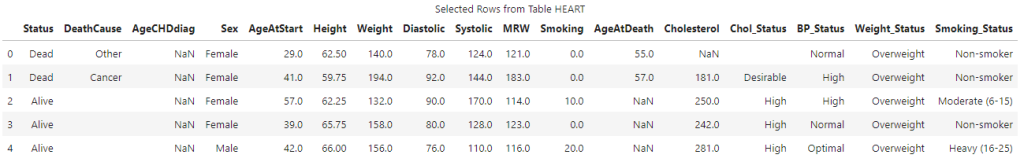

Another useful SWAT method is the upload_frame method. I like using this method if I'm preparing a DataFrame on my client using Pandas, then need to transfer the Pandas DataFrame to the CAS server for additional processing or to use another SAS Viya application like SAS Visual Analytics.

For example, here is some traditional Pandas code to read the same CSV file as before and then prepare it using traditional Pandas. The code is renaming columns, creating calculated columns and dropping a column.

conn.tableInfo(caslib = 'casuser') ## Read the data into a DataFrame df_raw = pd.read_csv(r'https://support.sas.com/documentation/onlinedoc/viya/exampledatasets/heart.csv') ## Prepare the DataFrame df = (df_raw .rename(columns = lambda colName: colName.upper()) .assign( STATUS = lambda _df: _df.STATUS.str.upper(), DEATHCAUSE = lambda _df: _df.DEATHCAUSE.fillna('Still Alive').str.lower() ) .drop('AGEATSTART', axis=1) ) df.head() |

Now that I have the final DataFrame, I can simply upload it to the CAS server using the upload_frame method. Again, the casout parameter specifies output CAS table information.

castbl3 = conn.upload_frame(df, casout = {'name':'heart_upload_frame', 'caslib':'casuser', 'replace':True}) # and the results NOTE: Cloud Analytic Services made the uploaded file available as table HEART_UPLOAD_FRAME in caslib CASUSER(Peter). NOTE: The table HEART_UPLOAD_FRAME has been created in caslib CASUSER(Peter) from binary data uploaded to Cloud Analytic Services. |

The results show that the Pandas DataFrame was successfully uploaded to the CAS server.

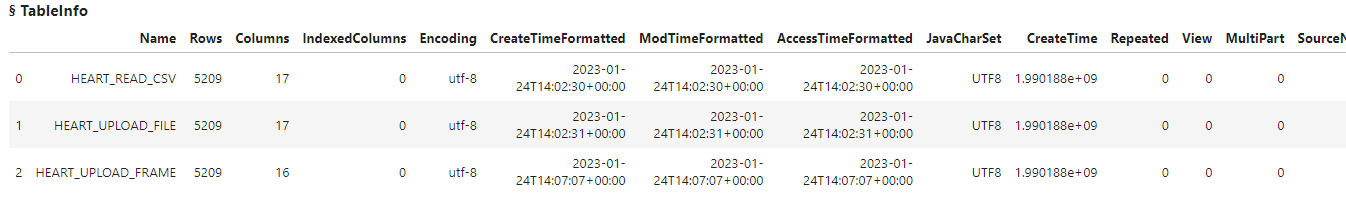

Lastly I'll view available CAS tables.

Summary

In this post I discussed using the read_csv, upload_file and upload_frame methods for loading client-side CSV files into CAS. Depending on the size of your data and your parsing needs, you may consider one method over the other.

Loading data from the client side into memory onto the CAS server will be slower than loading server-side files into memory. Remember, server-side files are data sources that the CAS server has direct access to like a network path or database. Client-side files are available on your Python client. Client-side data loading is intended for smaller data sets. For more information check out the Client-Side Data Files and Sources section in the SWAT documentation page.

Additional and related resources

- Getting Started with Python Integration to SAS® Viya® - Index

- SWAT API Reference

- Client-Side Data Files and Sources

- SAS Course - SAS® Viya® and Python Integration Fundamentals

- SAS® Cloud Analytic Services: Fundamentals

- CAS Action Documentation

- CAS Action! - a series on fundamentals

- Two Simple Ways to Import Local Files with Python in CAS (Viya 3.5)

2 Comments

Great question.

By default all session scope CAS tables (temporary tables) are cleared from memory when you disconnect from the CAS server. The same thing happens when using Pandas. All DataFrames are cleared from memory when you close out of Python. This is the default behavior.

You have multiple options. You can save the table to disk, or you can promote the table to global scope:

- First you can save the table back to disk. To do that use the table.save CAS action: https://go.documentation.sas.com/doc/en/pgmsascdc/default/caspg/cas-table-save.htm. This is similar to saving a DataFrame using a to_method in pandas.

Example: castbl3.save(name='myfilesaved.csv', caslib='casuser')

You can specify any file extension, not just CSV.

- You can also promote a CAS table as a global scope table. That means that table will live in memory as a permanent table. One way to do this is to add the 'promote':True parameter in casout.

castbl3 = conn.upload_frame(df, casout = {'name':'heart_upload_frame',

'caslib':'casuser',

'promote':True})

https://go.documentation.sas.com/doc/en/pgmsascdc/default/caspg/cas-table-loadtable.htm#SAS.cas-table-loadtable-casout

More information on session scope (temp tables) vs global scope tables: https://go.documentation.sas.com/doc/en/pgmsascdc/default/casfun/n09ssmi0ko8uyfn1022ezo2hv0pm.htm#p0mbflp8ta6iq3n1aswoae5s5g76

Is there a way to permanently save those table in cas library even after the connection is closed?

It looks like once the connection is closed, it will remove the table from memory in viya.