Navigating the interpretability paradox of autonomous AI: Can we maintain trust and transparency without sacrificing performance?

AI has rapidly evolved from simple, rule-based systems into sophisticated autonomous agents capable of making decisions without direct human oversight. These advanced systems, known as "agentic AI," go beyond basic automation to independently sense environments, evaluate options, and take actions to achieve specified objectives. However, as AI autonomy expands, a critical question emerges: Can AI systems that act autonomously still be explainable, and more importantly, how do we govern them responsibly?

In this article, we explore this interpretability paradox, identify the unique challenges posed by agentic AI, and uncover promising solutions that balance performance, autonomy, and transparency.

Understanding the black box dilemma

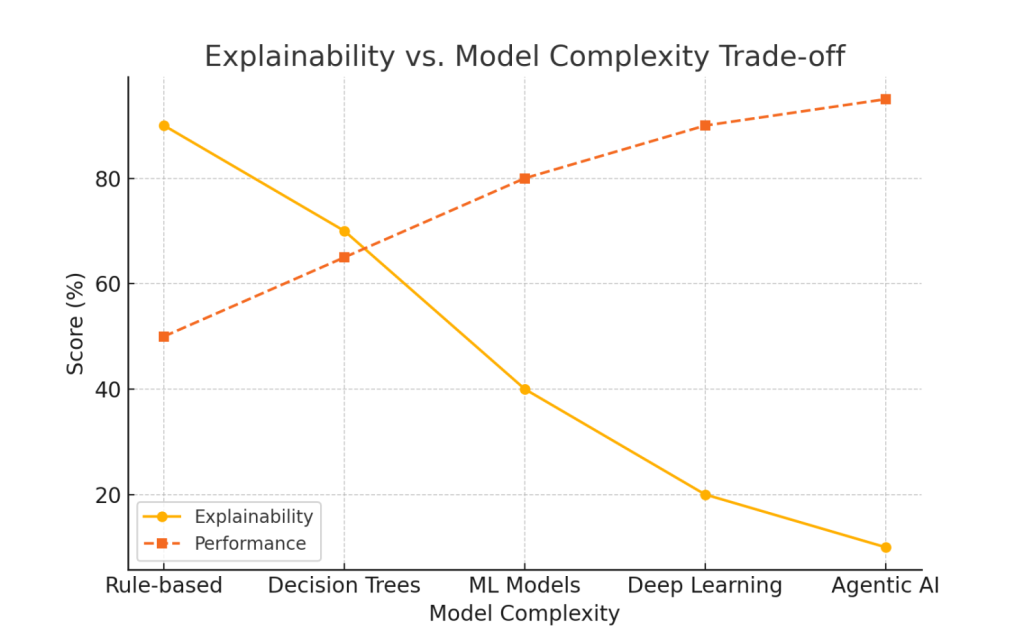

Traditional machine learning models, like decision trees or linear regressions, are inherently interpretable because a person can read the decision path or the coefficients directly. However, modern agentic AI often leverages deep neural networks and reinforcement learning techniques, creating powerful but opaque "black box" systems.

The smarter AI gets, the less we seem to understand its decisions. This is the interpretability paradox of agentic AI.

The black box dilemma arises because advanced AI models involve numerous layers of computation and complex interactions that make their decisions difficult, and sometimes impossible, to trace in human-understandable terms. While these models excel at complex tasks, their lack of transparency creates risks around accountability, ethics, compliance, and trust.

Why explainability and governance matter more than ever

Agentic AI isn't just automating routine tasks; it's actively participating in critical decision-making processes in finance, health care, law enforcement, marketing, and autonomous vehicles. The higher the stakes, the greater the need for clear explanations of how and why AI makes particular decisions, and for strong governance frameworks to ensure compliance, fairness, and trust.

Explainability fosters trust among stakeholders—from end-users and regulators to executives. When an AI-driven recommendation influences medical diagnoses or investment strategies, stakeholders demand transparency not only to assess accuracy but also to validate fairness, compliance, and ethical considerations. Additionally, governance mechanisms ensure AI operates within structured decisioning frameworks that maintain consistency, auditability, and compliance with regulations.

In sectors like health care and finance, explainability isn't a feature; it's a fundamental requirement. And without governance, explainability alone is not enough.

Challenges of explainability in agentic AI

While explainability is critical, achieving it in agentic AI systems presents unique challenges:

- Complexity of decision processes: Agentic AI often leverages deep reinforcement learning, enabling it to learn optimal actions through interactions with complex environments. Unlike supervised models that map inputs directly to outputs, agentic AI makes sequential decisions that depend on long-term planning and context.

- Dynamic adaptation: Agentic AI continuously adapts its strategies based on new data and feedback. Its decision logic evolves, complicating explanations that must remain consistent and reliable over time.

- Governance and compliance complexity: AI decisions must be explainable and auditable to comply with industry regulations and organizational policies. Governing AI effectively requires structured decisioning platforms, which enable transparent, rule-based, and model-driven orchestration of AI actions.

The self-evolving nature of agentic AI means yesterday's explanation might not apply tomorrow, but robust governance ensures accountability remains constant.

Strategies for enhancing explainability and governance in agentic AI

To navigate these challenges, researchers and practitioners are exploring several promising approaches:

1. Model-agnostic interpretability techniques

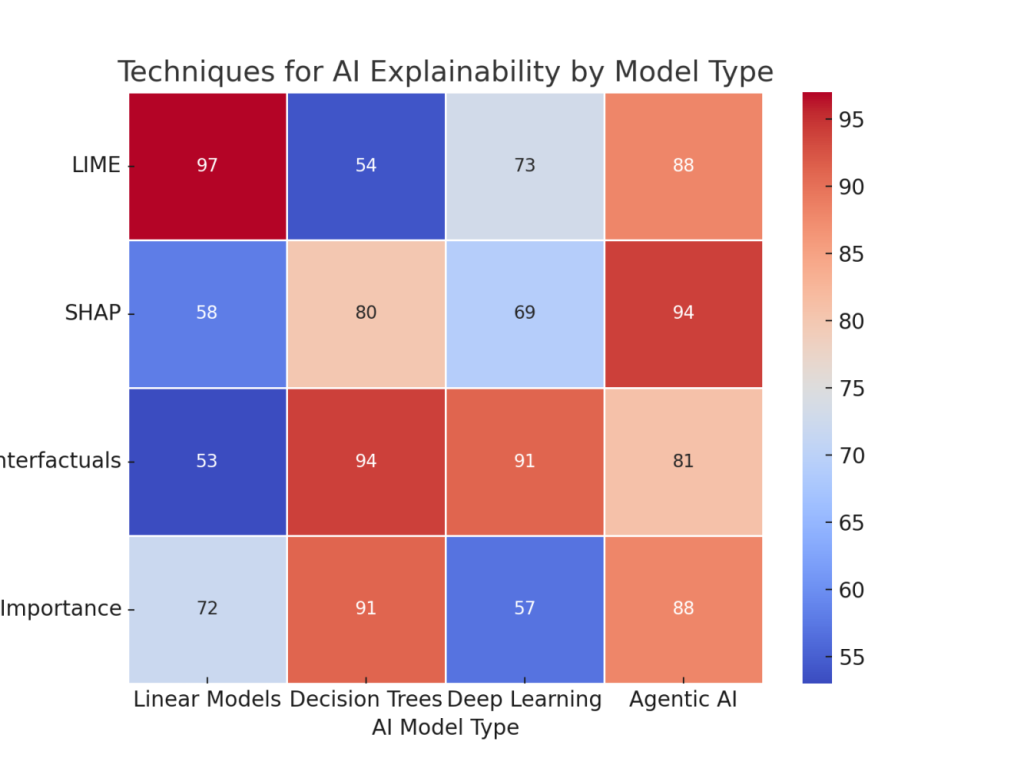

Model-agnostic methods such as Local Interpretable Model-Agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) explain individual predictions of a complex model without needing access to its internals. LIME fits a simpler, interpretable surrogate model locally around a specific prediction, while SHAP attributes the prediction to the input features using Shapley values from cooperative game theory. Although originally designed for supervised models, they can offer valuable insights when adapted for agentic AI by focusing explanations on critical decision points.
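To make the idea behind SHAP concrete, here is a minimal sketch that computes exact Shapley values for a three-feature toy model in plain Python. It does not use the shap library; `toy_model`, the all-zeros baseline, and the input values are assumptions made up for illustration, and exact enumeration like this only scales to a handful of features.

```python
from itertools import combinations
from math import factorial


def toy_model(x):
    """Hypothetical opaque scorer with an interaction between features 0 and 1."""
    return 2.0 * x[0] + 1.0 * x[1] + 0.5 * x[0] * x[1] - 1.0 * x[2]


def shapley_values(model, x, baseline):
    """Exactly attribute model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their real value; the rest stay at baseline.
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to this coalition.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


x = [1.0, 2.0, 3.0]          # illustrative input
baseline = [0.0, 0.0, 0.0]   # assumed reference point
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

For this toy input the attributions come out to 2.5, 2.5, and -3.0 (up to floating-point rounding). They sum to the difference between the model's output and its baseline output, which is the additivity property that makes such attributions straightforward to audit.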

2. Explainable reinforcement learning (XRL)

Emerging frameworks in XRL aim to embed explainability into reinforcement learning algorithms, creating intrinsic transparency. Techniques include hierarchical decision modeling and attention mechanisms that highlight critical factors in decisions, thus providing clearer insights into the agent's reasoning.

3. Counterfactual simulations

Counterfactual explanations clarify decisions by demonstrating what would have happened if different actions had been taken. Developing advanced simulation environments allows stakeholders to visualize alternative scenarios, enabling them to better understand agentic AI decision pathways.
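As a rough illustration, the sketch below replays a single decision point with a different action and compares the outcomes. The delivery-robot environment, its rewards, and the `policy` function are all hypothetical stand-ins; a real agent would need a faithful simulator of its own environment.

```python
GOAL = 3  # hypothetical target position


def step(state, action):
    """Deterministic toy environment: returns (next_state, reward, done)."""
    pos, battery = state
    if action == "move":
        pos, battery = pos + 1, battery - 2
    elif action == "charge":
        battery += 3
    else:
        raise ValueError(f"unknown action: {action}")
    if battery < 0:
        return (pos, battery), -10.0, True   # ran out of power
    if pos >= GOAL:
        return (pos, battery), 10.0, True    # delivery completed
    return (pos, battery), -1.0, False       # each step costs time


def policy(state):
    """Stand-in for the agent's learned policy."""
    _, battery = state
    return "move" if battery >= 2 else "charge"


def rollout(state, forced_first_action=None, max_steps=20):
    """Run an episode, optionally overriding the first action only."""
    total, trace = 0.0, []
    for t in range(max_steps):
        if t == 0 and forced_first_action is not None:
            action = forced_first_action
        else:
            action = policy(state)
        state, reward, done = step(state, action)
        trace.append(action)
        total += reward
        if done:
            break
    return total, trace


start = (0, 1)  # position 0, battery 1
factual_return, factual_trace = rollout(start)
counterfactual_return, counterfactual_trace = rollout(start, forced_first_action="move")
print("factual:", factual_trace, factual_return)
print("counterfactual:", counterfactual_trace, counterfactual_return)
```

In this toy setup the agent's actual choice (charge first) ends with a return of 6.0, while forcing it to move first ends the episode immediately with -10.0. That contrast is the explanation: the agent charged because moving would have drained the battery.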

4. AI governance and structured decisioning frameworks

Governance platforms enable AI systems to integrate explainability into structured, governed decision-making pipelines. These frameworks help organizations maintain transparency, compliance, and auditability while leveraging AI for high-value decisions.
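One building block of auditability is a decision log that records what was decided, by which model version, and why. The sketch below is an assumption about how such a log could look, not a description of any particular governance platform; the `AuditLog` class, the field names, and the hash-chaining scheme are all illustrative.

```python
import hashlib
import json


class AuditLog:
    """Append-only decision log; each entry stores the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Canonical JSON so the same content always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, model_version, inputs, decision, explanation):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "decision": decision,
            "explanation": explanation,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("credit-agent-v3", {"income": 52000}, "approve", "income above policy threshold")
log.record("credit-agent-v3", {"income": 18000}, "refer", "income below policy threshold")
ok_before = log.verify()

log.entries[0]["decision"] = "deny"   # simulate after-the-fact tampering
ok_after = log.verify()
print(ok_before, ok_after)
```

Chaining each entry to the previous one means an edited record no longer matches its stored hash, so an auditor can detect that the history changed. A production system would also need access control, durable storage, and timestamps, which are left out here.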

Counterfactual explanations bridge the interpretability gap by illustrating alternative outcomes clearly and compellingly. But governance ensures AI remains aligned with business and regulatory standards.

Balancing transparency, performance and governance

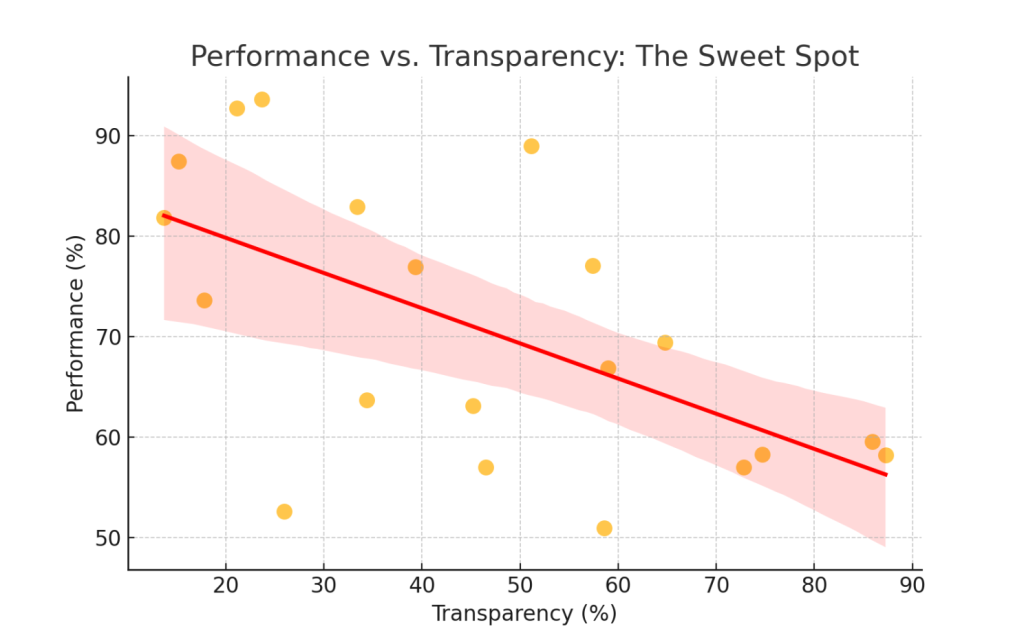

A common misconception is that interpretability inevitably compromises performance. However, explainability and performance are not always mutually exclusive. With thoughtful design, agentic AI can maintain high performance while providing sufficient transparency and strong governance mechanisms:

- Hybrid models: Combining transparent rule-based components with deep-learning-driven decision-making components provides the best of both worlds. Decisions remain interpretable without sacrificing sophisticated reasoning capabilities.

- Decision intelligence platforms: These platforms allow organizations to manage AI decision logic, so that explainability and governance are embedded into AI workflows at scale.
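The hybrid approach can be sketched as a thin layer of transparent rules wrapped around an opaque scorer. Everything here is hypothetical: the rule names, the `opaque_score` formula (a stand-in for a learned model), and the threshold are chosen only to show the shape of the pattern.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    source: str   # "rule" or "model"
    reason: str


def opaque_score(applicant):
    """Stand-in for a learned model; the formula is purely illustrative."""
    credit_part = 0.6 * applicant["credit_score"] / 850
    income_part = 0.4 * min(applicant["income"] / 100_000, 1.0)
    return credit_part + income_part


# Transparent rules are checked first and always take precedence over the model.
RULES = [
    ("minimum_age", lambda a: a["age"] < 18, "deny"),
    ("sanctions_list", lambda a: a["sanctioned"], "deny"),
]


def decide(applicant, threshold=0.6):
    for name, condition, action in RULES:
        if condition(applicant):
            return Decision(action, "rule", f"rule '{name}' fired")
    score = opaque_score(applicant)
    action = "approve" if score >= threshold else "refer_to_human"
    return Decision(action, "model", f"model score {score:.2f} vs threshold {threshold:.2f}")


minor = {"age": 17, "credit_score": 800, "income": 90_000, "sanctioned": False}
adult = {"age": 35, "credit_score": 720, "income": 65_000, "sanctioned": False}
minor_decision = decide(minor)
adult_decision = decide(adult)
print(minor_decision)
print(adult_decision)
```

Every decision carries its source and a reason, so a reviewer can tell at a glance whether a hard rule or the model drove the outcome. Low-scoring cases are routed to a human rather than denied automatically, which is one possible design choice among several.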

Real-world examples: The path forward

Several pioneering industries have begun adopting these interpretability and governance techniques successfully:

- Health care: AI-driven diagnostic systems increasingly provide clinicians with visual explanations highlighting critical biomarkers influencing predictions, enhancing trust and acceptance.

- Finance: Investment recommendation algorithms frequently use SHAP values to highlight key factors behind portfolio decisions, satisfying regulatory compliance and transparency requirements while integrating structured decisioning frameworks.

- Marketing: Autonomous AI agents guiding customer interactions transparently showcase decision factors to marketers, ensuring explainable personalization strategies within a governed AI workflow.

Final thoughts: A responsible AI future

As AI continues to evolve into increasingly autonomous and agentic forms, transparency and governance must evolve with it. Bridging the gap between performance, explainability, and governance is not merely a technical challenge but a necessity for responsible AI deployment. By embracing and advancing innovative explainability techniques alongside structured decision intelligence frameworks, organizations can confidently deploy agentic AI systems, secure in their understanding of how decisions are made and why they are trustworthy.

The future of AI isn't just intelligent; it's transparent, accountable, governed, and responsibly autonomous.
