Data-driven systems now make significant decisions across industries. While these systems can bring many benefits, they can also foster distrust by obscuring how decisions are made. Therefore, transparency within data-driven systems is critical to responsible innovation.

Transparency requires clear, explainable communication. Because transparency helps people understand how decisions are made, organizations should communicate their models' intended scope and outcomes, identify potential biases and foster user trust. Beyond fostering trust, transparency about data-driven systems may also become a legal requirement. If it does, organizations will need to be able to communicate what their data-driven systems do, why they do it and how they work.

Transparency in practice

Credit lending algorithms need the ability to explain to a user why their application was accepted or rejected. Failure to communicate the reason for the decision hurts vulnerable populations and may lead to discriminatory outcomes.

Transparent credit lending practices help organizations notice potential issues and quickly remedy concerns.

Similarly, doctors who use data-driven systems to inform clinical decisions should understand why the system recommends a specific treatment for their patients. With transparency in the healthcare process, doctors can provide their patients with the highest standards of care, and patients can make truly informed decisions about their well-being.

While the context for these two data-driven systems is significantly different, the methods used to achieve transparency are similar.

Keep reading to understand why transparency is a crucial principle of responsible innovation and how organizations can achieve it.

How organizations can achieve transparency

Organizations can use strategies like model cards, data lineage, model explainability and natural language processing (NLP) to achieve transparency. These strategies help users understand which use cases are appropriate for the model and which are more likely to be concerning. Understanding the source and context of the data used to train the model helps reveal any limitations embedded in the system and any assumptions introduced along the data chain of custody. Building transparency mechanisms also gives users an understanding of the possible adverse impacts of decisions made by the system.
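As a rough illustration of the model card idea, the sketch below records a model's intended use, out-of-scope uses, data sources and known limitations in one place. The `ModelCard` class, its field names and the example values are hypothetical and chosen for this example only; they do not follow any particular model card standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical, minimal model card; field names are illustrative only."""
    name: str
    intended_use: str
    out_of_scope_uses: List[str] = field(default_factory=list)
    training_data_sources: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def is_in_scope(self, use_case: str) -> bool:
        # Simplistic check: a use case is flagged only if it was explicitly
        # documented as out of scope.
        return use_case not in self.out_of_scope_uses

    def to_json(self) -> str:
        # Serialize the card so it can be published alongside the model.
        return json.dumps(asdict(self), indent=2)


# Example values are invented for illustration.
card = ModelCard(
    name="credit-approval-model",
    intended_use="Support loan officers reviewing consumer credit applications",
    out_of_scope_uses=["employment screening"],
    training_data_sources=["historical loan applications"],
    known_limitations=["Not validated for small-business lending"],
)

print(card.to_json())
```

Publishing a record like this next to the model gives users a single place to check whether their use case matches what the model was built for.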

To make your solutions transparent, consider asking the following questions.

- Can the responses of the data-driven tool be interpreted and explained by human experts in the organization?

- Would it be clear to people that they are interacting with a data-driven system?

- What testing satisfies expectations for audit standards (FAT-AI, FEAT, ISO, etc.)?

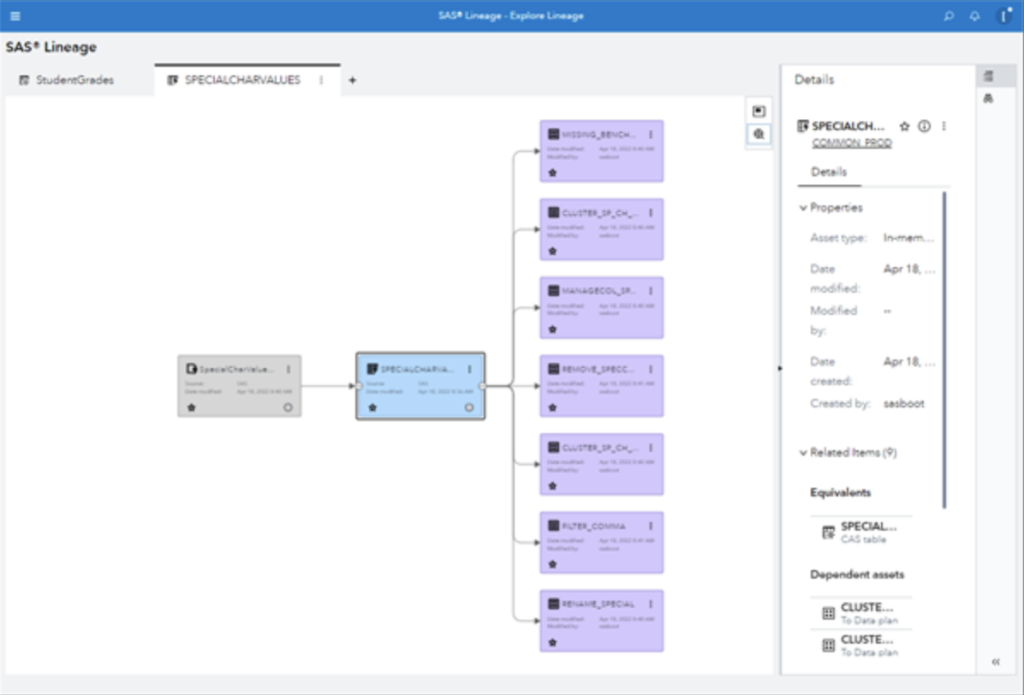

Know the source and understand the data lineage

Data lineage tracks data as it moves through an organization’s data ecosystem and is essential to achieving transparency. It provides a clear view of how an organization stores, processes and analyzes data, and it helps organizations understand the suitability of their system for different uses. By documenting the inputs, transformations and outputs, organizations can better understand which data assets are relevant and appropriate for each use. For instance, an organization may use data lineage to identify which data sources are best suited to predicting customer demand, allowing it to optimize data collection and storage processes.

By providing this transparent view of how data is stored, processed and analyzed, organizations can assess the potential impacts of data-driven systems.
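To make the idea of documenting inputs, transformations and outputs concrete, here is a minimal sketch that stores each processing step as a record and then walks backward from an output to the raw sources that feed it. The `LineageStep` record, the `upstream_sources` helper and the dataset names are all hypothetical; real lineage tools capture far more detail.

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class LineageStep:
    """One documented step: which inputs produced which output, and how."""
    output: str
    transformation: str
    inputs: Tuple[str, ...]


def upstream_sources(target: str, steps: Iterable[LineageStep]) -> Set[str]:
    """Return the raw sources (datasets with no recorded producing step)
    that ultimately feed into `target`."""
    produced_by = {step.output: step for step in steps}
    sources: Set[str] = set()
    seen: Set[str] = set()
    stack = [target]
    while stack:
        name = stack.pop()
        if name in seen:
            continue
        seen.add(name)
        step = produced_by.get(name)
        if step is None:
            # Nothing recorded as producing this dataset: treat it as a source.
            sources.add(name)
        else:
            stack.extend(step.inputs)
    return sources


# Invented example lineage for a customer demand forecast.
steps = [
    LineageStep("cleaned_sales", "remove duplicates", ("raw_sales",)),
    LineageStep("demand_features", "join and aggregate", ("cleaned_sales", "weather_feed")),
    LineageStep("demand_forecast", "train and score model", ("demand_features",)),
]

print(sorted(upstream_sources("demand_forecast", steps)))
```

Even a simple record like this lets a reviewer ask which original data sources influence a given output, which is the starting point for judging whether those sources are appropriate.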

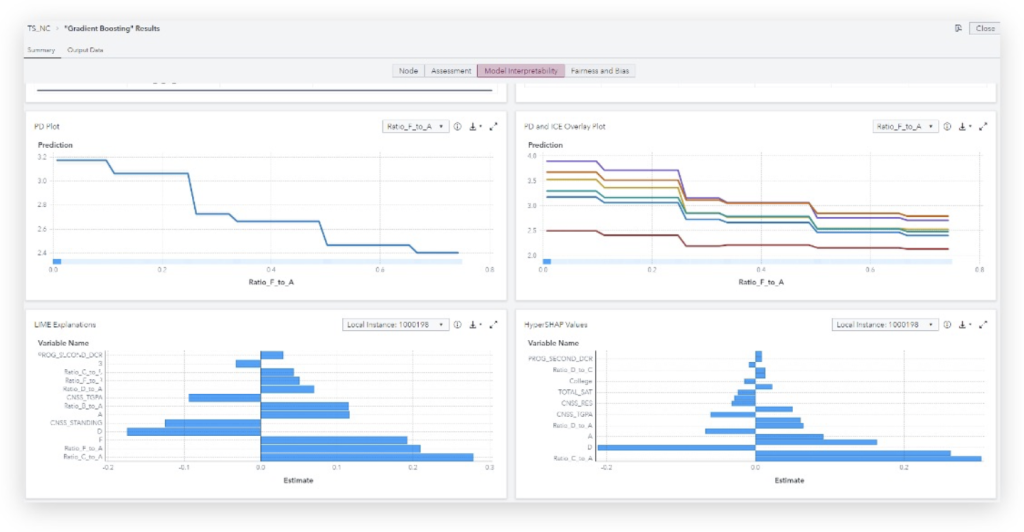

Why model explainability is vital to transparency

Another important part of transparency is model explainability, or the need to disclose the basis for systems and decisions. Model explainability helps users interpret the results of AI models with techniques like partial dependence (PD) plots, individual conditional expectation (ICE) plots, local interpretable model-agnostic explanations (LIME), and HyperSHAP. These (and other) techniques show users how a decision was made within the system.

Explainability helps us understand the why behind a decision taken by the model. For example, if you use a navigation app to drive to a restaurant, model explainability would enable the app to explain why it recommended route one on the freeway instead of route two on the surface streets. Or what if a simple image classification algorithm classifies a husky as a wolf? Model explainability can reveal that the model learned to treat snow in the background as a sign that the image shows a wolf.
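The PD and ICE techniques mentioned above can be sketched in a few lines. An ICE curve varies one feature for a single record while holding its other values fixed, and a PD curve is the point-by-point average of those ICE curves. The scoring function and applicant data below are invented for illustration and are not a real credit model.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]


def toy_credit_score(row: Row) -> float:
    """Hypothetical scoring rule, invented for this example only."""
    return 0.5 * row["income"] / 1000 - 2.0 * row["late_payments"]


def ice_curves(model: Callable[[Row], float], rows: Sequence[Row],
               feature: str, grid: Sequence[float]) -> List[List[float]]:
    """One curve per row: vary `feature` over `grid`, keep other values fixed."""
    return [[model({**row, feature: value}) for value in grid] for row in rows]


def partial_dependence(model: Callable[[Row], float], rows: Sequence[Row],
                       feature: str, grid: Sequence[float]) -> List[float]:
    """Average the ICE curves point by point to get the PD curve."""
    curves = ice_curves(model, rows, feature, grid)
    return [sum(curve[i] for curve in curves) / len(curves)
            for i in range(len(grid))]


# Invented applicants and grid of values for the feature being examined.
applicants = [
    {"income": 30000, "late_payments": 0},
    {"income": 50000, "late_payments": 1},
    {"income": 70000, "late_payments": 3},
]
grid = [0, 1, 2]

curves = ice_curves(toy_credit_score, applicants, "late_payments", grid)
pd_curve = partial_dependence(toy_credit_score, applicants, "late_payments", grid)
print(pd_curve)
```

Plotting `pd_curve` against `grid` gives a PD plot, and plotting each entry of `curves` gives the matching ICE plot. In this toy example the average score falls as late payments rise, which is the kind of relationship a reviewer would want to see and question.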

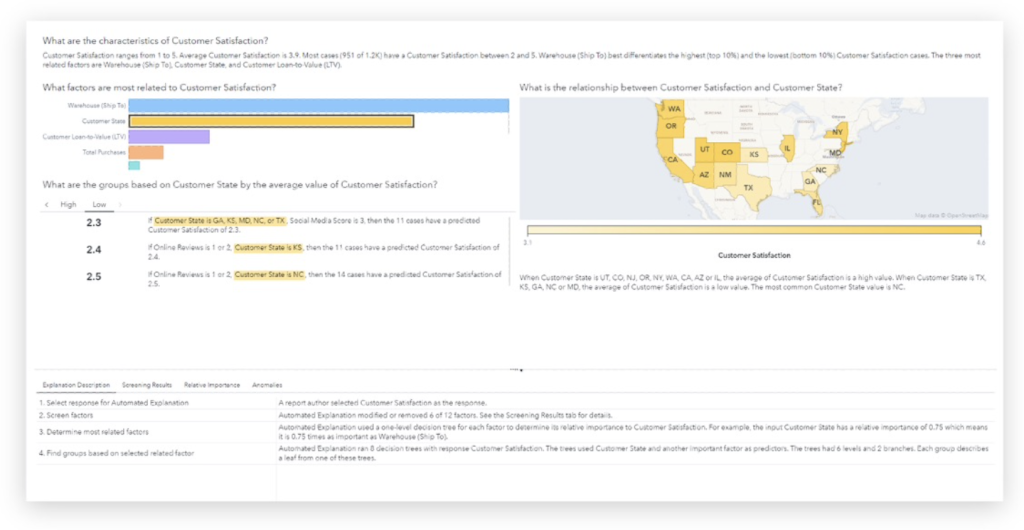

Narrating complex insights using NLP

The complexity of models can make it challenging for those involved to understand how decisions are made and what factors are considered.

To address this, organizations can use NLP as a powerful tool to help explain the models. NLP can generate text explanations for data-driven decisions as the decisions are made. Organizations should consider using these natural language insights to describe their models in simple business language that any audience can understand. This means that stakeholders and impacted community members can interpret and understand the results of these systems without needing a background in data science or computer programming.

For example, imagine a healthcare organization using a data-driven system to determine which treatments to recommend for patients with a particular condition. NLP could generate text explanations outlining the factors considered in the recommendation, such as patient medical history, the latest research on the condition and even the success rate of different treatments. This would enable patients to understand why a particular treatment was recommended and be confident in the decision. By making this decision-making process more visible, organizations can identify any biases or assumptions influencing outcomes and work to address them.
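A very simple way to picture generated text explanations is a template that turns the factors behind a recommendation into a plain-language sentence. The sketch below assumes the system already exposes a signed weight for each factor. The `explain_recommendation` function, the factor names and the weights are all hypothetical, and production systems would typically rely on more capable language generation than a fixed template.

```python
from typing import Dict


def explain_recommendation(treatment: str, contributions: Dict[str, float],
                           top_n: int = 2) -> str:
    """Describe the `top_n` most influential factors in plain language.

    `contributions` maps a factor name to a signed weight: positive values
    supported the recommendation, negative values weighed against it.
    """
    ranked = sorted(contributions.items(),
                    key=lambda item: abs(item[1]), reverse=True)[:top_n]
    parts = []
    for name, weight in ranked:
        direction = "supported" if weight > 0 else "weighed against"
        parts.append(f"{name} {direction} this recommendation")
    return f"{treatment} was recommended. Main factors: " + "; ".join(parts) + "."


# Invented factor weights for illustration.
factors = {
    "patient medical history": 0.40,
    "recent research findings": 0.25,
    "reported side effects": -0.10,
}

summary = explain_recommendation("Treatment A", factors)
full_summary = explain_recommendation("Treatment A", factors, top_n=3)
print(summary)
```

Limiting the explanation to the most influential factors keeps the text short enough for a patient to read, while the full ranking remains available for clinicians or auditors who need more detail.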

Ultimately, when it comes to developing data-driven systems, being transparent is critical to responsible innovation. And to promote transparency, communication is key. Organizations can ensure their data-driven decision systems are transparent by implementing measures such as model cards, model explainability, data lineage and natural language insights. By doing so, organizations can foster trust in these systems and ensure they are used responsibly and effectively.


Vrushali Sawant and Kristi Boyd made contributions to this article