By now you’ve seen the headlines and the hype proclaiming data as the new oil. The well-meaning intent of these proclamations is to cast data in the role of primary economic driver for the 21st century, just as oil was for the 20th century. As analogies go, it’s not too bad, but it doesn't really hit the mark.

If we were to stick with the oil/data analogy, data would not be a replacement for oil, but a co-equal partner. Allow me to offer an alternative analogy: Life.

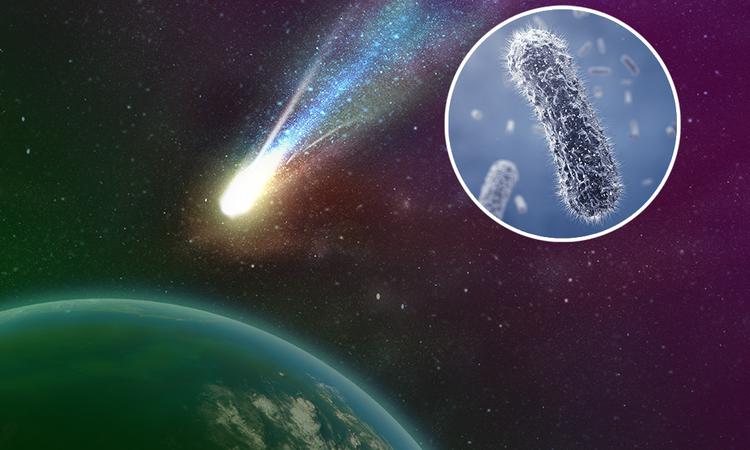

In an attempt to assess whether life could have evolved elsewhere in the galaxy and migrated to Earth through interstellar space, a theory known as panspermia, scientists have chilled simple, single-celled organisms down to 3 degrees above absolute zero – the temperature of empty interstellar space. At 3 kelvin, it’s not just that the basic life processes of reproduction, metabolism and respiration have stopped – for all intents and purposes, all motion, even down to atomic-level vibrations, has ceased as well. ‘Dead’ is not quite the right word for such a state, and neither is ‘bacteria’ – ‘frozen crystal’ might be a more appropriate description.

Or is it?

Amazingly, scientists found that these bacteria can resume normal functioning once they are warmed and revived. This has led some of them to call for a redefinition of what it means to be a living thing, one simpler than the prevailing definition, which involves reproduction, metabolism and evolution. Their revised definition?

Life = Structure + Energy

At 3 degrees above absolute zero, these organisms had lost essentially all of their energy but retained their structure. When the energy was restored, that persistent structure, and the information it retained, allowed them to resume their previous, living behavior.

What has this got to do with data? Or with oil?

Energy comes in many forms – chemical, mechanical, electrical, nuclear, gravitational. Oil is energy encoded as hydrocarbon chemical bonds.

Likewise, information comes in many forms – system states, rates of change, processes or messages. Data is information encoded as binary sequences (this assumes today’s prevalent digital technology – data could also be encoded as qubits, as electromagnetic waves, or as letters on a page).

Oil is to energy as data is to information (or structure).

The first thing to note is that it’s the information that’s the important entity – the data is just its encoding. The second noteworthy point is that, when it comes time to decode the data back into actionable information, you can only get back the information which was properly encoded. No amount of back-end processing can recover information lost in the encoding stage. Importantly, this includes the metadata about the data – its units and attributes of measure, time, location, product, amount, customer, device, species, material, and on and on and on ...
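As a minimal sketch of that point, consider a temperature reading stored with and without its unit of measure. The `Reading` class and `to_celsius` function are hypothetical names invented for this illustration; the only claim is that a value whose unit was never encoded cannot be reliably decoded later.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reading:
    """A measured value plus the metadata needed to interpret it."""
    value: float
    unit: Optional[str] = None  # "C", "F" or "K"; None means it was never recorded


def to_celsius(reading: Reading) -> float:
    """Decode a reading into degrees Celsius, if the unit was encoded."""
    if reading.unit == "C":
        return reading.value
    if reading.unit == "F":
        return (reading.value - 32.0) * 5.0 / 9.0
    if reading.unit == "K":
        return reading.value - 273.15
    # Without the unit, no downstream processing can recover the meaning.
    raise ValueError("unit was never encoded; the reading cannot be decoded")


with_metadata = Reading(value=98.6, unit="F")
bare_number = Reading(value=98.6)

print(round(to_celsius(with_metadata), 1))

try:
    to_celsius(bare_number)
except ValueError as err:
    print(f"lost at encoding: {err}")
```

The bare number is still stored perfectly well as data; what is missing is the information needed to act on it.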

Put another way: Data quality matters – life depends on it.

Four primary processes can occur while information is in the data stage: Transmission, storage, transformation and processing. Then, to create value from this data, it has to be decoded back into actionable information. That’s where the fun begins, with techniques ranging from simple trend analysis and ANOVA to more complex recurrent neural networks and reinforcement learning.

Sometimes this encoding, transmission, processing and decoding happens all within a single device – think of a thermostat with its temperature sensor and feedback control mechanism to the HVAC system. But more often, multiple sources and streams of data are integrated and recombined to create information and value that wasn't present in any of the individual sources alone.

There are five basic uses of this transformed, processed, integrated and decoded information:

- Reporting

- Root cause analysis

- Decision support

- Operations

- Exploration, discovery and insight

REPORTING gives you the who, what, when and where. It’s not the decision itself, but it suggests where decisions need to be made. Think of the green/yellow/red traffic signals and gauges on a typical BI dashboard. A thousand items being monitored, but perhaps only a couple dozen flagged for attention, review and action.
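The traffic-signal idea can be sketched in a few lines. The metric names and thresholds below are made up for illustration, and the sketch assumes that a higher value is always worse; a real dashboard would need per-metric rules.

```python
from typing import Dict, Tuple


def traffic_light(value: float, warn: float, critical: float) -> str:
    """Map a monitored value to green/yellow/red (assumes higher is worse)."""
    if value >= critical:
        return "red"
    if value >= warn:
        return "yellow"
    return "green"


# Hypothetical metrics and (warn, critical) thresholds, for illustration only.
metrics: Dict[str, float] = {
    "late_orders_pct": 1.2,
    "returns_pct": 6.5,
    "stockouts": 14,
}
thresholds: Dict[str, Tuple[float, float]] = {
    "late_orders_pct": (2.0, 5.0),
    "returns_pct": (5.0, 10.0),
    "stockouts": (5, 10),
}

statuses = {name: traffic_light(metrics[name], *thresholds[name]) for name in metrics}

# Reporting does not decide anything; it narrows attention to the flagged items.
flagged = {name: status for name, status in statuses.items() if status != "green"}
print(flagged)
```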

ROOT CAUSE analysis addresses the why questions sparked by the what/where/when information originating from basic reporting.

DECISION SUPPORT is what we typically think of when we think of information and analysis – the analytical divas that get top billing: Forecasting, optimization, prediction, plus a supporting cast of a hundred more. Once reporting and root cause analysis have identified where decisions need to be made, decision support provides viable options and their associated risks, sometimes along with recommended or optimal choices.

OPERATIONS is a hybrid user of reporting and decision support (often automated) in a feedback/control mechanism similar to the thermostat. Your car’s ECM monitors temperature, RPMs and fuel/oxygen ratios to optimize performance, emissions and consumption. Self-driving vehicles use GPS, radar and visual cues to keep the car safely in its lane. Or a human might be in the loop, adjusting the settings on a milling machine or a fermentation process, or landing a spacecraft on the Moon.
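The thermostat-style feedback loop can be sketched as a simple on/off controller with a deadband. This is only an illustration under stated assumptions: the function names, the 0.5 degree deadband and the toy room model (warming 0.3 degrees per step with the heater on, cooling 0.2 degrees per step with it off) are all invented here and do not describe any particular device.

```python
from typing import List


def thermostat_step(temp_c: float, setpoint_c: float, heater_on: bool,
                    deadband_c: float = 0.5) -> bool:
    """Return the new heater state for one control step.

    The deadband keeps the heater from switching rapidly around the setpoint.
    """
    if temp_c < setpoint_c - deadband_c:
        return True   # too cold: turn (or keep) the heater on
    if temp_c > setpoint_c + deadband_c:
        return False  # too warm: turn (or keep) the heater off
    return heater_on  # inside the deadband: leave the state unchanged


def simulate(start_temp_c: float, setpoint_c: float, steps: int) -> List[float]:
    """Run the sense -> decide -> act loop against a toy room model."""
    temp = start_temp_c
    heater_on = False
    history = []
    for _ in range(steps):
        heater_on = thermostat_step(temp, setpoint_c, heater_on)  # decide
        temp += 0.3 if heater_on else -0.2                        # act (toy physics)
        history.append(round(temp, 1))                            # sense/report
    return history


print(simulate(start_temp_c=18.0, setpoint_c=20.0, steps=20))
```

Each pass through the loop is a miniature version of the whole cycle: a measurement is encoded, processed into a decision, and acted upon.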

EXPLORATION involves insights and answers to questions yet unasked by the above four domains. The unknown-unknowns. Innovation. Tell me something I don’t know.

Overall, the process looks like this: Information → Encoding → Data → Transmit, Store, Transform, Process → Decode/Analyze → Information → Execution → Value

Excluding those last two components, execution and value, there’s a name for everything from the first 'information' bookend to the second, inclusive – data management. If you’re looking to understand the goal and strategy of a proper data management effort, it is to manage the entire end-to-end process so as to maximize the value of the information being decoded, analyzed and acted upon at the back end. In addition to data modelling, that effort includes data quality, integration and governance.

Ensuring that your data management process leads to tangible value means starting from the information users and working backwards towards the sources. It means knowing how that information is going to be used and for what purposes – what decisions will be made based on which data sources and analyses. Each of the five basic uses will require different data sources, data types, latency, quality, integration and formats, all of which need to be addressed as part of the overall data management process.

No, data is not oil. It’s not the energy of the process, not the fuel, and it doesn’t get consumed. Instead, it's the complement of oil; it’s the encoded information of your organization, a distinct yet equally critical element. Manage it with the same intensity and sense of purpose with which you manage your energy sources – people, materiel and capital. Combined with that energy, it will give life to your enterprise and your ideas; without it, your enterprise, like life without structure, dies.