I hate my title, but I’m going to stick with it.

In spite of it, I hope I can encourage you to pay attention to the current automation revolution and actively contribute to augmentation-fueled innovation. If everyone understands those terms and actively tries to stay on top of how they evolve, I think we’ll be all right in terms of the Elon Musk-Mark Zuckerberg AI doomsday debate.

Eating up artificial intelligence

Before I get into augmentation, allow me to (ahem) set the table...with some breakfast insights.

Next to my oatmeal this morning, my newspaper (the Belgian and quite mainstream De Standaard) was positively dripping with data and artificial intelligence:

- The cover story title was (translated), "Robots Create New Jobs".

- Page two: "Tomorrowland screens attendees’ address data from the last 6 years for security and wrestles with data privacy considerations."

- Another article describes the analysis of (yet another batch of) leaked emails from a prominent politician.

- Another about 1300 Belgians not receiving the correct order from webshops.

- A large infographic about a huge iceberg about to break off from Antarctica and how global warming is negatively impacting air travel.

- In the culture section: an article about data scientist Seth Stephens-Davidowitz’s book, Everybody Lies: What the Internet Can Tell Us About Who We Really Are.

- Another infographic about the turnover for Durex condoms in Belgium over the last 7 years.

That’s a lot of focus on data, analysis, algorithms and increased automation.

Now what strikes me most about all these articles is the complete lack of methodology considerations. Not a single mention of HOW computers, robots and systems learn, or how we learn from them. Artificial intelligence is taken as a given: computers are simply getting smarter and smarter.

Machine learning viewed as commodity

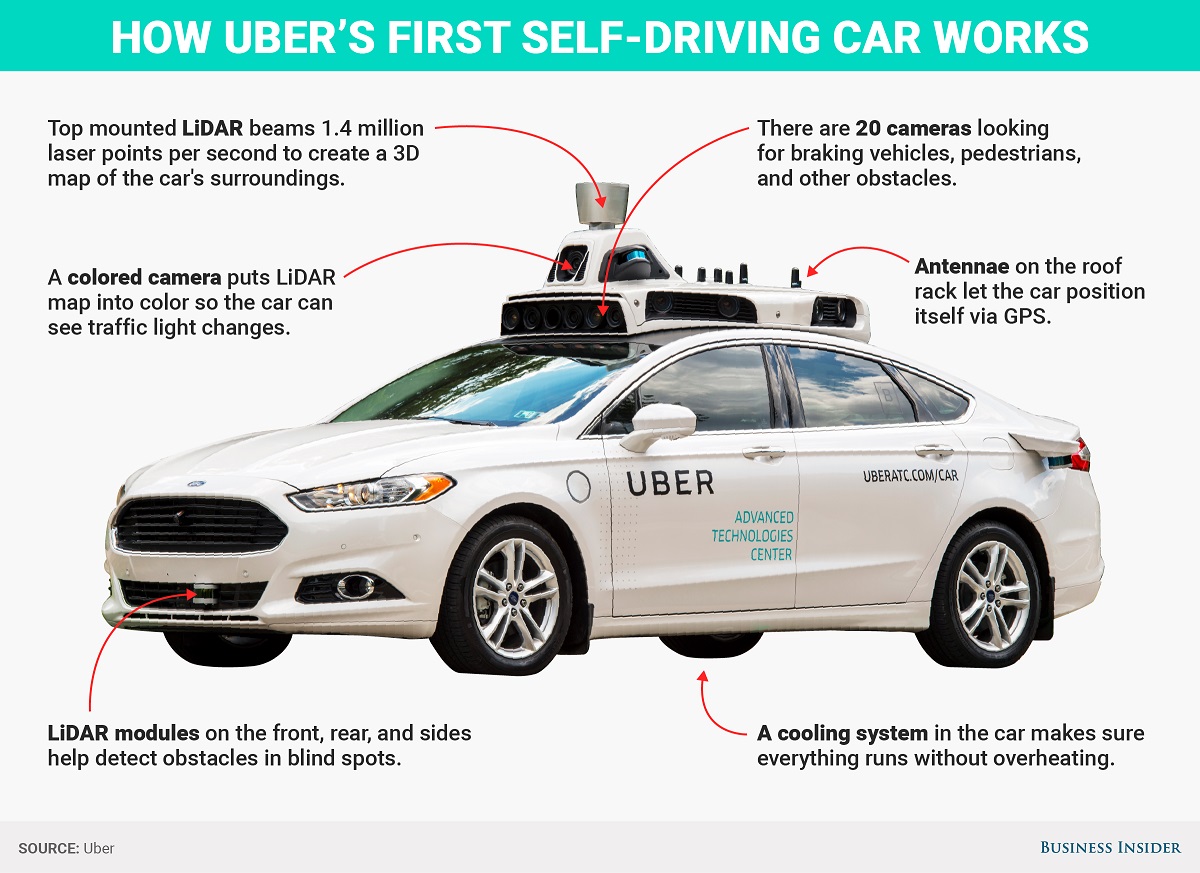

In this slide, Uber profiles the technologies involved in getting the driverless car up to speed (I deeply regret that joke).

Now that’s a genuinely impressive list of technologies for collecting data and sensing the surrounding situations!

But machine learning is not mentioned at all:

- Nothing about the convolutional neural networks required for object recognition (e.g., a detour sign).

- Nothing about the recursive learning algorithms for making the choice of what to do next (brake slightly), and next (put on the turn signal), and next (give passengers a trajectory update).

In fact, even somewhat specialized mainstream articles very rarely discuss how algorithms work and combine with data to make optimized, critical decisions.

Why is this? Is it faith in science? Simply mainstream lethargy, or an extension of some kind of societal statistical trauma? Or a combination of these?

Artificial intelligence is a concept that’s been around for a while and has evolved significantly with the technological possibilities. Take the humble computer mouse. Invented in 1964 (!!!) by Douglas Engelbart, it was so cutting edge at the time that most folks initially questioned its utility. We totally take the mouse for granted today and really don’t care how the thing works, just that it does.

But the big difference I see is that a mouse won’t make decisions without a human clicker. That’s a far cry from an Uber car deciding whether to stay in the lane and kill 3 people or go onto the sidewalk and kill 1.

Augmentation: Keeping artificial intelligence 'human relevant'

Staying on top of what algorithms and machine learning are doing in society is crucial to our survival, and not only from a watchdog perspective. It will also enable us to continue to evolve and bring added value on top of all the automated processes which computers and robots can efficiently take over. That added value has been described by Thomas Davenport as augmentation. The idea is that even in the face of a new industrial revolution where artificial intelligence and robots will replace many human jobs, humans will continue to provide artistic, creative and empathic added and complementary value. Technology will augment human abilities, not overcome and replace them.

Part of the societal responsibility rests with us as practitioners. Data scientists have a moral obligation to make methods transparent and digestible to as many stakeholders as possible. Aside from the impending GDPR legislation, which requires that all organizations make their use of personal data transparent to the persons involved, data scientists should actively look to make their methodology fully digestible to all stakeholders (both within and external to the organization). That way everyone can participate in the greater ethical debates about whether the algorithm applications are indeed benefiting the greater good.

A few examples where we all need to pay attention:

- Credit scoring algorithms – not excessively excluding certain socio-demographic groups in a cold recitation of, "computer says no."

- Medical recommendations – making sure that next-best steps truly represent the most inclusive research and overemphasize neither overprescription (potentially leading to iatrogenics) nor hands-off therapies.

- Music or film suggestions – no disproportionate commercial influence.

This needs to be a two-way dialogue, but the first responsibility lies with the data scientists who need to keep the door open on methodological transparency.
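To make the credit scoring example a bit more tangible, here is a minimal sketch of the kind of check a data scientist could share with non-technical stakeholders. It compares approval rates across groups and flags any group whose rate falls well below that of the best-treated group. Everything in it is an assumption for illustration: the group labels, the toy decisions, the function names and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb sometimes used for adverse impact). It is not a description of how any real scoring system works.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False. The groups are hypothetical labels.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact(decisions, threshold=0.8):
    """Compare each group's approval rate with the highest group rate.

    A group is flagged when its rate divided by the best rate is below
    `threshold`. The 0.8 default is an assumed rule of thumb, not a
    legal or statistical guarantee.
    """
    rates = approval_rates(decisions)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[group] = {
            "rate": rate,
            "ratio": ratio,
            "flagged": ratio < threshold,
        }
    return report


# Toy, made-up decisions: group A gets 8 of 10 approved, group B 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

report = disparate_impact(decisions)
for group, row in sorted(report.items()):
    status = "needs review" if row["flagged"] else "ok"
    print(f"group {group}: approval rate {row['rate']:.2f}, "
          f"ratio to best {row['ratio']:.2f} ({status})")
```

A flag here is only a prompt for the dialogue described above. It says nothing about why the rates differ, which is exactly the question stakeholders should then be able to ask.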

Check out my recent presentation at the SAS Netherlands user forum for more on the topic, and read more about how artificial intelligence works.
