The DO Loop

Statistical programming in SAS with an emphasis on SAS/IML programs

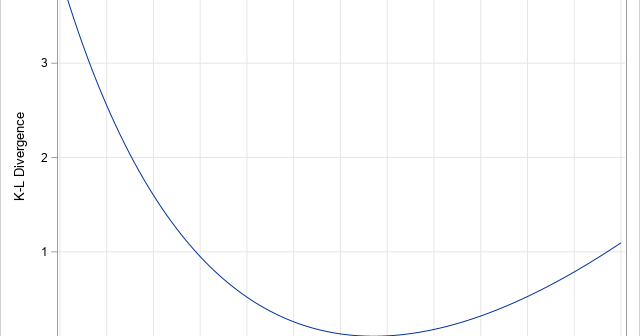

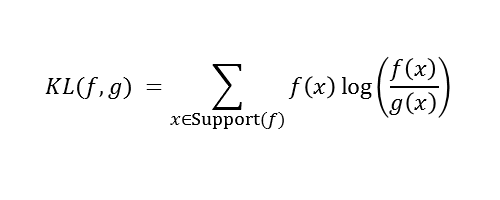

The Kullback–Leibler divergence is a measure of dissimilarity between two probability distributions. An application in machine learning is to measure how distributions in a parametric family differ from a data distribution. This article shows that if you minimize the Kullback–Leibler divergence over a set of parameters, you can find a

If you have been studying machine learning or mathematical statistics, you might have heard about the Kullback–Leibler divergence, a measure of dissimilarity between two probability distributions. It measures how much one distribution differs from a reference distribution. This article explains the Kullback–Leibler divergence and shows

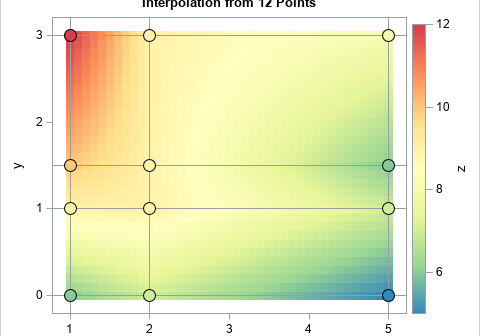

This article shows how to perform two-dimensional bilinear interpolation in SAS by using a SAS/IML function. The method assumes that you have observed the values of a response variable on a regular grid of locations. A previous article showed how to interpolate inside one rectangular cell. When you have a