The DO Loop

Statistical programming in SAS with an emphasis on SAS/IML programs

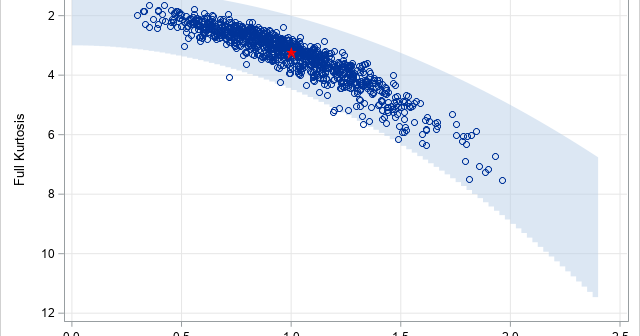

The moment-ratio diagram is a useful tool for choosing a distribution to model a sample of univariate data. As I show in my book (Simulating Data with SAS, Wicklin, 2013), you first plot the skewness and kurtosis of the sample on the moment-ratio diagram to see what common

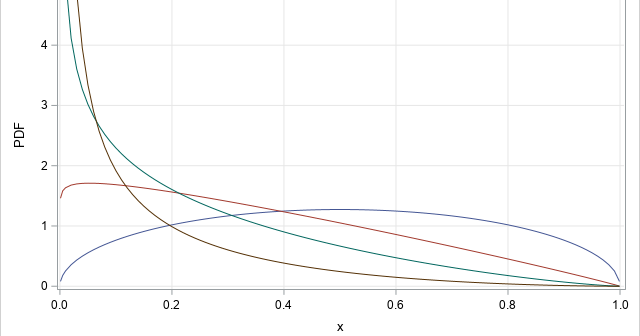

A SAS programmer wanted to simulate samples from a family of Beta(a,b) distributions for a simulation study. (Recall that a Beta random variable is bounded with values in the range [0,1].) She wanted to choose the parameters such that the skewness and kurtosis of the distributions varied over a range of

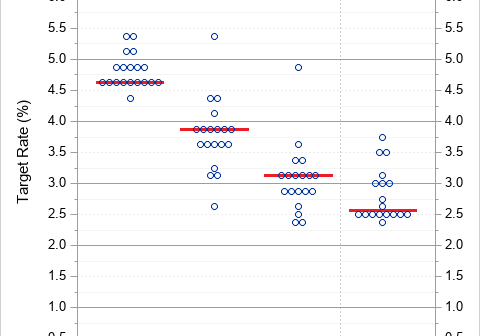

A dot plot is a standard statistical graphic that displays a statistic (often a mean) and the uncertainty of the statistic for one or more groups. Statisticians and data scientists use it in the analysis of group data. In late 2023, I started noticing headlines about "dot plots" in the

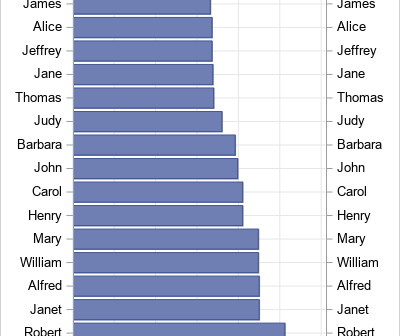

Recently, I saw a scatter plot that displayed the ticks, values, and labels for a vertical axis on the right side of a graph. In the SGPLOT procedure in SAS, you can use the Y2AXIS option to move an axis to the right side of a graph. Similarly, you can

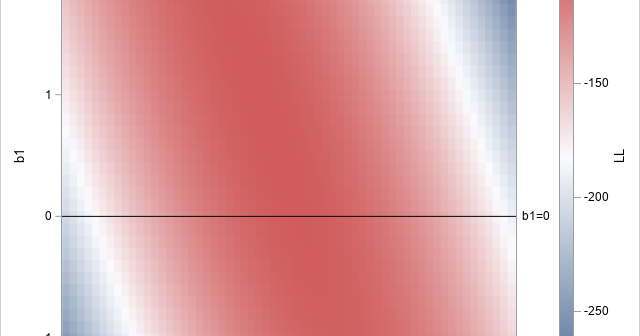

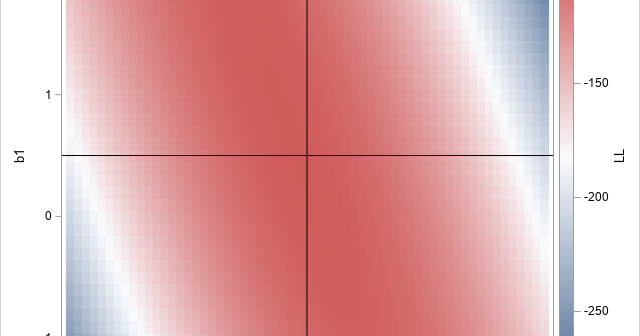

A recent article describes how to estimate coefficients in a simple linear regression model by using maximum likelihood estimation (MLE). One of the nice properties of an MLE formulation is that you can compare a large model with a nested submodel in a natural way. For example, if you can

A statistical analyst used the GENMOD procedure in SAS to fit a linear regression model. He noticed that the table of parameter estimates has an extra row (labeled "Scale") that is not a regression coefficient. The "scale parameter" is not part of the parameter estimates table produced by PROC REG