[Editor's note: this post was co-authored by Ali Dixon and Mary Osborne]

Corpus analysis is widely used by data scientists because it builds an understanding of a document collection and yields insights into its text. It’s an apt methodology to consider as we approach Charles Dickens’ 210th birthday on February 7th, because passages from his works have so often made their way into popular culture. Take this excerpt from page 4 of A Tale of Two Cities: “It was the best of times, it was the worst of times, it was the age of wisdom …” The sentence runs a full 119 words before it finally ends. Have you ever wondered why he used such long sentences? As we explore what corpus analysis is, you will learn more about this technique and two key ways to use it.
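If you want to check that word count yourself, here is a minimal Python sketch that counts word tokens with a regular expression. It assumes the Project Gutenberg transcription of the opening sentence (which writes the dash as "--") and our own definition of a word as a run of letters, optionally joined by apostrophes; other tokenizers may treat dashes, hyphens, or contractions differently and arrive at a different number.

```python
import re

# Opening sentence of A Tale of Two Cities, with "--" for the dash
# as in the Project Gutenberg transcription.
OPENING = (
    "It was the best of times, it was the worst of times, it was the age of "
    "wisdom, it was the age of foolishness, it was the epoch of belief, it was "
    "the epoch of incredulity, it was the season of Light, it was the season of "
    "Darkness, it was the spring of hope, it was the winter of despair, we had "
    "everything before us, we had nothing before us, we were all going direct "
    "to Heaven, we were all going direct the other way--in short, the period "
    "was so far like the present period, that some of its noisiest authorities "
    "insisted on its being received, for good or for evil, in the superlative "
    "degree of comparison only."
)

# A word is a run of letters, optionally with internal apostrophes ("don't").
WORD_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)*")


def count_words(text: str) -> int:
    """Count word tokens; punctuation and dashes act only as separators."""
    return len(WORD_RE.findall(text))


print(count_words(OPENING))  # prints 119
```

Because "--" is not matched by the pattern, "way--in" is split into two words rather than counted as one.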

Corpus analysis applies Natural Language Processing (NLP) to a corpus, or collection of documents. Before you engage in additional analysis, it can provide insights about Dickens’ work, or any document collection, by summarizing the corpus structure in easily accessible output statistics. NLP works with unstructured text data, unlike structured information that fits neatly into rows and columns. Data scientists use NLP for tasks such as data cleansing, separating out noise, sampling effectively, preparing data as input for further models (rules-based and machine learning) and strategizing modeling approaches.

Two ways data scientists can leverage corpus analysis

- Generate statistics about the text to better understand the content and structure of your document collection. Examples of use cases where data scientists use NLP include viewing and understanding insights about:

  - Information complexity

  - Vocabulary diversity

  - Information density

  - Comparison metrics against a predetermined reference corpus

- Further analyze or visualize these statistics (using the counts) in reports created in SAS Visual Analytics.
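To make those statistics concrete, here is a small plain-Python sketch that computes common textbook proxies for them: average sentence length for information complexity, type-token ratio (unique words divided by total words) for vocabulary diversity, and lexical density (share of non-stop-words) for information density. These definitions, the tiny stop-word list, and the naive sentence splitter are our own illustrative assumptions; they are not necessarily how SAS Visual Text Analytics defines or computes these measures.

```python
import re
from dataclasses import dataclass

WORD_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)*")
# Naive sentence boundary: a run of . ! ? followed by whitespace or end of text.
SENTENCE_END_RE = re.compile(r"[.!?]+(?=\s|$)")

# Tiny, illustrative stop-word list (an assumption; real lists are much longer).
STOPWORDS = {
    "a", "an", "and", "are", "as", "at", "be", "by", "for", "from", "had",
    "in", "is", "it", "its", "of", "on", "or", "that", "the", "to", "was",
    "we", "were", "with",
}


@dataclass
class TextStats:
    tokens: int
    sentences: int
    avg_sentence_length: float  # complexity proxy: words per sentence
    type_token_ratio: float     # vocabulary-diversity proxy
    lexical_density: float      # information-density proxy


def profile(text: str) -> TextStats:
    words = [w.lower() for w in WORD_RE.findall(text)]
    # Keep only split pieces that contain at least one word.
    sentences = [s for s in SENTENCE_END_RE.split(text) if WORD_RE.search(s)]
    n_words, n_sent = len(words), len(sentences)
    content = [w for w in words if w not in STOPWORDS]
    return TextStats(
        tokens=n_words,
        sentences=n_sent,
        avg_sentence_length=n_words / n_sent if n_sent else 0.0,
        type_token_ratio=len(set(words)) / n_words if n_words else 0.0,
        lexical_density=len(content) / n_words if n_words else 0.0,
    )


if __name__ == "__main__":
    print(profile("The cat sat on the mat. The dog sat too!"))
```

Per-document records like these are the kind of counts you could then aggregate, chart, or compare across documents in a reporting tool.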

To begin corpus analysis in SAS Visual Text Analytics, you profile the data. Using a CAS action called Text profile, you can generate descriptive statistics that are relevant for understanding text data. This analysis informs how models are built, tested, and used on specific data sets. The action can also characterize a data set, identify differences between data sets, identify errors or noise, and compare a data set to a reference data set.
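One way to picture comparing a data set to a reference data set is the plain-Python sketch below. It does not use or reproduce the Text profile action; the function names, the out-of-vocabulary (OOV) rate, and the add-one-smoothed frequency ratio are our own illustrative choices. Words that never occur in the reference are a cheap signal for possible noise (typos, markup debris) or for domain-specific vocabulary.

```python
import re
from collections import Counter

WORD_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)*")


def term_counts(docs: list[str]) -> Counter:
    """Lowercased word counts across a list of documents."""
    counts = Counter()
    for doc in docs:
        counts.update(w.lower() for w in WORD_RE.findall(doc))
    return counts


def compare_to_reference(target: list[str], reference: list[str], top_n: int = 5):
    """Return (OOV rate, sorted OOV words, most over-represented target words)."""
    tgt, ref = term_counts(target), term_counts(reference)
    tgt_total, ref_total = sum(tgt.values()), sum(ref.values())

    # Share of target tokens never seen in the reference.
    oov = {w: c for w, c in tgt.items() if w not in ref}
    oov_rate = sum(oov.values()) / tgt_total if tgt_total else 0.0

    # Relative-frequency ratio with add-one smoothing; > 1 means the word
    # is more frequent in the target than in the reference.
    vocab_size = len(set(tgt) | set(ref))

    def ratio(w: str) -> float:
        p_tgt = (tgt[w] + 1) / (tgt_total + vocab_size)
        p_ref = (ref[w] + 1) / (ref_total + vocab_size)
        return p_tgt / p_ref

    over = sorted(tgt, key=ratio, reverse=True)[:top_n]
    return oov_rate, sorted(oov), over


if __name__ == "__main__":
    print(compare_to_reference(["The quick brwon fox"],
                               ["The quick brown fox jumps"]))
```

In this toy run the misspelling "brwon" is the only OOV word, so it surfaces both in the OOV list and at the top of the over-represented terms.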

Tokens, which can be words, morphemes, or characters, are a key element of corpus analysis. Tokenization splits a character sequence, such as a sentence or document, into these useful units.
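As a minimal illustration, the Python sketch below tokenizes the same text at the word level and at the character level. The regular expression is an assumption of ours, not a standard definition of a word. Morpheme-level tokenization is left out on purpose: splitting "times" into "time" + "s" properly requires a morphological analyzer, and a naive suffix-stripping rule would only approximate it.

```python
import re


def word_tokens(text: str) -> list[str]:
    # Words: letter runs with optional internal apostrophes; punctuation is dropped.
    return re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)*", text)


def char_tokens(text: str) -> list[str]:
    # Characters: every non-whitespace character becomes its own token.
    return [ch for ch in text if not ch.isspace()]


sentence = "It was the best of times, it was the worst of times."
print(word_tokens(sentence))
# ['It', 'was', 'the', 'best', 'of', 'times', 'it', 'was', 'the', 'worst', 'of', 'times']
print(len(char_tokens("best of times")))  # 11
```

The choice of unit changes every downstream count: the same phrase yields 3 word tokens but 11 character tokens.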

Was Dickens paid per word?

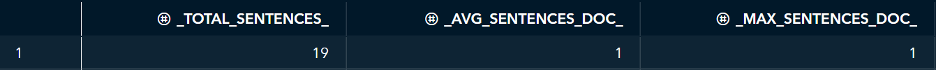

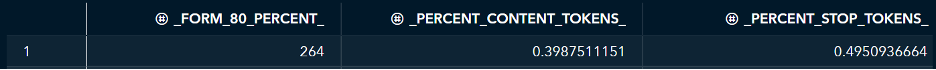

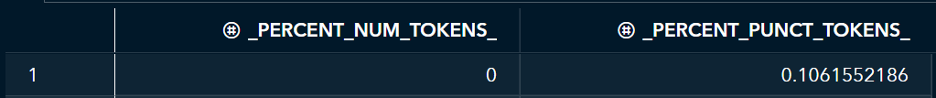

Check out the analysis video, or the process shown above for the first six paragraphs of Charles Dickens’ A Tale of Two Cities, and decide whether you think the literary rumor that Dickens was paid by the word for his writings is true.

As you can see, Dickens did write some very long sentences: these six paragraphs contain only 19 sentences! From literary works to legal documents, corpus analysis lets you compare information across documents and corpora (more than one corpus). After seeing this analysis, we hope you’re inspired to continue exploring NLP!

Learn more

- Check out additional documentation on corpus analysis and on Visual Text Analytics.

- Keep exploring by checking out these resources for data scientists or visit Visual Text Analytics.