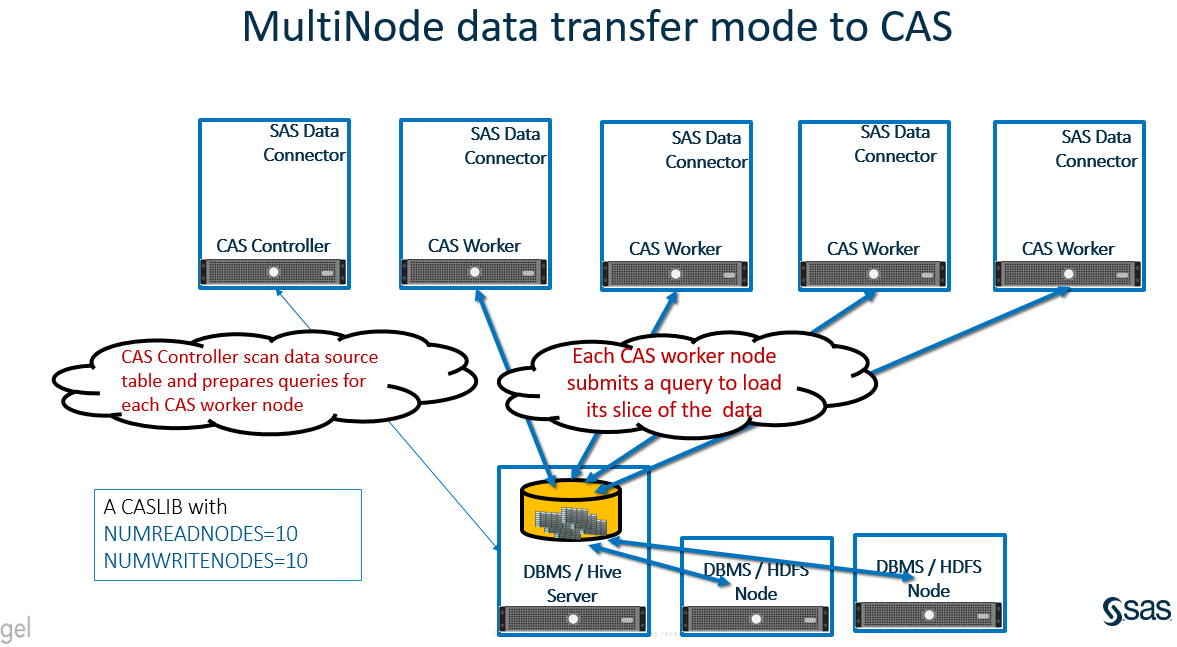

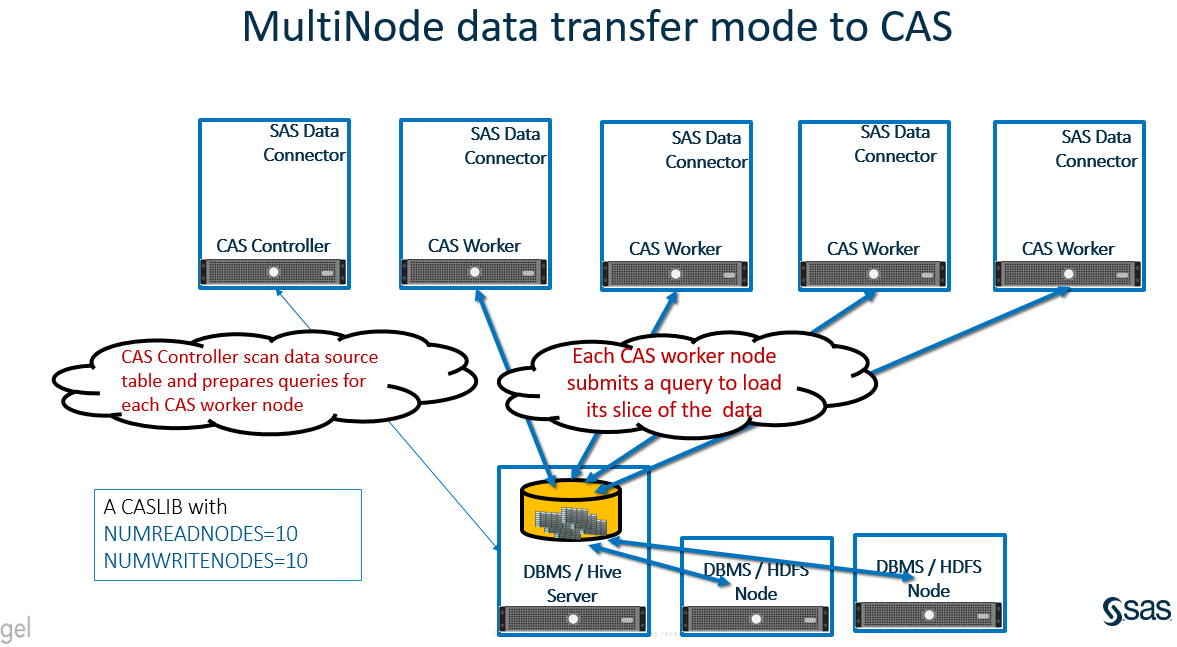

SAS Viya 3.3 introduces Multi Node Data Transfer, a new mechanism for transferring data between a data source and SAS Cloud Analytic Services (CAS). Learn more about this feature.

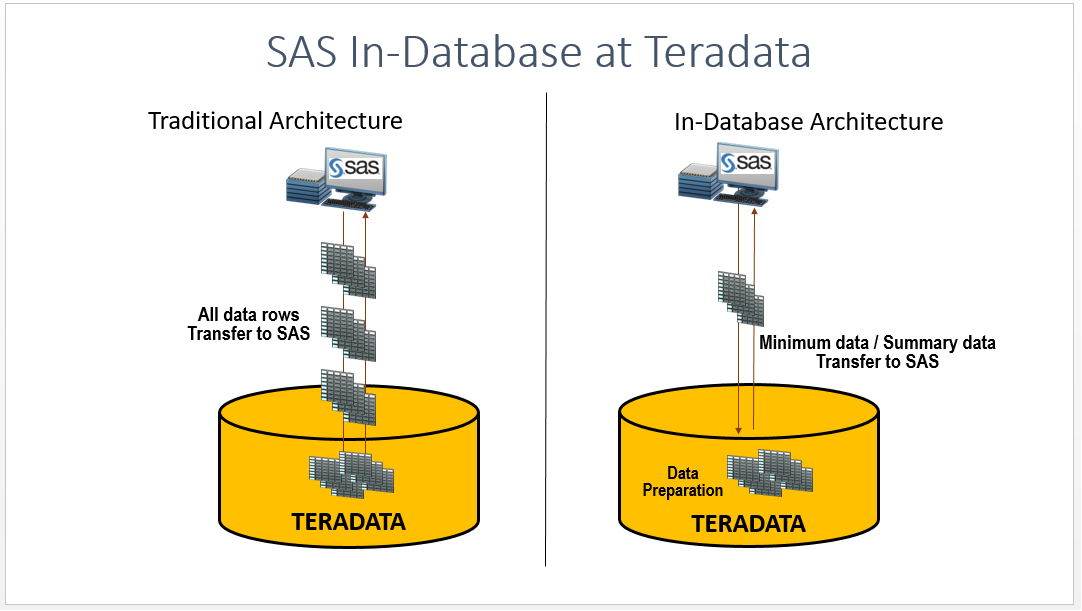

When you use conventional methods to access and analyze data from Teradata tables, SAS brings every row of a Teradata table to the SAS Workspace Server. As the number of rows in the table grows over time, so does the network latency of fetching that data from the database.

As a SAS Viya user, you may be wondering whether it is possible to run data appends and data updates concurrently against a global Cloud Analytic Services (CAS) table from two or more CAS sessions. (Learn more about CAS.) How would this impact the report view while data append or