Introducing the feature contribution index for model assessment

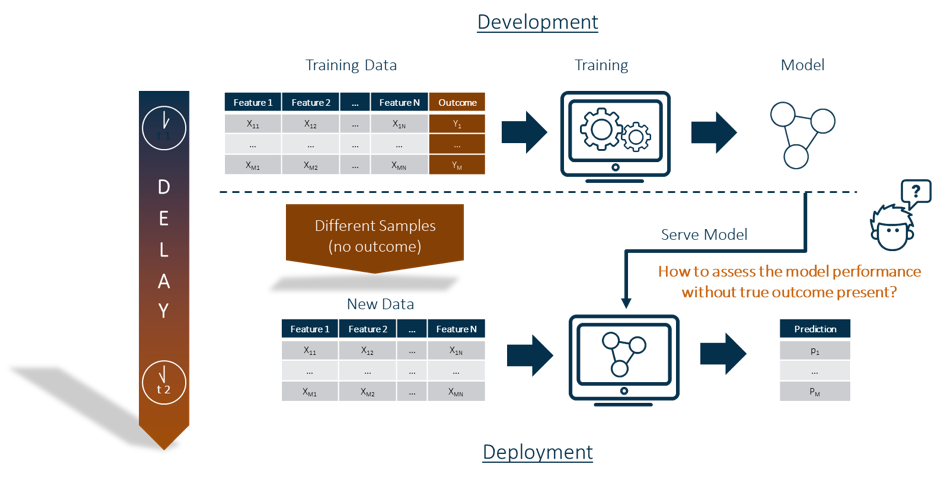

Most model assessment metrics, such as Lift, AUC, KS, and ASE, require the target (label) to be present in the data. That is always the case at model training time. But how can I ensure that the developed model can be applied to new data for prediction, where the target is not yet known?