Authors: Yongqiao Xiao, Maggie Cech, and Patrick Koch

Analytic Store, or ASTORE, is a SAS-developed format used to persist an analytic model after it is built, so that the model can be deployed to score new data in different environments. Open Neural Network Exchange (ONNX) is an open-source standard format for persisting machine learning models and is widely supported by many vendors. Model persistence and portability are vital to deploying data science pipelines consistently, as shown by the commitment of SAS and the open-source community to developing and supporting such standards. ASTORE supports over 40 different model types. The ONNX Model Zoo contains a growing collection of models trained by the community. We will show how these two formats can be used together: how an ONNX model can be integrated into ASTORE and used within SAS environments. We’ll provide examples employing object detection and image classification.

Introduction to ASTORE and ONNX

This post illustrates how an ONNX model is integrated into ASTORE and then deployed as a regular analytic store in different SAS environments. These include SAS Event Stream Processing, in-database processing, CAS, CMP, SAS MVA Clients, and SAS Model Manager.

ASTORE is a framework that allows a user to save the state from a predictive analytic procedure/action and to score new data with the saved state. The state from a predictive analytic procedure/action is created using the results from the training phase of model development. It is usually a binary file, which is called an analytic store. In model deployment, the analytic store is restored to the state of the predictive model for scoring new data. This is done consistently, no matter where the scoring occurs.

ONNX defines a standard set of operators and data types for representing neural network models. The operators, along with their inputs and outputs, form a computation graph. Each operator is a node in the graph, and the nodes are connected through their inputs and outputs. The graph is acyclic, so it can be computed in the order of dependence among the nodes. The example "Convert a pipeline with ColumnTransformer" shows such a graph for a logistic regression model. For more details, see "About ONNX" and ONNX on GitHub.
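To make the "order of dependence" idea concrete, here is a minimal Python sketch that orders the nodes of a small acyclic graph so that every operator runs after the operators it depends on. The three-node graph (`MatMul`, `Add`, `Sigmoid`) is a hypothetical stand-in for a logistic regression model, and the helper names are illustrative only; this is not how ASTORE or an ONNX runtime is implemented.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical graph: each operator maps to the operators whose outputs it consumes.
graph = {
    "MatMul": set(),        # consumes only the graph inputs
    "Add": {"MatMul"},      # adds the bias to the MatMul output
    "Sigmoid": {"Add"},     # turns the linear score into a probability
}

def execution_order(dependencies):
    """Return an evaluation order in which every node follows its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())

def is_acyclic(dependencies):
    """Return True if the graph has no cycle, so a valid order exists."""
    try:
        execution_order(dependencies)
        return True
    except CycleError:
        return False

order = execution_order(graph)
print(order)
```

A graph with a cycle has no valid evaluation order, which is why the acyclic property matters for scoring.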

The integration of an ONNX model into ASTORE involves saving the ONNX model to an analytic store – exactly as with any analytic store. In the analytic store, we save the ONNX model itself (intact), together with variable mappings, class labels, and other information necessary for scoring. The ONNX integration also supports checking the validity of an ONNX file, describing an ONNX file, and extracting the ONNX file from a saved ONNX analytic store.

ONNX/ASTORE integration

Several new actions were developed specifically for ONNX integration. Additional functionality was added to pre-existing actions in order to support ONNX. The CHECK statement lets a user check whether a file contains a valid ONNX model. This saves users from spending time working with and debugging code for a model that is not a valid ONNX model in the first place.

Once a model is known to be valid, a user can look inside it with the pre-existing ASTORE DESCRIBE statement by specifying that the file is an ONNX model. For ONNX models, DESCRIBE reports the expected shape, value types, and element types of both the input and output data. It also provides basic information specific to ONNX models, such as producer name and version, model version, and domain.

Syntax and example code can be found in SAS ASTORE documentation and the ONNX Integration GitHub repository.

Save an ONNX model to ASTORE

Saving an ONNX model as an ASTORE requires information obtained from the DESCRIBE statement. From DESCRIBE, we know that the YOLO V3 model takes two input tensors and outputs three tensors. See ONNX models and YOLOv3 on GitHub for more details. Because SAS does not support tensors directly, we need to map tensors to SAS variables.

The YOLO V3 model has two input tensors. The first one is for the resized image, which can be mapped to a SAS varbinary variable. The second one is for the height and width of the original image, which can be mapped to two SAS variables accordingly. The mapping for each input tensor is specified by the INPUTS sub-statement of the SAVEAS statement of PROC ASTORE. If an input tensor has a missing dimension, we need to specify the exact shape of the input tensor in the mapping. For an image tensor, we might also need to specify additional information such as the color order of the model and the input, the shape order of the model and the input, whether to normalize the pixels by a factor, and so on.
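As an illustration of what the shape-order, color-order, and normalization settings describe, the following Python sketch converts a tiny NHWC/BGR image batch into NCHW/RGB and scales the pixels. It assumes that normalizing by a factor means dividing each pixel by that factor; the function name is hypothetical, and the sketch says nothing about how ASTORE performs the conversion internally.

```python
def nhwc_bgr_to_nchw_rgb(batch, norm_factor=255.0):
    """Convert a nested-list NHWC/BGR batch to NCHW/RGB, dividing pixels by norm_factor."""
    converted = []
    for image in batch:                      # loop over N
        height = len(image)
        width = len(image[0])
        planes = []
        for channel in (2, 1, 0):            # positions of R, G, B inside a BGR pixel
            plane = [
                [image[y][x][channel] / norm_factor for x in range(width)]
                for y in range(height)
            ]
            planes.append(plane)             # one H x W plane per output channel
        converted.append(planes)
    return converted

# One 1x2 image stored as BGR: a pure-blue pixel followed by a pure-red pixel.
batch = [[[[255, 0, 0], [0, 0, 255]]]]
result = nhwc_bgr_to_nchw_rgb(batch)
print(result)
```

The result has shape (1, 3, 1, 2): the channel axis has moved ahead of height and width, and the red plane now comes first.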

The output tensors can also be mapped to SAS variables. Since we explicitly specify modelType=YOLOV3 in the following code, the output will be automatically produced in the standard format for object detection.

The YOLO V3 model requires letterbox resizing, which can be done by the processImages action in the image action set. The complete SAS code for the image preprocessing and saving to ASTORE can be found in YOLOV3 example code. Shown below is the SAVEAS statement code.

    %let labels = %str("person" "bicycle" "car" "motorbike" "aeroplane" "bus" "train" "truck"
       "boat" "traffic light" "fire hydrant" "stop sign" "parking meter" "bench" "bird" "cat"
       "dog" "horse" "sheep" "cow" "elephant" "bear" "zebra" "giraffe"
       "backpack" "umbrella" "handbag" "tie" "suitcase" "frisbee" "skis" "snowboard"
       "sports ball" "kite" "baseball bat" "baseball glove" "skateboard" "surfboard" "tennis racket" "bottle"
       "wine glass" "cup" "fork" "knife" "spoon" "bowl" "banana" "apple"
       "sandwich" "orange" "broccoli" "carrot" "hot dog" "pizza" "donut" "cake"
       "chair" "sofa" "pottedplant" "bed" "diningtable" "toilet" "tvmonitor" "laptop"
       "mouse" "remote" "keyboard" "cell phone" "microwave" "oven" "toaster" "sink"
       "refrigerator" "book" "clock" "vase" "scissors" "teddy bear" "hair drier" "toothbrush");

    proc astore;
       saveas onnx="tiny-yolov3-11.onnx"
              rstore=sascas1.yolov3store
              data=sascas1.image_resized
              inputs=(var=_image_ varbinaryType=UINT8
                      inputShapeOrder=NHWC inputColorOrder=BGR
                      preprocess=NORMALIZE normFactor=255
                      modelShapeOrder=NCHW modelColorOrder=RGB
                      shape=(1 3 416 416))
              inputs=(vars=(_height_ _width_) shape=(1 2))
              modelType=YOLOV3
              maxObjects=6
              labels=(&labels);
    run;
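The letterbox resizing mentioned above scales an image to fit a square while preserving its aspect ratio, then pads the remaining space. The Python sketch below computes only the geometry of that operation for the 416 x 416 input used in the SAVEAS statement. It illustrates the general technique with a hypothetical function name; the processImages action may round or pad differently.

```python
def letterbox_geometry(width, height, target=416):
    """Return (new_w, new_h, pad_left, pad_top) for an aspect-preserving fit into a square."""
    # Use the smaller scale so that both dimensions fit inside the target square.
    scale = min(target / width, target / height)
    new_w = round(width * scale)
    new_h = round(height * scale)
    # Center the resized image; any odd leftover pixel goes to the right or bottom.
    pad_left = (target - new_w) // 2
    pad_top = (target - new_h) // 2
    return new_w, new_h, pad_left, pad_top

geometry = letterbox_geometry(640, 480)
print(geometry)  # (416, 312, 0, 52)
```

Because the original height and width are passed to the model as a second input, the predicted boxes can be related back to the unpadded image.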

Score with a saved ONNX analytic store

After the ONNX model is saved to an analytic store, we can score with the analytic store in any environment that supports ASTORE. The following code runs the scoring in CAS. The RSTORE= option specifies the model to be used for scoring, which is the ‘yolov3store’ analytic store produced in the SAVEAS code above. The DATA= option specifies the data to be scored, and the OUT= option specifies the table that receives the scoring results.

    proc astore;
       score rstore=sascas1.yolov3store
             data=sascas1.image_resized
             out=sascas1.out
             copyvars=(_id_ _path_);
    run;

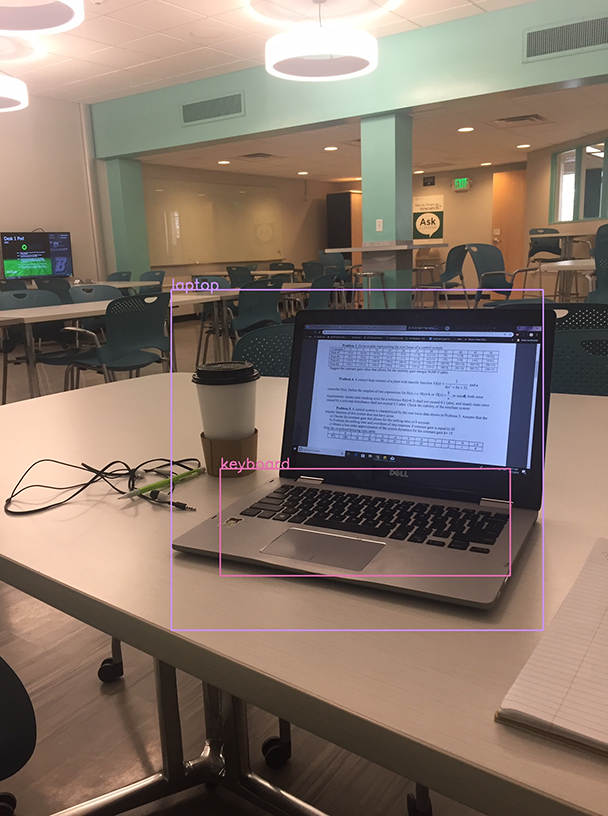

The image with the detected objects and their bounding boxes from the YOLO V3 prediction is shown below.

YOLO in Event Stream Processing

This new feature is also now supported in SAS Event Stream Processing (ESP). ESP allows users to use machine learning and streaming analytics to uncover insights at the edge and make real-time decisions in the cloud.

A user who saves a YOLOv2 or YOLOv3 ASTORE model can then score new data directly in ESP. This means users can deploy an open-source ONNX model saved to ASTORE to score real-time data. Users can now score not only with machine learning models they’ve built but also with any model from across the ONNX open-source community.

Example code and the XML file can be found on GitHub.

More examples

Although we currently only explicitly support saving YOLOv2 and YOLOv3 ONNX models to ASTORE, it is still possible to save any numeric ONNX model to ASTORE, with some customized post-processing in Python. Although post-processing varies for each model, generally they all follow a similar pattern, making it easy to use any ONNX model after learning how to work with one. This makes SAVEAS quite versatile and useful for various machine learning and computer vision tasks.
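As one example of the kind of post-processing involved, an image classification model such as VGG16 typically produces one score per class, which then has to be turned into ranked labels. The Python sketch below applies a softmax and keeps the top results. It assumes the model emits raw scores rather than probabilities, which depends on the model; the function names, labels, and scores are made up for illustration.

```python
import math

def softmax(scores):
    """Convert raw scores to probabilities, shifting by the maximum for numerical stability."""
    peak = max(scores)
    exps = [math.exp(value - peak) for value in scores]
    total = sum(exps)
    return [value / total for value in exps]

def top_k(scores, labels, k=3):
    """Return the k (label, probability) pairs with the highest probability."""
    probabilities = softmax(scores)
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical output scores for three classes.
labels = ["cat", "dog", "bird"]
scores = [2.0, 1.0, 0.1]
best = top_k(scores, labels, k=2)
print(best)
```

Object detection and style transfer models need different steps, but the pattern is the same: read the scored output columns, reshape them as the model documentation describes, and map the numbers back to labels or pixels.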

In future development, we will explicitly support other types of input so users can perform other tasks such as text generation/classification. Below is a table with additional examples of saving an unsupported ONNX model to ASTORE and using the new ASTORE to score data (as well as full YOLO examples):

| Model | Description |
|---|---|
| VGG16 | Deep CNN model that performs image classification, trained on ImageNet dataset which contains 1000 classes, larger model size with higher accuracy |
| Fast Neural Style Transfer | Artistic style transfer model that mixes the content of an image with the style of another image |
| Single Stage Detector (SSD) | Real-time CNN for object detection that detects 80 different classes |
| YOLOv2, YOLOv2 (SAS code) | CNN model for real-time object detection can detect over 9000 object categories, trained with COCO dataset and contains 80 classes |
| YOLOv3, YOLOv3 (SAS code) | Deep CNN model for real-time object detection, bigger than YOLOv2 but still fast, as accurate as SSD but 3x faster |

These examples can also be found on GitHub. Future examples will be available here as they are added.

Summary

We have shown how to describe an ONNX model in ASTORE. Then we demonstrated how to use the information to save an ONNX model to an analytic store. Once the ONNX model is saved to an analytic store, we can score new data with the analytic store in all environments that support ASTORE. We have illustrated the process with object detection and image classification examples in both SAS and Python. This integration greatly expands the predictive capabilities of SAS data science pipelines. It allows models, trained and shared by the open-source community using this new standard ONNX format, to be deployed and even coupled with other ASTORE models trained with SAS code.
