When developing SAS applications, you can feed database tables into your application through the LIBNAME access engine, either by referring directly to a database table or via SAS or database views that themselves refer to one or more database tables.

More on Automation with SAS: Let SAS write batch scripts for you

However, such on-the-fly data access may not be efficient enough, especially for interactive SAS applications where system response time is critical. In distributed systems, where the database resides on one server and the SAS Application server runs on a different physical server, the user wait time caused by delays in data access across networks and databases might become intolerable. In such cases, it makes perfect sense to extract database tables and load them into SAS tables in advance, preferably placing them on (or close to) the SAS Application server. That ensures higher operational efficiency (responsiveness) of the interactive SAS application.

SAS Data Integration Studio provides a powerful visual design tool for building, implementing, and managing data integration processes across different data sources, applications, and platforms.

However, when there are multiple tables, loading them one by one with even such a powerful tool can become tedious. In an automation paradigm, being “visual” is not necessarily a good thing; automating implies that we want to get things done without visualizing or even seeing them.

Here is a SAS coding approach, which I recently used for a customer project, to automate the repetitive process.

SAS code example of loading multiple Oracle tables into SAS tables

There were several Oracle tables that needed to be extracted and loaded into SAS data tables on a different physical server. The Oracle tables contained multi-year data, but we were only interested in the latest year’s data. That is, those tables that have a datetime stamp column, EVENT_DT, needed to be subset to the latest rolling 365 days.

Step 1. Creating a driver table

To make this process data-driven, let’s first create a driver table containing a list of the table names that need to be extracted and loaded:

/* --------------------------------------------------- */
/* create table list to extract & load,                */
/* dt_flag indicates whether EVENT_DT variable present */
/* --------------------------------------------------- */
libname parmdl '/sas/data/parmdata';
data parmdl.tablelist;
   length tname $8 dt_flag $1;
   input tname dt_flag;
   datalines;
ADDRESS N
ACCOUNT Y
BENEFIT Y
EXCLUSN N
FINANCE Y
HOUSING Y
;

This program runs just once to create the driver table parmdl.tablelist.

Step 2. Loading multiple tables

The following data-driven program runs every time you need to reload the Oracle tables into SAS:

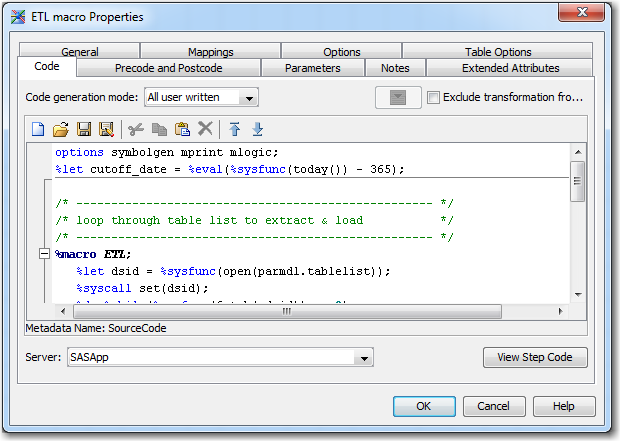

/* source ORACLE library */
libname oralib oracle path="xxx" schema="yyy" user="uuu" PASSWORD="{SAS002}D2DF612A161F7F874C4EF97F" access=readonly;
/* target SAS library */
libname sasdl '/sas/data/appdata';
/* driver table SAS library */
libname parmdl '/sas/data/parmdata';
options symbolgen mprint mlogic;
%let cutoff_date = %eval(%sysfunc(today()) - 365);
/* --------------------------------------------------- */
/* loop through table list to extract & load           */
/* --------------------------------------------------- */
%macro ETL;
   %let dsid = %sysfunc(open(parmdl.tablelist));
   %syscall set(dsid);
   %do %while(%sysfunc(fetch(&dsid)) eq 0);
      data sasdl.&tname;
         set oralib.&tname;
         %if &dt_flag eq Y %then %str(where datepart(EVENT_DT) ge &cutoff_date;);
      run;
   %end;
   %let rc = %sysfunc(close(&dsid));
%mend ETL;
%ETL

In this code, we use a coding technique similar to the one described in my earlier blog post Modifying variable attributes in all datasets of a SAS library. We loop through the previously created table parmdl.tablelist in a macro %do-loop and, for each observation containing a single table name, generate a data step that extracts one table at a time.

If macro variable &dt_flag=Y, then we generate and insert a where statement sub-setting the extracted table to the latest year’s data.
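To make the date logic concrete, here is a minimal sketch of the cutoff calculation and the generated where statement. It assumes a small hypothetical WORK.EVENTS table standing in for an Oracle table with a datetime EVENT_DT column; the table and its days_ago variable are illustrative only and not part of the original project.

```sas
/* today() returns a SAS date value (days since 01JAN1960), */
/* so subtracting 365 gives the date one rolling year back  */
%let cutoff_date = %eval(%sysfunc(today()) - 365);
%put NOTE: cutoff_date=&cutoff_date (%sysfunc(putn(&cutoff_date, date9.)));

/* hypothetical stand-in for a table with a datetime column */
data work.events;
   format EVENT_DT datetime20.;
   do days_ago = 0, 100, 364, 366, 800;
      EVENT_DT = dhms(today() - days_ago, 0, 0, 0);
      output;
   end;
run;

/* same where statement that %ETL generates when dt_flag is Y;  */
/* datepart() converts the datetime to a date before comparing  */
data work.events_recent;
   set work.events;
   where datepart(EVENT_DT) ge &cutoff_date;
run;
```

Under these assumptions, WORK.EVENTS_RECENT should keep the rows dated 0, 100, and 364 days ago and drop the two older ones.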

Note a very useful feature here – %syscall set(dsid) – that links SAS data set variables to macro variables that have the same name and data type. Since we have not defined those macro variables, in essence, it creates a namesake macro variable for each SAS data set variable.
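To watch this feature in isolation, the following sketch loops over a small hypothetical WORK.DEMO table (illustrative only) and writes the namesake macro variables to the log after each fetch. The macro name show_rows and the check on the data set identifier are assumptions added for the example.

```sas
/* hypothetical demo table with the same layout as the driver table */
data work.demo;
   length tname $8 dt_flag $1;
   input tname dt_flag;
   datalines;
ACCOUNT Y
ADDRESS N
;

%macro show_rows;
   %let dsid = %sysfunc(open(work.demo));
   /* open() returns 0 when the data set cannot be opened */
   %if &dsid = 0 %then %do;
      %put ERROR: could not open WORK.DEMO.;
      %return;
   %end;
   /* link data set variables to same-named macro variables */
   %syscall set(dsid);
   /* fetch() returns 0 while there is another observation to read */
   %do %while(%sysfunc(fetch(&dsid)) eq 0);
      %put NOTE: tname=&tname dt_flag=&dt_flag;
   %end;
   %let rc = %sysfunc(close(&dsid));
%mend show_rows;
%show_rows
```

Each pass through the loop should write one NOTE line to the log showing the values of the current observation.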

Implementing data-driven load in SAS Data Integration Studio

The above SAS code runs on the SAS Application server, the same server where the SAS target library resides. To fully automate the ETL process, this code may be embedded into a SAS job in SAS Data Integration Studio and then scheduled via either Platform Suite for SAS or your operating system scheduler. Alternatively, it can be run in batch mode or via a script that in turn can be scheduled by the operating system scheduler.

In SAS DI Studio:

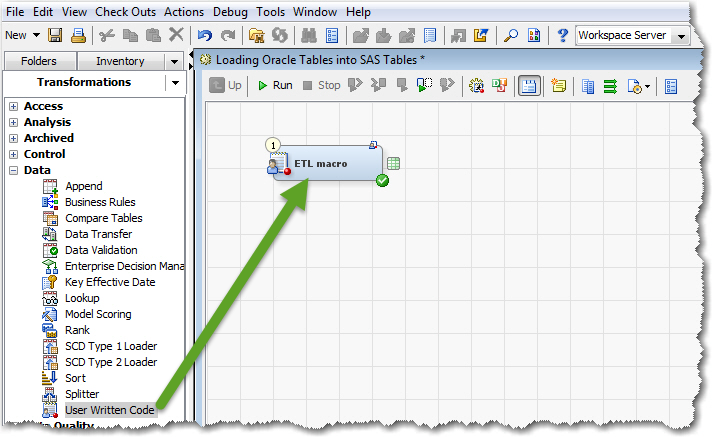

1. Create a New job and give it a meaningful name (e.g. Loading Oracle Tables into SAS Tables).

2. Drag and drop the User Written Code transformation onto the SAS Job canvas and give it a meaningful name (e.g. ETL macro).

3. Go to the Properties of this transformation and click on the Code tab, then paste your SAS code into the text entry area.

4. Click OK and Save your SAS Job.

Note that we do not add Source and Target tables to the Job canvas, only the User Written Code transformation. Also note that the %ETL macro has no direct references to the database table names being extracted and loaded into SAS datasets. Instead, it receives the names of the tables to be loaded from the PARMDL.TABLELIST dataset. This makes it easy to adapt the job to a completely different set of tables; all you need to do is update the table list in the driver table. No change to your SAS DI job is needed.
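As an illustration of such an update, here is one possible way to maintain the driver table with PROC SQL. The table name PAYMENT is hypothetical and stands for any new Oracle table that has an EVENT_DT column; EXCLUSN is taken from the original list.

```sas
libname parmdl '/sas/data/parmdata';

proc sql;
   /* start loading a hypothetical new table, subset by EVENT_DT */
   insert into parmdl.tablelist (tname, dt_flag)
      values ('PAYMENT', 'Y');

   /* stop loading a table by deleting its row from the list */
   delete from parmdl.tablelist
      where tname = 'EXCLUSN';
quit;
```

The next run of the job would then pick up the changed list without any edits to the %ETL macro itself.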

Now it's your turn

What is your experience with automating ETL processes? Please feel free to share it here in the Comments section.

8 Comments

Dear Leonid, I need your help to perform an automation in one of my task.

Could you assist me?

Hi Satish, could you please briefly describe your task? Briefly, but with enough details to understand your problem.

An alternative implementation of %ETL macro with CALL EXECUTE():

data _null_;
   set parmdl.tablelist;
   call execute( cat(
      "DATA SASDL.", left( tname ), ";",
      "SET ORALIB.", left( tname ), ";",
      ifc( dt_flag = "Y", "WHERE DATEPART( EVENT_DT ) GE &cutoff_date;", "" ),
      "RUN;" ) );
run;

Anton, thank you for sharing your elegant solution, it's concise and easy to understand. Would you elaborate on its pros/cons compared with the "traditional" macro looping approach?

SAS DI is an ETL tool and since this is the case, would data lineage be lost the moment we introduce user written transformations? I believe in DI, data lineage is called impact analysis.

You are correct that the User Written Code transformation will not register its objects in the lineage. In this blog post I demoed the code-centric approach, where previously developed code is simply embedded in the User Written Code transformation. A “classic” DI Studio approach would be to create this whole job in DI Studio using a control table that feeds into an iterative job with the Loop and Loop End transformations, which in turn executes a parameterized job. In the latter case, the lineage will be preserved.

Great post, thank you! I am a pretty novice DI Studio user, and sometimes struggle to find clear examples. Thank you for adding steps on how to do it. Many posts just tell me what is possible, but not how to do it.

Thank you, Kim, for your kind feedback. I am glad you find this post useful for you.