With DataFlux Data Management 2.7, the major component of SAS Data Quality and other SAS Data Management solutions, every job has a REST API automatically created once moved to the Data Management Server. This is a great feature and enables us to easily call Data Management jobs from programming languages like Python. We can then involve the Quality Knowledge Base (QKB), a pre-built set of data quality rules, and do other Data Quality work that is impossible or challenging to do when using only Python.

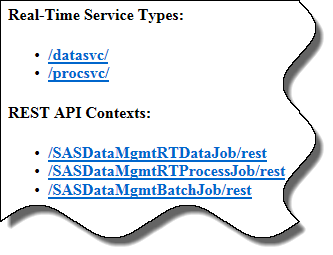

To make a RESTful call from Python, we first need the REST API information for our Data Management job. The best way to get this information is to open the Data Management Server in your browser, where you’ll find links for:

- Batch Jobs

- Real-Time Data Jobs

- Real-Time Process Jobs.

From here you can drill through to your job REST API.

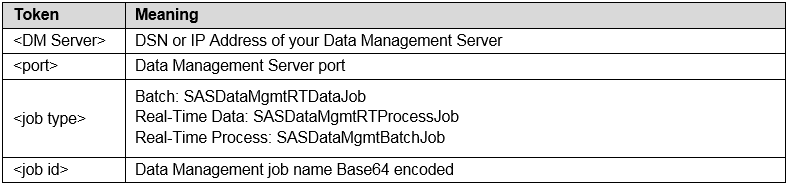

Alternatively, you can use a “shortcut” to get the information by calling the job’s REST API metadata URL directly. The URL looks like this:

http://<DM Server>:<port>/<job type>/rest/jobFlowDefns/<job id>/metadata

The <job id> is simply the job name (with subdirectory and extension) Base64 encoded. This is a common method to avoid issues with illegal URL characters like: # % & * { } \ : < > ? / + or space. You can go to this website to Base64 encode your job name.

If you have many jobs on the Data Management Server it might be quicker to use the “shortcut” instead of drilling through from the top.

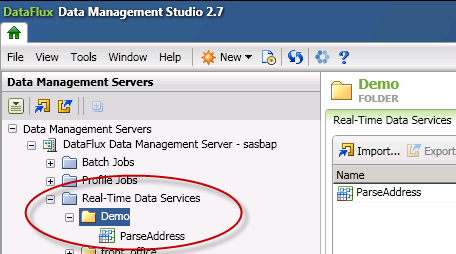

Here is an example of getting the REST API information for the Data Management job “ParseAddress.ddf”, which is in the subdirectory Demo of Real-Time Data Services on the DM Server:

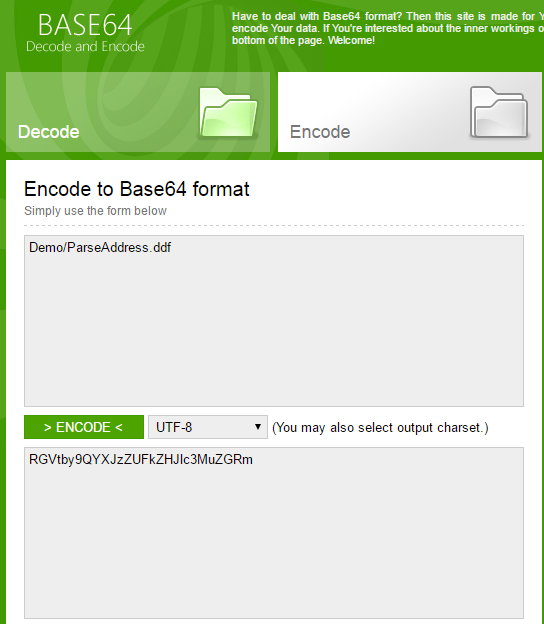

We Base64 encode the job name “Demo/ParseAddress.ddf” using the website mentioned above…

…and call the URL for the job’s REST API metadata:

http://DMServer:21036/SASDataMgmtRTDataJob/rest/jobFlowDefns/RGVtby9QYXJzZUFkZHJlc3MuZGRm/metadata
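If you would rather not depend on a website for the encoding step, it can also be done in Python. The following is a minimal sketch, assuming the server expects standard Base64 of the UTF-8 job path (the example ID above is consistent with that). The helper name `metadata_url` is illustrative and not part of any SAS library.

```python
import base64


def metadata_url(server, port, job_type, job_path):
    """Build the REST metadata URL for a Data Management job.

    job_path is the job name including subdirectory and extension,
    e.g. "Demo/ParseAddress.ddf". Assumption: standard Base64 over UTF-8.
    """
    job_id = base64.b64encode(job_path.encode("utf-8")).decode("ascii")
    return f"http://{server}:{port}/{job_type}/rest/jobFlowDefns/{job_id}/metadata"


# Host, port, and job type are taken from the example in this post.
url = metadata_url("DMServer", 21036, "SASDataMgmtRTDataJob", "Demo/ParseAddress.ddf")
print(url)
```

Opening the printed URL in a browser should show the same metadata page as drilling through from the top.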

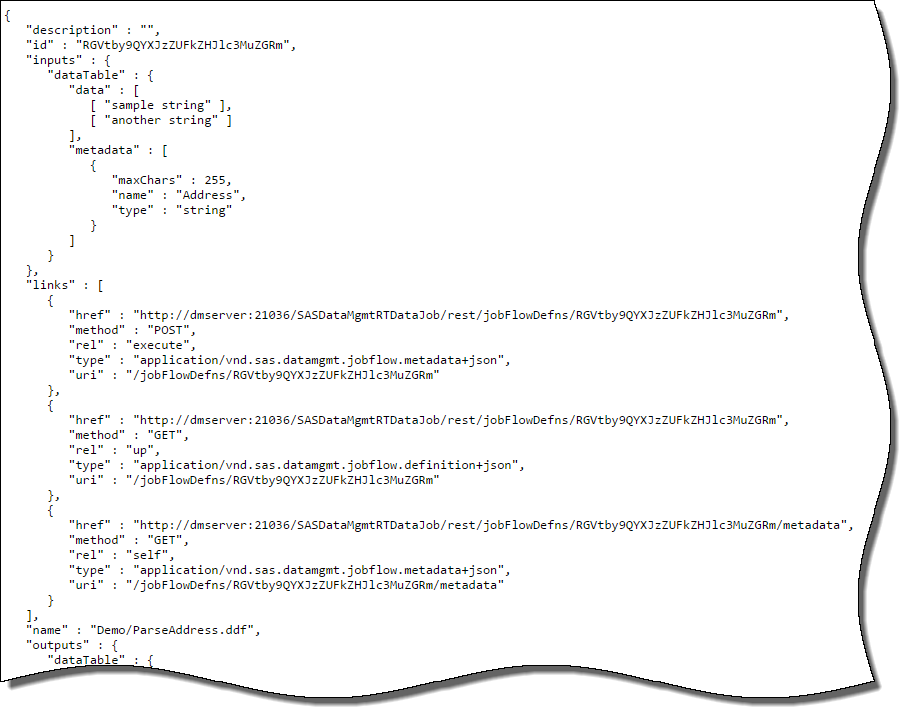

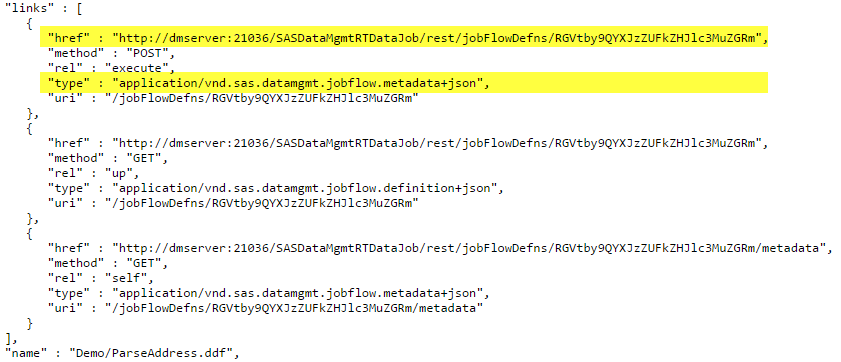

From here we collect the following information:

The REST API URL and Content-Type information…

…the JSON structure for input data…

…which we need in this format when calling the Data Management job from Python:

{"inputs" : {"dataTable" : {"data" : [[ "sample string" ],[ "another string" ]], "metadata" : [{"maxChars" : 255, "name" : "Address", "type" : "string"}]}}}
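Rather than typing this JSON by hand, you can build it from a Python list. The sketch below reproduces the structure shown above for a single string column; the function name `build_payload` and its default arguments are illustrative, and a job with more input columns would need one metadata entry per column.

```python
import json


def build_payload(addresses, column="Address", max_chars=255):
    """Wrap a list of strings in the input structure shown above."""
    return {
        "inputs": {
            "dataTable": {
                # One inner list per row, one element per column.
                "data": [[address] for address in addresses],
                "metadata": [
                    {"maxChars": max_chars, "name": column, "type": "string"}
                ],
            }
        }
    }


payload = build_payload(["sample string", "another string"])
body = json.dumps(payload)  # JSON text to send as the request body
print(body)
```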

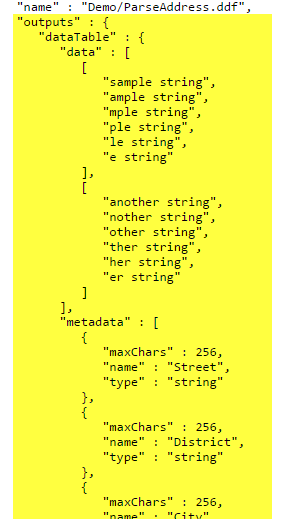

…and the JSON structure for the data returned by the Data Management job.

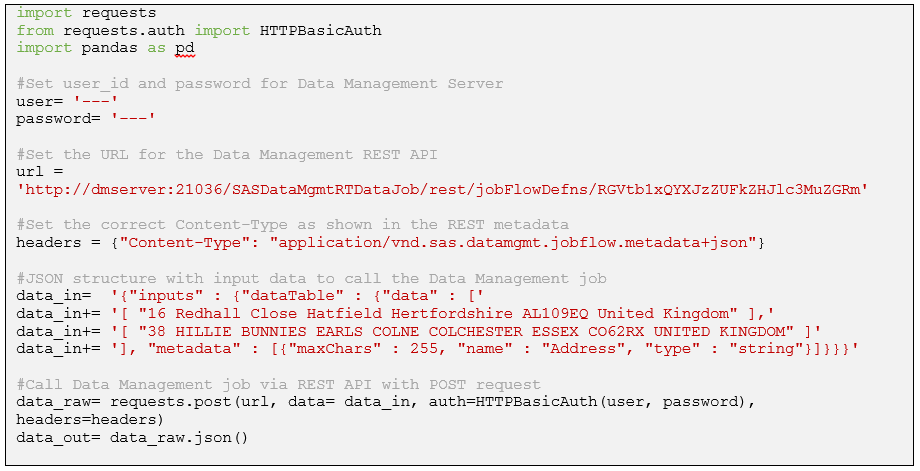

When you have this information, the Python code to call the Data Management job would look like this:

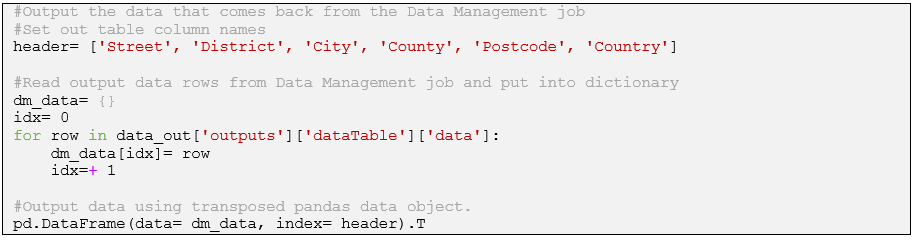
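For readers who cannot copy from the image, here is a text sketch of such a call. It is not necessarily the exact code shown above: it assumes the job is run with an HTTP POST, and it leaves the REST API URL and Content-Type as parameters because both must be copied from your job's metadata page. The function name `call_dm_job` and the optional `session` argument are illustrative.

```python
import json


def call_dm_job(url, content_type, payload, session=None, timeout=30):
    """POST the input payload to a Data Management job and decode the reply.

    url and content_type come from the job's REST metadata page.
    session may be a requests.Session (or a stand-in with a post() method);
    if omitted, the requests module itself is used.
    """
    if session is None:
        import requests  # third-party package: pip install requests
        session = requests

    data_raw = session.post(
        url,
        data=json.dumps(payload),
        headers={"Content-Type": content_type},
        timeout=timeout,
    )
    data_raw.raise_for_status()  # fail early on HTTP errors
    return data_raw.json()       # decoded JSON as a Python dictionary
```

A call would then look like `data_out = call_dm_job(rest_url, content_type, payload)`, where `rest_url` and `content_type` hold the values collected from the metadata page.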

The output data from the Data Management job will be in data_raw. We call the built-in JSON decoder from the “requests” module to move the output into a dictionary (data_out), from which we can access the data. The structure of the dictionary follows the REST metadata. We can access the relevant output data via data_out['outputs']['dataTable']['data'].
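To make the returned rows easier to work with, you can pair each value with its column name. This sketch assumes the output table carries a "metadata" list with a "name" per column, mirroring the input structure; check your job's REST metadata to confirm. The sample dictionary and its column names are made up for illustration and are not real job output.

```python
def rows_as_dicts(data_out):
    """Turn the output table into a list of {column name: value} dictionaries."""
    table = data_out["outputs"]["dataTable"]
    # Assumption: output columns are described the same way as input columns.
    names = [column["name"] for column in table["metadata"]]
    return [dict(zip(names, row)) for row in table["data"]]


# Hypothetical response, shaped like the structure described above.
sample_out = {
    "outputs": {
        "dataTable": {
            "data": [["row 1 value A", "row 1 value B"],
                     ["row 2 value A", "row 2 value B"]],
            "metadata": [
                {"maxChars": 255, "name": "ColumnA", "type": "string"},
                {"maxChars": 255, "name": "ColumnB", "type": "string"},
            ],
        }
    }
}

for record in rows_as_dicts(sample_out):
    print(record)
```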

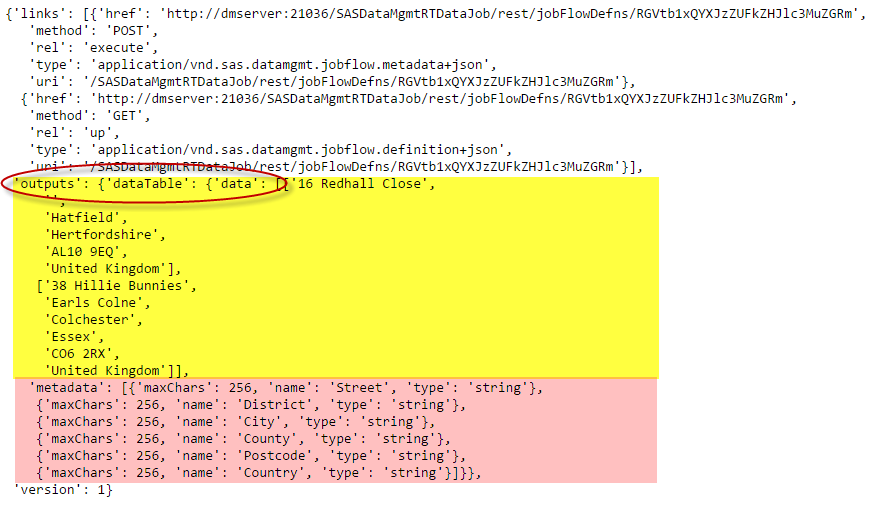

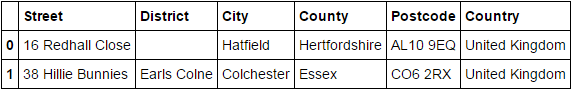

The Python program will produce an output like this…

You can find more information about the DataFlux Data Management REST API here.

Calling Data Management jobs from Python is straightforward and is a convenient way to augment your Python code with the more robust set of Data Quality rules and capabilities found in the SAS Data Quality solution.

Learn more about SAS Data Quality.