In an era dominated by AI and rapid technological advancement, trust has emerged as a critical pillar of our interconnected world.

As Reggie Townsend, Vice President of the Data Ethics Practice at SAS, explains, trust is essential both for meaningful relationships and for the functioning of civil societies. Townsend explored this point and more during a stop at SAS headquarters for the SAS Innovate on Tour event series.

AI and the erosion of trust

Every area of society depends on trust. As a sometimes misunderstood technology, AI has the potential to erode trust at scale. Recent headlines have drawn attention to the darker side of AI and its potential for inaccuracies and biases.

It’s a common, unwritten principle in everyday life: it’s far easier to keep someone’s trust than to win it back once it’s gone. If you want to prevent an erosion of trust in your organization, now is a good time to make trustworthiness a priority and reinforce AI’s value.

“Trust in AI has to start before the first line of code is written,” Townsend said.

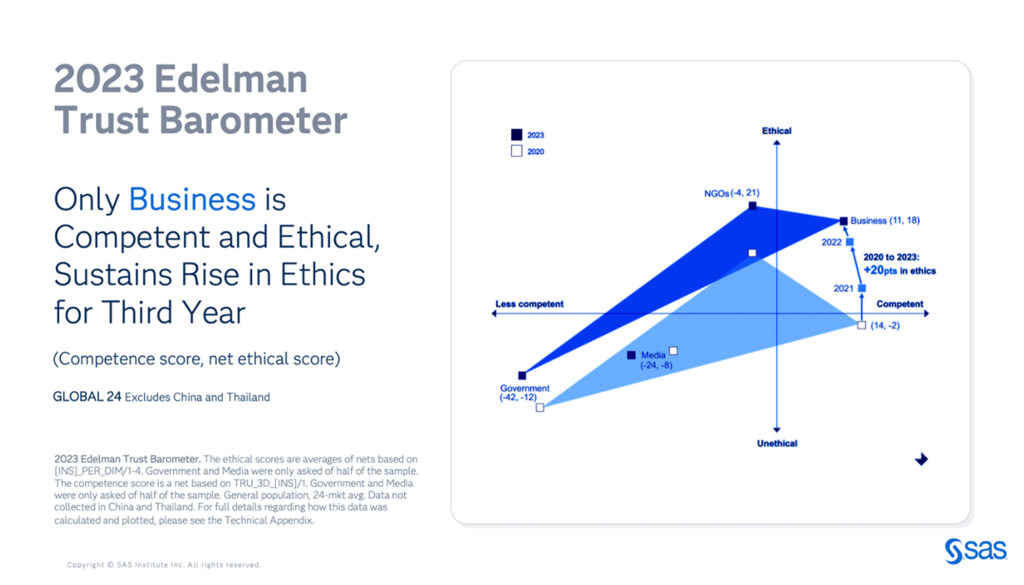

Unfortunately, as Townsend explains, governments and media face an uphill battle in rebuilding global trust. Businesses, by contrast, appear to be in a relatively favorable position to gain and maintain it. According to the latest Edelman Trust Barometer, businesses are currently seen as both competent and ethical, two critical attributes of trust. If organizations were to lose this trust, our societies could be in trouble, Townsend emphasized.

A global response to AI trust challenges

Governments worldwide are aware of the challenges posed by AI. They recognize the tension between ubiquitous technology and the need for citizens to trust that AI benefits them. Because of that realization, governments have begun to act.

Initiatives like Australia’s inquiry into responsible AI, the EU’s AI Act and the United States’ coordination efforts with multiple agencies, including the National AI Advisory Committee, show that action is underway.

Across the board, the call is for comprehensive regulation, Townsend explained. This means setting technical standards for the public and private sectors and ensuring that AI is used in a trustworthy and ethical way. Because SAS does business worldwide, we are closely monitoring these regulatory approaches to AI.

Townsend emphasized that a comprehensive approach is necessary, one that addresses the sociotechnical combination of people, processes and technology.

A holistic approach to trustworthy AI

To ensure trustworthy AI, SAS adopted a comprehensive approach. This includes a well-structured governance model consisting of four vital components, which Townsend explained in detail:

- Oversight: An interdisciplinary executive committee that provides guidance on AI ethical dilemmas, from sales opportunities to procurement decisions.

- Controls: Evaluating global AI compliance requirements and establishing AI-specific risk management methods.

- Culture: Training and coaching employees in trustworthy AI principles, methods and tools.

- Platform: Approaching trustworthy AI not only as a moral imperative or a matter of compliance, but also as a market in which we can do well financially and do good for those we serve.

“Being ethical by design and building trustworthiness into our platform enables our customers to build compliant, responsible AI with SAS,” Townsend said.

In essence, SAS doesn’t view trustworthy AI as a checkbox exercise; it’s a holistic philosophy that extends from executive oversight to cultural integration and market competitiveness.

Trustworthy AI in action

Erasmus Medical Center in the Netherlands is passionate about saving lives and helping patients return to society. Dr. Michel van Genderen, an intensive care physician at the facility, faces a familiar challenge: a surge in demand for medical services and a shortage of personnel. At Erasmus, the staff treats over 700 patients each year, and the average stay is six days, even though 65% of these patients require no further intervention after surgery. Together, these factors put immense pressure on the facility.

There are countless examples of AI in health care. However, Townsend acknowledges that while AI can help medical centers like Erasmus, health care professionals won’t use AI unless they can trust that it is accurate and explainable.

“It’s important to make sure these [AI] models are safe, reliable and do not harm patients,” Townsend said.

And that’s where Erasmus found a partner in SAS.

“We both understand that the true challenge is not about developing AI models but it is about implementing analytics at the bedside in a responsible fashion,” said Dr. Michel van Genderen in an interview with SAS.

In collaboration with SAS, Dr. van Genderen and his team pioneered an innovative model called DESIRE. The model helps doctors determine whether it is safe to discharge patients after major oncology surgery.

With DESIRE, Erasmus physicians achieved a remarkable 50% reduction in readmission rates. More importantly, the change helped optimize hospital resources by freeing up beds for other patients. This combination of ethical AI and analytics helped influence both the cost and the quality of care, demonstrating the potential of AI in health care innovation.

The success of models like DESIRE has created a vision for the future. SAS and Erasmus, in collaboration with TU Delft, the Netherlands’ oldest and largest public technical university, established an Innovation Center for Artificial Intelligence (ICAI) lab.

The lab aims to create a robust framework that translates responsible innovation principles into concrete clinical and technical guidelines. As Townsend explained, this will help ensure the ethical development of human-centric AI technology. The lab is currently awaiting recognition from the World Health Organization. If that recognition is granted, the lab will serve as a model for medical centers worldwide, illustrating how trust and the ethical deployment of AI can revolutionize health care.

Our responsibility in AI

In the end, it all boils down to responsibility, which Townsend emphasized throughout his message. Trust is the foundation upon which we must build AI systems that reflect the best of humanity.

“As the founder and future of analytics and AI, we have a part to play in the AI ecosystem,” Townsend said. “Ultimately, it is our responsibility to make AI reflect the best of us rather than repeat the injustices of the past.”

SAS is on a mission to innovate for the better, and we see it as our duty to care about AI, about trust and about a brighter future.