It's almost impossible to go a day without hearing about the limitless potential or total existential threat (depending on your perspective) posed by the rapid emergence of generative artificial intelligence.

It’s hard not to picture the dystopian aftermath of AI’s instant of self-awareness, as imagined in sci-fi series like Terminator and The Matrix. While generative AI (GenAI) holds great promise to transform many industries, including health care, the downside is that fraudsters can also wield this new technology. That includes anything from generating fake medical records and diagnostic images in seconds to devising enhanced schemes to steal or fabricate patient identities.

The intersection of generative AI and health care fraud

The intersection of GenAI and health care fraud demands immediate scrutiny. What does this new technology mean for health care fraud? Beginning with current threats, let’s consider one of GenAI's greatest strengths – immediately generating human-like narratives given a few simple prompts. It doesn’t take too much imagination to see how this can help a bad actor who wants to (falsely) substantiate medical services through record documentation.

I decided to give it a go myself. With a simple prompt, I asked ChatGPT to create a medical record describing a chiropractic patient experiencing subluxation of the C4 vertebra. The conversation is below.

The resulting output, barring minor errors like misspelling the singular “vertebra” as the plural “vertebrae,” is extremely impressive. Within a few seconds, I had a medical record describing the chief complaint, history of present illness, medical history, description of the exam, diagnosis, treatment plan with goal, and instructions for follow-up.

I praised ChatGPT and asked it to create five more records with varying diagnoses for each patient. Not one to turn down a user’s request, ChatGPT handily created them on the fly.

Now consider the template medical record (aka boilerplate) fraud scheme. This is when a provider submits claims for services that did not occur, accompanied by the same medical record with minor variation for each submitted claim. The idea is that human eyes will never review all the medical records together – at least not thoroughly.

The emergence of GenAI enhances this fraud scheme because a large language model-based chatbot can effortlessly devise diverse medical records. This allows users to create substantially different medical records to document varying procedures and diagnoses with a few simple keystrokes.
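To make the contrast concrete, here is a minimal sketch of the kind of text-similarity check that could surface classic boilerplate records. It is an illustration only, not a description of any payer's actual tooling: the `flag_boilerplate` function, the 0.8 threshold, and the sample claim texts are all hypothetical. It uses Python's standard `difflib` to compare records word by word.

```python
from __future__ import annotations

from difflib import SequenceMatcher
from itertools import combinations


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two record texts, compared word by word."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def flag_boilerplate(
    records: dict[str, str], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Return pairs of claim IDs whose record text is nearly identical.

    The threshold is an assumed value for illustration, not a validated cutoff.
    """
    flagged = []
    for (id_a, text_a), (id_b, text_b) in combinations(records.items(), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:
            flagged.append((id_a, id_b, round(score, 2)))
    return flagged


# Hypothetical sample records: the first two differ only in minor details.
records = {
    "claim-001": "Patient reports neck pain for two weeks. "
                 "Subluxation of C4 noted. Adjustment performed.",
    "claim-002": "Patient reports neck pain for three weeks. "
                 "Subluxation of C5 noted. Adjustment performed.",
    "claim-003": "New patient presents with low back stiffness after lifting. "
                 "Lumbar exam unremarkable; home exercises advised.",
}

flagged = flag_boilerplate(records)
for id_a, id_b, score in flagged:
    print(f"{id_a} and {id_b} look like template records (similarity {score})")
```

With these sample inputs, only the first two claims are flagged. Records that a chatbot has rewritten with substantially different wording would likely fall below any such threshold, which is exactly why GenAI strengthens this scheme.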

Another strength of GenAI is image generation. Whether capturing the surrealist style of Salvador Dalí or creating strikingly realistic images of Pope Francis donning a puffer jacket, the images generated by AI applications like DALL·E 2 can be awe-inspiring. Unfortunately, the capability to create very real-looking images has a perfect application in health care fraud.

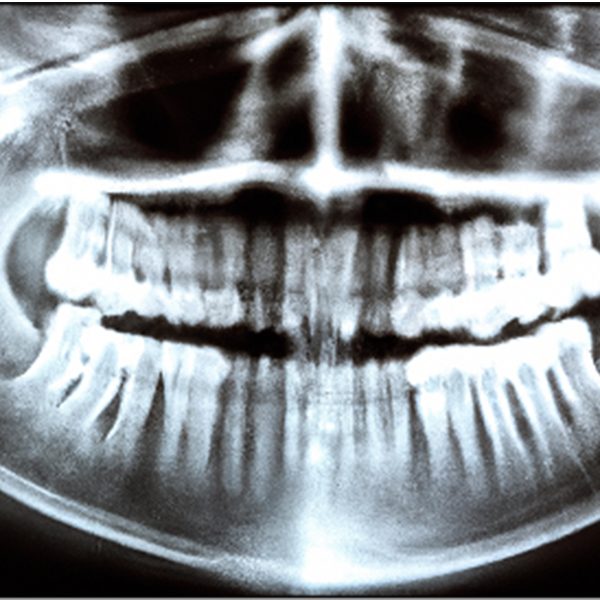

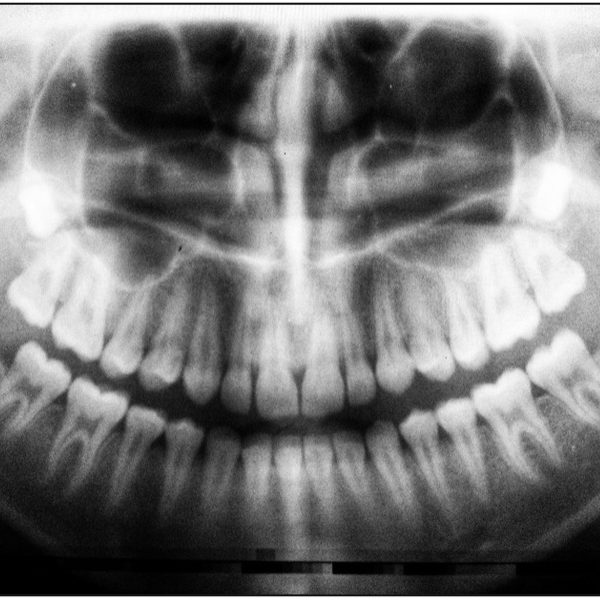

Below are images of two dental X-rays: one is real, and the other I created using generative AI.

Which X-ray is AI-generated becomes evident when the images are closely examined side by side, yet the distinction might not be immediately discernible to an untrained eye. To remove any lingering doubts, the image on the left is AI-generated.

A couple of additional points for consideration:

- First, I am a novice at using GenAI to create images, and I am not a dental clinician. Someone with more experience in prompt engineering and with clinical expertise could generate better results.

- Second, GenAI image creation is still in its infancy and is rapidly evolving. Only months ago, it was easy to identify deepfakes by spotting superfluous digits and limbs. This is becoming less true as models use more training data and users become more sophisticated. This can be said of all generative AI.

The dawn of new challenges: Deepfake interviews?

We’re at the dawn of a new era. While prognostications are prone to wide margins of error, recent rapid advances indicate that generative technologies will only become more adept at what they already do. GenAI systems are currently capable of responding to people in real time, generating sounds that mimic existing human voices, and creating entirely new voices.

In other words, AI systems can speak. I’m unaware of a GenAI that can perform these actions simultaneously and convincingly hold a conversation over the phone, but it is conceivable that this is coming.

Imagine conducting an interview to confirm rendered services, only to fall victim to the deceit of a deepfake on the other end of the line. In the meantime, it is certainly possible to use GenAI to respond to verification of service letters.

Mitigating health care fraud in the future

Health systems and payers are rapidly adopting GenAI. This comes as a surprise since the industry is often a late adopter of new technologies (at least one investigator or clinical reviewer reading this will be interrupted by an incoming fax of medical records).

Already, industry trade organizations are abuzz with promises of GenAI handling prior authorizations, medical coding and billing, patient communication, and electronic health record generation. Ultimately, this will blur the lines when it comes to detecting fraud aided by GenAI. Will regulation or payer internal policy make it obligatory to declare GenAI-written content? If so, what would the cutoff be for how much GenAI can contribute?

The good news is that old habits die hard. Fraud has a habit of remaining essentially the same even as it evolves: fraudsters will continue to make sloppy mistakes. As I’ve prompted ChatGPT to create various medical records, I have been given future dates of service. It has also repeated names that are associated with mismatching diagnoses.
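The two slips mentioned above lend themselves to simple automated checks. The following is a rough sketch under stated assumptions: the `Record` fields, the function names, and the sample data are hypothetical and not drawn from any real claims system. A hit is a lead for a human reviewer, not proof of fraud, since a patient can legitimately carry more than one diagnosis.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Record:
    """Hypothetical, simplified view of a submitted medical record."""
    claim_id: str
    patient_name: str
    diagnosis: str
    date_of_service: date


def future_dated(records: list[Record], today: date) -> list[str]:
    """Return claim IDs whose date of service falls after the review date."""
    return [r.claim_id for r in records if r.date_of_service > today]


def names_with_conflicting_diagnoses(records: list[Record]) -> dict[str, list[str]]:
    """Return patient names that appear with more than one distinct diagnosis."""
    seen: dict[str, set[str]] = defaultdict(set)
    for r in records:
        seen[r.patient_name.strip().lower()].add(r.diagnosis.strip().lower())
    return {name: sorted(dx) for name, dx in seen.items() if len(dx) > 1}


# Hypothetical sample data, reviewed as of a fixed date so the result is repeatable.
review_date = date(2024, 1, 15)
sample = [
    Record("claim-101", "Jane Doe", "Cervical subluxation", date(2024, 1, 3)),
    Record("claim-102", "Jane Doe", "Lumbar strain", date(2024, 1, 3)),
    Record("claim-103", "John Roe", "Thoracic sprain", date(2024, 3, 9)),
]

late_claims = future_dated(sample, review_date)
conflicts = names_with_conflicting_diagnoses(sample)
print("Future dates of service:", late_claims)
print("Names with conflicting diagnoses:", conflicts)
```

Passing the review date in explicitly, rather than calling `date.today()` inside the function, keeps the check reproducible when older batches of claims are re-examined.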

It is important to remember that GenAI acts as a force multiplier rather than a total game-changer. By and large, we will still depend on investigative intellect in combination with fast, cutting-edge analytics to identify fraud successfully.

The human and AI revolution

Returning to The Matrix, say what you want about Hollywood’s obsession with rebooting old films; my favorite part of The Matrix Resurrections is the evolution of the relationship between people and AI compared to the original film from the turn of the millennium. No longer do we see ourselves pitted directly against AI machines.

In the contemporary installment, humans and AI work together – albeit toward different ends. We find ourselves in this same position in the health care fraud space. Be prepared for an arms race when it comes to the use of generative AI to both commit and detect fraud.

2 Comments

Excellent read! My team of investigators have already discovered AI created documents submitted by individuals. I am sure this will be a concern for all healthcare insurers into the future.

Excellent summary of how the "bad guys" may adopt emerging technologies! I love your creativity. But what are the "good guys" going to use generative AI to do? Can GenAI detect its own handiwork?