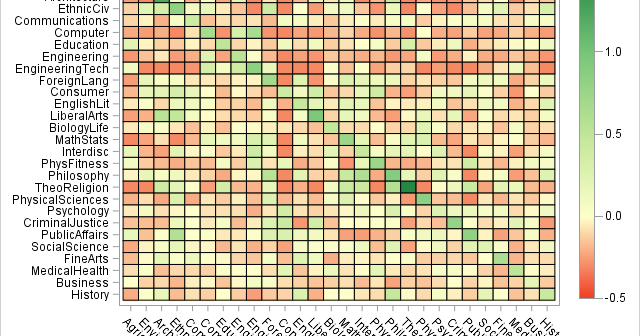

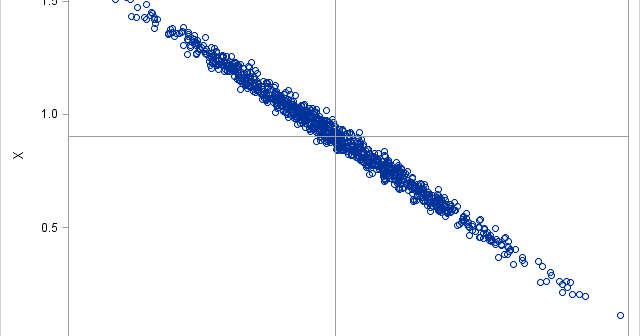

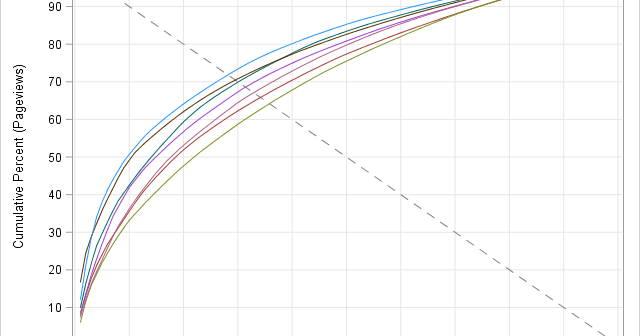

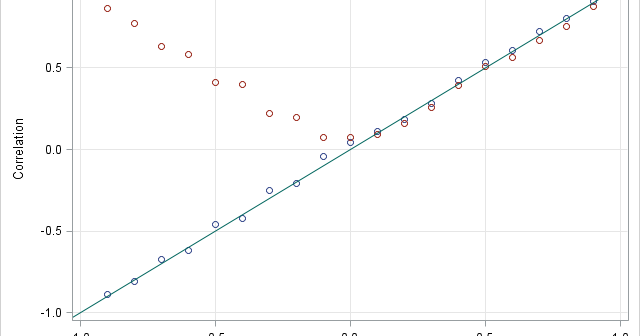

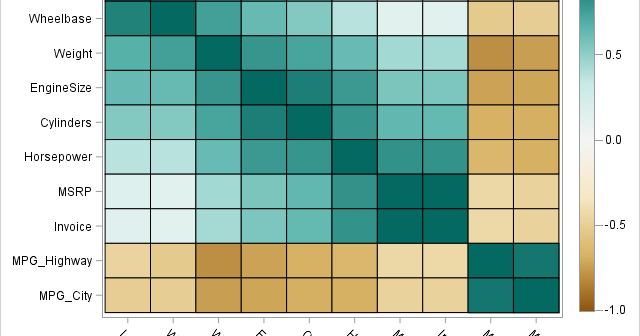

Order matters. When you create a graph that has a categorical axis (such as a bar chart), it is important to consider the order in which the categories appear. Most software defaults to alphabetical order, which typically gives no insight into how the categories relate to each other. Alphabetical order