AI doesn’t always get it right!

Source: https://www.cracked.com/article_28034_this-depixelization-ai-can-give-human-face-to-mario-but-not-to-minorities.html

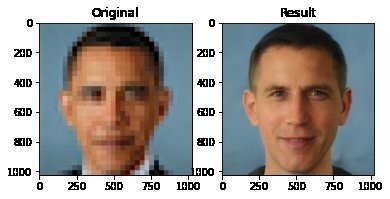

These reconstruction techniques look amazing, but AI doesn't always get it right. Take a look at the image above. The pixelated input was made from a picture of Barack Obama, but the algorithm has no way of knowing that, and the face it reconstructs is, frankly, pretty awful.

Part of this may be bias that has inadvertently been built into the algorithm by the pictures used to train it. Perhaps it is simply less accurate at reconstructing the faces of people from minority ethnic backgrounds? Whatever the reason, it is a warning not to rely too heavily on images reconstructed by GANs.