The Internet has been around a long time. "Things" have been around even longer. Put the things on the Internet, aka the Internet of Things (IoT), and you get so much hype that IoT is at the top of Gartner's "Peak of Inflated Expectations" – and poised for a fall into the "Trough of Disillusionment."

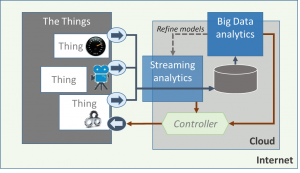

In spite of the predicted hype deflation, the things have a lot to offer the Internet, and not just notions of more big data, faster. Streaming data is part of the nature of things, and event stream processing can help by analyzing those streams in motion.

To understand why streaming analytics is so important to IoT, you have to look past the "billions and trillions" talk, and look at the architectural fundamentals of IoT. Things stretch the Internet and stress IT infrastructure because their nature is new and different. Consider these characteristics of the things in the Internet of Things:

- Things are different from servers in a data center. No clean rooms and raised floors for the things: they live out in the wild. They often have limited connectivity, power, memory, storage and processing capabilities. Some things are constrained in all of these ways.

- Things are different from UI clients, even mobile clients. A smartphone's primary purpose in life is its onboard UI and its interaction with humans. This isn't true for most things, even for wearable things like a Fitbit. The ancestral heritage of things is machine-to-machine (M2M), not UI client-to-server.

- Things are different from each other. In the cloud era of commodity hardware, it's easy to think of computing as homogeneous. But things are not one-size-fits-all. IoT welcomes all things, without regard for power, processing, memory and other capacities. A thing could be inside a human body or inside a hydroelectric dam. A thing could be in a truck or driven over by a truck. IoT is inclusive and embraces this diversity. No willing thing will be left behind.

- Things can stream data from the inside or from the outside. Many things send data from sensors, but computer vision shouldn't be overlooked. In some cases, computer vision can realize significant cost savings, as I discussed in a blog post on the cost advantages of computer vision over a sensor approach for streaming top-rated tournament chess games. The voice of a thing can originate from inside the thing or from outside it, as illustrated on the second thing in the diagram above.

- Security of things is crucial for IoT dreams. The security risks aren't just that someone might discover the usual temperature of your house or tap into a camera on your property. In the M2M tradition, things can actuate as well: move a robot arm, change a setting, even update their own software. How about things telling things to do things over the Internet? That's scary, and it makes a strong case for cyber security. Analytics can help by recognizing anomalies, such as default-password compromises across a region. On the analytical consumption side, poor security creates data integrity issues: can you even trust these things?

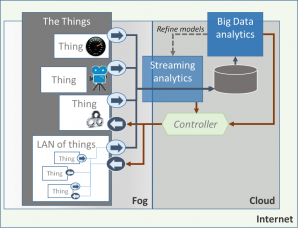

- Things have their own communication channels with other things. It's like parents discovering that their child's peers have a lot of influence on their little pumpkin, and that tweens and teenagers form their own culture based on chatter the parents never see or hear. With these things, it isn't all thin-client communication to the parent server. These things are going to do their own thing. And if trillions of devices are going to be sending zettabytes of data by 2020, then a hub-and-spoke model presents some real headaches, at a minimum. Fog computing, illustrated below, has evolved to address the need for computing at or near the device. The fog, away from the distant, data-center-bound cloud, is where the cloud meets the thing-infested ground. In the fog, streaming analytics can aggregate, distill and route all those data streams in real time so that the cloud doesn't get grounded.
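To make "aggregate, distill and route" a little more concrete, here is a minimal Python sketch of the aggregation step a fog node might perform. Raw readings are grouped into fixed (tumbling) time windows per device, and each window is reduced to one summary record that could be forwarded to the cloud instead of every raw reading. The `Reading` and `Summary` types, the 60-second window and the `summarize_windows` name are hypothetical illustrations, not part of SAS Event Stream Processing or any other product, and the function works on a finite list for simplicity rather than on a live stream.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One raw sensor reading (hypothetical shape for this sketch)."""
    device_id: str
    timestamp: float  # seconds since some epoch
    value: float


@dataclass
class Summary:
    """One distilled record per device per window."""
    device_id: str
    window_start: float
    count: int
    mean: float
    minimum: float
    maximum: float


def summarize_windows(readings, window_seconds=60.0):
    """Group readings into tumbling windows per device and reduce each
    group to a single Summary, sorted by device and window start."""
    buckets = defaultdict(list)
    for r in readings:
        # Align each reading to the start of the window that contains it.
        window_start = (r.timestamp // window_seconds) * window_seconds
        buckets[(r.device_id, window_start)].append(r.value)

    summaries = []
    for (device_id, window_start), values in sorted(buckets.items()):
        summaries.append(
            Summary(device_id, window_start, len(values),
                    mean(values), min(values), max(values))
        )
    return summaries


# Four raw readings from two hypothetical pumps.
readings = [
    Reading("pump-1", 0.0, 10.0),
    Reading("pump-1", 30.0, 14.0),
    Reading("pump-1", 65.0, 20.0),
    Reading("pump-2", 5.0, 3.0),
]
for summary in summarize_windows(readings):
    print(summary)
```

Here four raw readings become three summary records. A real stream processor would also have to decide when a window is complete before emitting it, for example by tracking event time, which this batch sketch sidesteps.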

IoT is one example of computing's long trend away from the UNIVAC days of a single computer occupying an entire basement of a university building and only accessible to highly specialized technicians. These things are right where the action is. And while they contribute to the big data of a distant cloud data center, they are not themselves repositories of big data.

Things are the headwaters of streams, as previously described, like the many feeder streams of the Amazon River basin. The things must fit the real, physical terrain as it exists. While they may feed into a "data lake," they aren't usually data lakes themselves. Things are like the crest of a waterfall: they induce flow rather than contain volume.

By tapping directly into these data streams, event stream processing gives you analytics right where you need them. It complements traditional big data analytics by distilling data in motion so that traditional analytics can be more valuable. SAS Event Stream Processing can even help actuate things in real time and aid in detecting security anomalies or other patterns of interest. In the cloud or in the fog, SAS Event Stream Processing brings clarity to things.
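Anomaly detection on a stream can take many forms. As one hedged illustration, tied to the earlier example of default-password compromises across a region, the Python sketch below counts suspicious login events per region over a sliding time window and flags a region once the count reaches a threshold. The `RegionalLoginMonitor` class, the event type, the region labels, the 300-second window and the threshold of 3 are all assumptions made for this example. The sketch also assumes that events for a given region arrive in timestamp order. It is not how SAS Event Stream Processing implements pattern detection.

```python
from collections import defaultdict, deque


class RegionalLoginMonitor:
    """Hypothetical sliding-window detector: flags a region when at least
    `threshold` suspicious events (e.g. logins accepted with a factory-default
    password) arrive within `window_seconds`."""

    def __init__(self, window_seconds=300.0, threshold=3):
        self.window_seconds = window_seconds
        self.threshold = threshold
        # region -> timestamps of events still inside the window
        self._events = defaultdict(deque)

    def observe(self, region, timestamp):
        """Record one suspicious event and return True if the region
        is currently anomalous. Assumes in-order timestamps per region."""
        window = self._events[region]
        window.append(timestamp)
        # Evict events that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) >= self.threshold


monitor = RegionalLoginMonitor(window_seconds=300.0, threshold=3)
for region, ts in [("north", 0.0), ("north", 100.0), ("south", 150.0), ("north", 200.0)]:
    if monitor.observe(region, ts):
        print(f"possible coordinated compromise in {region} at t={ts}")
```

Keeping only the timestamps inside the window means memory per region stays bounded by the event rate, which matters on the constrained hardware described earlier.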

1 Comment

The things can be easily replaceable or very expensive. For expensive things, being able to predict an upcoming failure in time to fix it before it breaks can save organizations millions. Organizing and storing the thing data for those predictions requires streamlined data for the fastest analytics. While it's easier to store the data as a wide time series entry (datetime, value, value, value), storing the data in a (datetime, value) format concentrates the focus for analytics. Complementing event stream processing, which identifies issues as they occur, SAS also provides prediction of when expensive things will break in the Quality Suite.
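The storage point in this comment can be illustrated with a small reshaping step. This Python sketch turns wide rows of the form (datetime, value, value, value) into long rows with one reading per row. Each long row carries a sensor-name column alongside the datetime and value so that readings stay identifiable; that column, the sensor names and the `wide_to_long` function are assumptions for illustration, not part of any SAS product.

```python
from datetime import datetime


def wide_to_long(rows, column_names):
    """Turn (timestamp, v1, v2, ...) rows into (timestamp, name, value) rows.
    Missing readings (None) are skipped instead of being stored as gaps."""
    long_rows = []
    for ts, *values in rows:
        if len(values) != len(column_names):
            raise ValueError(
                f"expected {len(column_names)} values, got {len(values)}"
            )
        for name, value in zip(column_names, values):
            if value is not None:
                long_rows.append((ts, name, value))
    return long_rows


# Two wide rows from a hypothetical pump with three sensors.
names = ["temperature", "vibration", "rpm"]
wide = [
    (datetime(2016, 1, 1, 0, 0), 71.5, 0.8, 1200.0),
    (datetime(2016, 1, 1, 0, 1), 72.0, None, 1195.0),  # vibration reading missing
]
long_rows = wide_to_long(wide, names)

# A single series, e.g. for a failure-prediction model, is now a simple filter.
vibration = [value for _, name, value in long_rows if name == "vibration"]
print(vibration)
```

The long layout makes each series easy to isolate and tolerates sensors that report at different times, at the cost of repeating the timestamp on every row.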