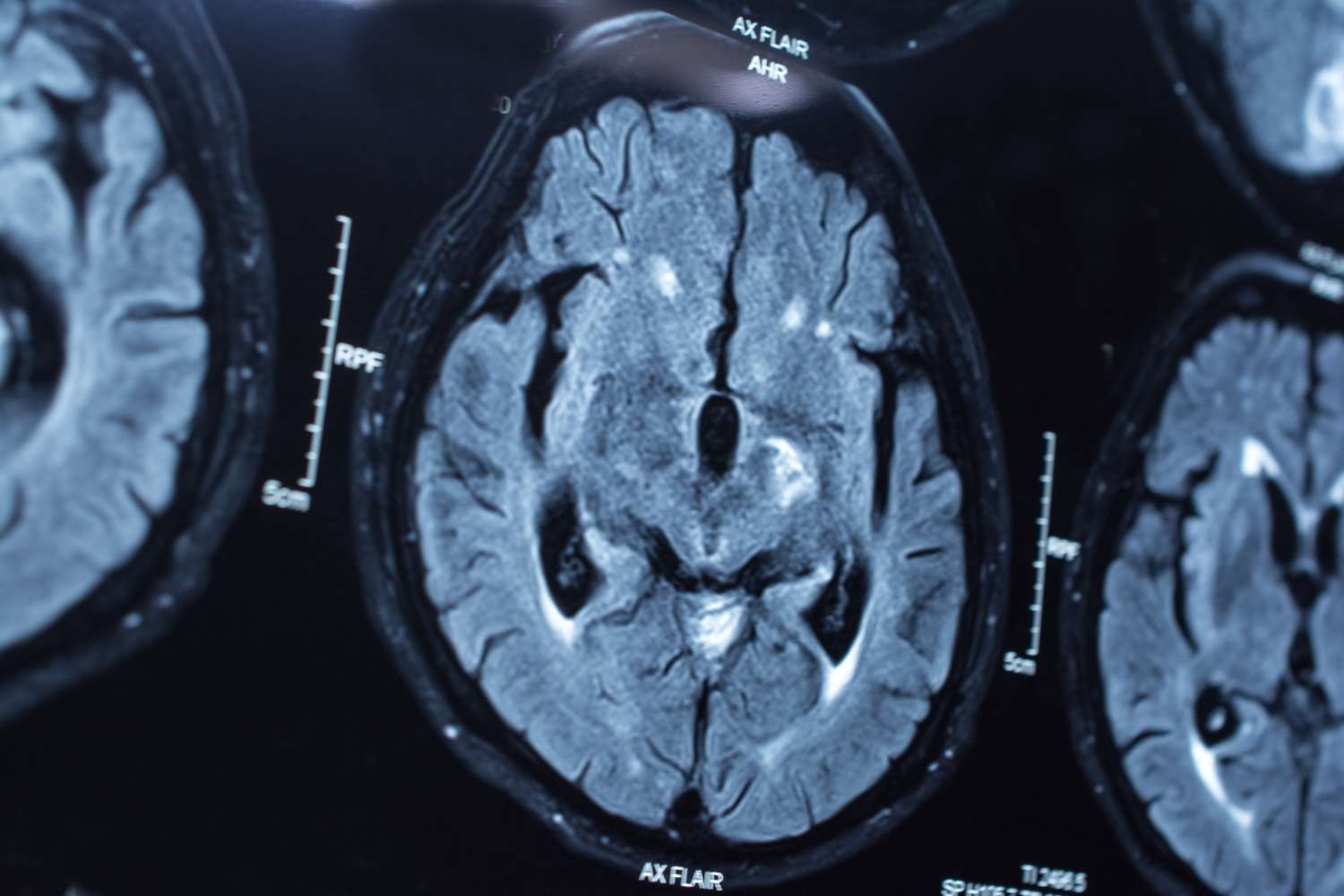

Dementia describes a range of brain disorders that cause a loss of brain function. These conditions are usually progressive and eventually severe. Alzheimer's disease is the most common type of dementia, affecting 62 percent of those diagnosed. Other types include vascular dementia, affecting 17 percent of those diagnosed, and mixed dementia, affecting 10 percent.

Dementia Statistics

There are 850,000 people with dementia in the UK, and numbers are set to rise to over 1 million by 2025 and to 2 million by 2051. This year, 225,000 people will develop dementia: one every three minutes. One in six people over the age of 80 has dementia, and 70 percent of people in care homes have dementia or severe memory problems. Over 40,000 people under 65 have dementia in the UK, and more than 25,000 people from Black, Asian and minority ethnic groups in the UK are affected.

Cost of treating dementia

Two-thirds of the cost of dementia is paid by people with dementia and their families. Unpaid carers supporting someone with dementia save the economy £11 billion a year. Dementia is one of the main causes of disability later in life, ahead of cancer, cardiovascular disease and stroke (statistics from the Alzheimer's Society website).

Tackling dementia requires substantial resources and support from the UK government, which is struggling to find funding for the NHS (National Health Service). During 2010–11, the NHS will need to contribute £2.3bn ($3.8bn) of the £5bn of public sector efficiency savings, with the largest savings expected from primary care trusts (PCTs). With tough times ahead, it is in PCTs' interest to build evidence-based knowledge of how their services (e.g. accident & emergency, inpatient and outpatient care) are used across regions and patient groups, in order to reduce inequalities in health outcomes, better match supply and demand and, most importantly, reduce the costs generated by these services.

Currently in the UK, general practitioners (GPs) deliver primary care services, providing treatment and drug prescriptions and, where necessary, referring patients to specialists, for example for outpatient care provided by local hospitals or specialised clinics. However, GP practices are limited in size and resources, and cannot offer the complete spectrum of care within the local community.

Solution in sight for dementia patients

There is a need to prevent, or at least delay, costly long-term care of dementia patients in nursing homes. Using wearables, monitors, sensors and other devices, an NHS organisation in Surrey is collaborating with research centres to generate 'Internet of Things' (IoT) data to monitor the health of dementia patients while they stay in the comfort of their own homes. The information from these devices will help people take more control over their own health and wellbeing, while the resulting insights and alerts enable health and social care staff to deliver more responsive and effective services.

Particle filtering

One method that could be used to analyse the IoT-generated data is particle filtering. Such data fall naturally within a Bayesian framework: sensor readings arrive as a stream over time, and our beliefs about a patient's condition can be updated as each new reading comes in. Particle filtering is robust and combines historical and real-time data to support better decisions.

In Bayesian statistics, we often have prior knowledge about the phenomenon being modelled. This allows us to formulate a Bayesian model: a prior distribution for the unknown quantities and a likelihood function relating these quantities to the observations (Doucet and Johansen, 2008). As new evidence becomes available, we want to update our current knowledge, that is, the posterior distribution. State space models (SSMs) let us apply these Bayesian methods to time series data, in which a hidden state evolves over time and is observed only through noisy measurements. Their strength, however, depends on specifying the SSM and its parameters appropriately; otherwise the model will perform poorly. Many SSMs are nonlinear and/or non-Gaussian, which makes the likelihood, and hence maximum likelihood estimates, difficult to obtain with standard methods. The classical inference method for nonlinear dynamic systems is the extended Kalman filter (EKF), which linearises the state and observation equations around the current state estimate (e.g. Johnson et al., 2008). The EKF has been applied successfully to many nonlinear filtering problems. However, it is known to fail if the system exhibits substantial nonlinearity and/or if the state and measurement noise are significantly non-Gaussian.
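For reference, the filtering problem described above can be written as follows, in notation close to that of Doucet and Johansen (2008). A hidden state $X_n$ has initial density $\mu$ and transition density $f$, each observation $Y_n$ has density $g$ given the current state, and the filtering distribution $p(x_n \mid y_{1:n})$ is obtained for $n \geq 2$ by a prediction step followed by a Bayesian update:

$$
\begin{aligned}
X_1 &\sim \mu(x_1), \qquad X_n \mid X_{n-1} = x_{n-1} \sim f(x_n \mid x_{n-1}), \qquad Y_n \mid X_n = x_n \sim g(y_n \mid x_n), \\
p(x_n \mid y_{1:n-1}) &= \int f(x_n \mid x_{n-1})\, p(x_{n-1} \mid y_{1:n-1})\, \mathrm{d}x_{n-1} && \text{(prediction)} \\
p(x_n \mid y_{1:n}) &= \frac{g(y_n \mid x_n)\, p(x_n \mid y_{1:n-1})}{\int g(y_n \mid x_n')\, p(x_n' \mid y_{1:n-1})\, \mathrm{d}x_n'} && \text{(update)}
\end{aligned}
$$

When $f$ and $g$ are linear and Gaussian, both integrals have closed forms and the recursion reduces to the Kalman filter. The EKF applies the same closed forms to a local linearisation of $f$ and $g$, which is why it breaks down under strong nonlinearity or non-Gaussian noise.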

An alternative that gives a good approximation even when the posterior distribution is non-Gaussian is the simulation-based Monte Carlo method. It draws samples from the distribution of the variable of interest and approximates an expectation by the empirical average over those samples.
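A minimal sketch of the plain Monte Carlo estimator described above, which approximates $E[g(X)]$ by $\frac{1}{N}\sum_{i=1}^{N} g(X^{(i)})$. It assumes a standard normal $X$ and two illustrative choices of $g$ ($x^2$ and $e^x$) whose exact expectations (1 and $e^{1/2} \approx 1.6487$) are known, so the estimates can be checked. The function name `monte_carlo_expectation` is hypothetical.

```python
import math
import random


def monte_carlo_expectation(g, sampler, n_samples, rng):
    """Approximate E[g(X)] by averaging g over n_samples independent draws of X."""
    total = 0.0
    for _ in range(n_samples):
        total += g(sampler(rng))
    return total / n_samples


def standard_normal(r):
    """Draw one sample of X ~ N(0, 1) from the random generator r."""
    return r.gauss(0.0, 1.0)


rng = random.Random(2024)  # fixed seed so the run is reproducible

# For X ~ N(0, 1): E[X^2] = 1 and E[exp(X)] = exp(1/2) are known exactly.
est_square = monte_carlo_expectation(lambda x: x * x, standard_normal, 100_000, rng)
est_exp = monte_carlo_expectation(math.exp, standard_normal, 100_000, rng)
print(f"E[X^2] ~ {est_square:.3f} (exact 1.000)")
print(f"E[e^X] ~ {est_exp:.3f} (exact {math.exp(0.5):.3f})")
```

The error of this estimator shrinks like $1/\sqrt{N}$ whatever the shape of the distribution, which is what makes it attractive for non-Gaussian posteriors. The catch is that it requires samples from the distribution itself; importance sampling, and SMC built on top of it, relax that requirement.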

To apply these methods to time series data, where observations arrive sequentially, inference must be performed on-line; hence the general term sequential Monte Carlo (SMC). SMC encompasses a range of algorithms used for approximate filtering and smoothing, one of which is particle filtering. Much of the literature presents particle filtering and SMC as if they were the same thing, but the distinction is worth keeping in mind: particle filtering is simply a simulation-based algorithm for approximating complicated posterior distributions. It combines sequential importance sampling (SIS) with an additional resampling step. SMC methods are flexible, easy to implement, parallelisable and applicable in very general settings. The advent of cheap and powerful computing, together with recent developments in applied statistics, engineering and probability, has stimulated many advances in this field (Cappé et al., 2007). A further advantage of SMC over MCMC (Markov chain Monte Carlo) is computational: it does not need to store all of the data.
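A minimal sketch of particle filtering as described above (sequential importance sampling plus resampling), written as the "bootstrap" filter, in which particles are proposed from the state equation and weighted by the likelihood. To keep the example checkable, it assumes a hypothetical one-dimensional linear-Gaussian model, for which the exact answer is given by the Kalman filter. In the nonlinear, non-Gaussian models that motivate particle filtering, only the propagation step and `log_likelihood` would change, and the Kalman filter would no longer be exact. All names and settings (`A`, `Q`, `R`, 2000 particles, the N/2 resampling threshold) are illustrative assumptions, not the Surrey project's model.

```python
import math
import random

# Hypothetical toy model, for illustration only:
#   state:       x_n = A * x_{n-1} + v_n,   v_n ~ N(0, Q)
#   observation: y_n = x_n + w_n,           w_n ~ N(0, R)
A, Q, R = 0.9, 1.0, 1.0


def simulate(T, rng):
    """Draw a hidden state path and its noisy observations from the toy model."""
    x = rng.gauss(0.0, 1.0)  # x_0 ~ N(0, 1)
    xs, ys = [], []
    for _ in range(T):
        x = A * x + rng.gauss(0.0, math.sqrt(Q))
        xs.append(x)
        ys.append(x + rng.gauss(0.0, math.sqrt(R)))
    return xs, ys


def log_likelihood(y, x):
    """log g(y | x) up to an additive constant, which cancels when weights are normalised."""
    return -0.5 * (y - x) ** 2 / R


def particle_filter(ys, n_particles, rng):
    """Bootstrap particle filter: SIS with the state equation as proposal, plus resampling."""
    particles = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]  # samples of x_0
    log_w = [0.0] * n_particles
    means = []
    for y in ys:
        # 1. Propagate every particle through the state equation (the proposal).
        particles = [A * p + rng.gauss(0.0, math.sqrt(Q)) for p in particles]
        # 2. Update importance weights with the likelihood, in log space for stability.
        log_w = [lw + log_likelihood(y, p) for lw, p in zip(log_w, particles)]
        top = max(log_w)
        unnorm = [math.exp(lw - top) for lw in log_w]
        total = sum(unnorm)
        weights = [u / total for u in unnorm]
        means.append(sum(w * p for w, p in zip(weights, particles)))  # estimate of E[x_n | y_1:n]
        # 3. Resample only when the effective sample size falls below N/2.
        ess = 1.0 / sum(w * w for w in weights)
        if ess < n_particles / 2:
            particles = rng.choices(particles, weights=weights, k=n_particles)
            log_w = [0.0] * n_particles
        else:
            log_w = [lw - top for lw in log_w]  # re-centre so log-weights stay bounded
    return means


def kalman_filter(ys):
    """Exact filtered means for the same linear-Gaussian model, used only as a check."""
    mean, var = 0.0, 1.0  # x_0 ~ N(0, 1)
    means = []
    for y in ys:
        mean, var = A * mean, A * A * var + Q  # predict
        gain = var / (var + R)
        mean, var = mean + gain * (y - mean), (1.0 - gain) * var  # update
        means.append(mean)
    return means


rng = random.Random(7)
true_states, observations = simulate(50, rng)
pf_means = particle_filter(observations, 2000, rng)
kf_means = kalman_filter(observations)
max_gap = max(abs(a - b) for a, b in zip(pf_means, kf_means))
print(f"largest |particle filter - Kalman filter| over 50 steps: {max_gap:.3f}")
```

Resampling only when the effective sample size, $1/\sum_i w_i^2$, drops below half the particle count is a common heuristic; resampling at every step is also valid but adds extra Monte Carlo noise. Multinomial resampling via `random.Random.choices` is used here for brevity; systematic or stratified resampling usually gives lower variance.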

References

[1] National Health Service England, http://www.nhs.uk/NHSEngland/aboutnhs/Pages/Authoritiesandtrusts.aspx (accessed 18 August 2009).

[2] Pincus, S. M. (1991). Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA, 88, 2297–2301.

[3] Pincus, S. M., A. L. Goldberger (1994). Physiological time-series analysis: what does regularity quantify? Am J Physiol Heart Circ Physiol, 266, H1643–H1656.

[4] Cappé, O., S. Godsill, E. Moulines (2007). An overview of existing methods and recent advances in sequential Monte Carlo. Proceedings of the IEEE, 95(5), 899–924.

[5] Doucet, A., A. M. Johansen (2008). A tutorial on particle filtering and smoothing: fifteen years later.

[6] Johansen, A. M. (2009). SMCTC: Sequential Monte Carlo in C++. Journal of Statistical Software, 30(6).

[7] Rasmussen, C. E., Z. Ghahramani (2003). Bayesian Monte Carlo. In S. Becker and K. Obermayer (eds.), Advances in Neural Information Processing Systems, volume 15.

[8] Osborne, M. A., D. Duvenaud, R. Garnett, C. E. Rasmussen, S. J. Roberts, Z. Ghahramani. Active learning of model evidence using Bayesian quadrature.