Back in the day when the prison system forced inmates to perform "hard labor", folks would say (of someone in prison): "He's busy making little ones out of big ones." This evokes the cliché image of inmates who are chained together, forced to swing a chisel to break large rocks into smaller rocks. (Yes, it seems like a pointless chore. Here's a Johnny Cash/Tony Orlando collaboration that sets it to music.)

Back in the day when the prison system forced inmates to perform "hard labor", folks would say (of someone in prison): "He's busy making little ones out of big ones." This evokes the cliché image of inmates who are chained together, forced to swing a chisel to break large rocks into smaller rocks. (Yes, it seems like a pointless chore. Here's a Johnny Cash/Tony Orlando collaboration that sets it to music.)

SAS programmers are often asked to break large data sets into smaller ones. Conventional wisdom says that this is also a pointless chore, since you can usually achieve what you want (that is, process a certain subset of data) by applying a WHERE= option or FIRSTOBS=/OBS= combination. Splitting a data set creates more files, which occupy more disk space and forces more I/O operations. I/O and disk access is often the most expensive part of your SAS processing, performance-wise.

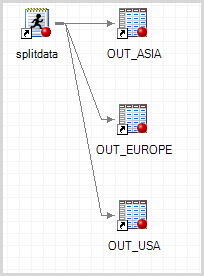

But if the boss asks for broken-up data sets, you might as well spend the least possible effort on the task. Let's suppose that you need to break up a single data set into many based on the value of one of the data columns. For example, if you need to break SASHELP.CARS into different tables based on the value of Origin, the SAS program would look like:

DATA out_Asia; set sashelp.cars(where=(origin='Asia')); run; DATA out_Europe; set sashelp.cars(where=(origin='Europe')); run; DATA out_USA; set sashelp.cars(where=(origin='USA')); run; |

I'm going to admit right now that this isn't the most efficient or elegant method, but it's something that most beginning SAS programmers could easily come up with.

Writing the above program is easy, especially since there are only 3 different values for Origin and I've memorized their values. But if there are more discrete values for the "split-by" column, the task could involve much more typing and has a high possibility for error. This is when I usually use PROC SQL to generate the code for me.

If you've read my article about implementing BY processing for an entire SAS program, you know that you can use PROC SQL and SELECT INTO to place data values from a data set into a macro variable. For example, consider this simple program:

proc sql; select distinct ORIGIN into :valList separated by ',' from SASHELP.CARS; quit; |

It creates a macro variable VALLIST that contains the comma-separated list: "Asia,Europe,USA".

But we can use SAS functions to embellish that output, and create additional code statements that weave the data values into SAS program logic. For example, we can use the CAT function to combine the values that we query from the data set with SAS keywords. The results are complete program statements, which can then be referenced/executed in a SAS macro program. I'll share my final program, and then I'll break it down a little bit for you. Here it is:

/* define which libname.member table, and by which column */ %let TABLE=sashelp.cars; %let COLUMN=origin; proc sql noprint; /* build a mini program for each value */ /* create a table with valid chars from data value */ select distinct cat("DATA out_",compress(&COLUMN.,,'kad'), "; set &TABLE.(where=(&COLUMN.='", &COLUMN., "')); run;") length=500 into :allsteps separated by ';' from &TABLE.; quit; /* macro that includes the program we just generated */ %macro runSteps; &allsteps.; %mend; /* and...run the macro when ready */ %runSteps; |

Here are the highlights from the PROC SQL portion of the program:

- SELECT DISTINCT ensures that the results include just one record for each unique value of the variable.

- The CAT function concatenates a set of string values together. Note that CATX and CATS and CATT -- other variations of this function -- will trim out white space from the various string elements. In this case I want to keep any blank characters that occur in the data values because we're using those values in an equality check.

- The program calculates a name for each output data set by using each data value as a suffix ("OUT_dataValue"). SAS data set names can contain only numbers and letters, so I use the COMPRESS function to purge any invalid characters from the data set name. The 'kad' options on COMPRESS tell it to keep only alpha and digit characters.

- The resulting program statements all end up in the &ALLSTEPS macro variable. I could just reference the &ALLSTEPS variable in the body of the SAS program, and SAS would run it as-is. Instead I chose to wrap it in the macro %runSteps. This makes it a little bit easier to control the scope and placement of the executable SAS program statements.

"By each value of a variable" is just one criterion that you might use for splitting a data set. I've seen cases where people want to split the data based on other rules, such as:

- Quantity of observations (split a 3-million-record table into 3 1-million-record tables)

- Rank or percentiles (based on some measure, put the top 20% in its own data set)

- Time span (break up a data set by year or month, assuming the data records contain a date or datetime variable)

With a small modification, my example program can be adapted to serve any of these purposes. What about you? Are you ever asked to split up SAS data sets, and if so, based on what criteria? Leave a comment and tell us about it.

52 Comments

Hey Chris,

Think it's a great technique you have shared, and I don't think it's as "pointless" as you seem to suggest.

Dataset splitting can be an I/O, cpu cycle, and disk space saving operation depending on the actual requirements of the project/task.

Using the example of the graphic provided at the head of the article, If, for example, the data subsets for Asia, Europe and USA are being provided to distinct groups/entities for their own intelligence/analysis then data splitting might be the efficient solution. If each group/entity intends to host it's own dataset, file transfer overhead and diskspace is certainly reduced in sending three subsets, compared to three complete copies of the total dataset.

Even if each entity isn't hosting its' own dataset, as the number of sub-setting where-clauses generated by each groups efforts grows, there's the potential for I/O and CPU costs to exceed the cost associated with the initial file-split.

Thanks,

Chris Amendola

Thanks for the insight, Chris. I agree that there are good use cases for splitting data, hence the proliferation of many %split macros in SAS Global Forum papers. The reasons that you cite are thoughtful and reflect what I've seen in practice.

please tell me chris where I have to run all that commands for splitting the big datasets ?

Moin, you can run the macro that I've presented here -- in your SAS environment - or select from a variety of other techniques covered in SAS Global Forum papers from years past.

I seem to remember a posting about dynamically naming the output table.... and found a reference citing Paul Dorfman aka hashman

see https://communities.sas.com/message/23522#23522

Of course we can find a cautionary note.... using a hash based method assumes the memory is available.

If I was tasked with a similar challenge and not enough resources use hash and not enough time to repeatedly read all that data I could find a way...... but no one needs it...

I am not a fan of taking one big dataset and slicing it into smaller pieces. Doing so creates a lot of clutter, and even worse tends to create orphans that don't receive updates when the original dataset is refreshed.

But sometimes it's necessary for various reasons. And when it is, this is a very good automated way of cranking them out.

However, an easy slight tweak to Chris H's code that would keep me happier, instead of spooling out a bunch of statements that resolve to

DATA out_Asia;

set sashelp.cars(where=(origin='Asia'));

run;

would be to: (1) first index the source table by the splitting variable/s, then

(2) spool out a bunch of clones of

DATA PROD.out_Asia / view=PROD.out_Asia ;

set sashelp.cars(where=(origin='Asia'));

run;

This functionally splits the master table for any users, but leaves each of the subsets dynamically connected to the master source table so they get updates, and also does not double-up on the physical storage space.

(Note that this may not be the best approach if ultimate performance is a requirement. Each subsequent read of one of these subsetting views into the Big Dataset will be marginally slower than a standard SAS "full-table-scan" of a smaller physical dataset, because the storage layout of SAS datasets and i/o in SAS are really optimized to do full-table-scans very efficiently, while grabbing records via an index imposes some additional processing and overhead.)

I would agree double storage of the data might not meet your optimization goals. However, there is a role for double storage and denormalized data.

Might I suggest, that the use of an index pre-suppose that values of ORIGIN have a high level of cardinality. If the ASIA comprised more than 15% of the data the index might likely be ignored in favor of a full-table scan. Thus only ORIGIN of low frequency would use the index.

I believe the theory goes that if a data page has, let say 100 observation, there is a good chance that an ASIA record might occur on that page. Thus using the index might end up reading all the page of the table. As such, between reading pages of the index and the pages that contain ASIA, the base enge would figure that it would be more efficient just to read through the entire table.

However, I would also agree with high cardinality... would one really want to have a table for each country potential 500? Depends on your optimization/denormalization goals.

Obviously, everyones mileage may vary. I might suggest a slight modification to your suggestion. For instance, maybe you do a count(*) by country and only create tables where count>100000, and create views where the count<100000. I might even go further to say only create tables where count>100000 and ORIGIN not in (&listOfLesserUsedCountries) and create views when not in that list.

You could rearrange it slightly and use one data step to produce many output data sets.

%let TABLE=sashelp.cars; %let COLUMN=origin; proc sql noprint; /* build a mini program for each value */ /* create a table with valid chars from data value */ select distinct cat("out_",compress(&COLUMN.,,'kad'),"(where=(&COLUMN.=",quote(&COLUMN.),"))") into :allsteps separated by ' ' from &TABLE.; quit; %put NOTE: &allsteps.; data &allsteps.; set &table; run;Thanks for sharing this improvement!

Hi John,

Any idea if my output are all empty?

Bowie, I suggest that if you're having trouble with the code and would like community help, post the question to the SAS Support Communities -- responses are always quick and helpful.

Hello, Chris.

I think your variant is not the best because you need to read the table sashelp.cars three times.

I think the better way is to split data in one read:

Nikolay,

Thank you for the comment. I agree with you, and others have pointed out similar changes that would result in one pass through the data. I was trying to keep this example simple as I really wanted to demonstrate how you can use PROC SQL to generate SAS programs based on your data values -- but I appreciate the many suggestions for improvements that I've seen here and on LinkedIn where I shared the example.

Does it really work

It really does!

Old thread but I read recently you can avoid end of file issues putting any subsetting IF before the SET line.

The preservation of blanks in the values of ORIGIN is not necessary. The code works fine with both CATT and CATS instead of CAT. To SAS, "Asia" = "Asia "

My previous comment did not translate well. The last sentence should have included trailing blanks within the quotation marks on the right side of the equals sign. The point is that SAS ignores trailing blanks when comparing two character values for equality as long as the non-blank characters are equal. That is why CATT works since it strips trailing blanks. Had there been leading blanks (which probably would not happen in this context), then CATS may not work since it strips both leading and trailing blanks.

Hi Chris,

To just add another variation I use quite often: Add the WHERE option to your target datasets instead of your Program Logic, as follows:

Data out_Asia (Where = (Origin = 'Asia')) out_Europe (Where = (Origin = 'Europe')) out_USA (Where = (Origin = 'USA')); Set SASHELP.Cars; Run;I had to quickly reduce a dataset to a 5% sample because of space restrictions, This could be adapted to split a dataset into smaller datasets. With thanks to the contributors on http://stackoverflow.com/questions/27683906/i-want-to-extract-only-last-two-numeric-values-from-a-string-variable-in-sas

data my_output (keep=myfield); SET my_input; Test_string=put(_N_,6.); temp1=compress(Test_string, , 'kd'); temp2=length(temp1); temp3=substr(temp1,temp2-1,2); final=substr(compress(Test_string, , 'kd'),length(compress(temp1))-1,2); if final = '00' or final = '20' or final = '40' or final = '60' or final = '80' then output; run;I like the ideas discussed here. I have a data set of 50K files in this case they are all .xls files. I have the logic to read the metadata they contain. It seems that my windows PC is choking on the volume. It burns up the sample sets of like 1-60 files, and does what I want in seconds. When I give it the full chore it has processed for over 3 hours and counting. In my case the observations are very random. For example if I want to cut them into 25 groups of 2K files each I think I need a counter to do what you are describing above. I am 100% sure for example my directories starting with the letter c have 40-60% of all my campus data and z does not exist. Thus a simple check of the first letter of the base dir cannot help. I don't see how to bucket the file system in a way like you describe without a “counter”. Do you have any ideas for a green horn SAS programmer? TIA -KJ

I just double checked its actually 58.7K xls files and for the fun of it I am processing another 19.4K total files from these ext (XLSX ACCDB MDB SAS7BDAT DBF SAV) for metadata but this sub set is the only one that is not finishing in a reasonable time, 30 minutes or less. -KJ

data My_list_of_files;

set list_of_all_xls;

Path_File2=Path_File;

bucket=MOD(_n_, 58);

if bucket=0 then

do;

bucket=58;

end;

run;

/*I forgot about how valuable mod arithmetic was for things like this, I now have a generally even way to bucket them into about 1K file sets, of course now that I found _n_ I could have just as easily made an actual counter field too*/

Looks like you figured it out -- great job!

Chris,

When I use your code against my data set I get the distinct items 1-58 in my list

when I go on to declare your two lets, with my data set again, and my field just fine, however on the second proc sql I get this error:

ERROR: Function COMPRESS requires a character expression as argument 1.

My output from the first proc sql is all numbers. I tried it without the compress it still didn't work for me...

I then get no error on the second SQL but errors with each running of the macro like this:

MPRINT(RUNSTEPS): ;

MPRINT(RUNSTEPS): DATA out_9;

MPRINT(RUNSTEPS): set list_of_all_clean_temp(where=(bucket='9'));

ERROR: WHERE clause operator requires compatible variables.

MPRINT(RUNSTEPS): run;

NOTE: The SAS System stopped processing this step because of errors.

WARNING: The data set WORK.OUT_9 may be incomplete. When this step was stopped there were 0 observations and 0 variables.

WARNING: Data set WORK.OUT_9 was not replaced because this step was stopped.

NOTE: DATA statement used (Total process time):

real time 0.01 seconds

cpu time 0.01 seconds

Any ideas? TIA -KJ

Is the 'bucket' variable a character type or number? The WHERE= error seems to indicate it's a number, though it's enclosed in quotes in the code (treated as a char).

number.

It looks like the fix for type number is to drop the first single quote from each of these two lines.

/*this for character type*/

"; set &TABLE.(where=(&COLUMN.='", &COLUMN.,

"')); run;") into :allsteps separated by ';'

*becomes - - - >;

/*this for number type*/

"; set &TABLE.(where=(&COLUMN.=", &COLUMN. ,

")); run;") into :allsteps separated by ';'

Sorry that was a bit silly but got it now. -KJ

Glad you got it. I guess you ran into that because your "split" var was a number column, not a char. Welcome to the world of adapting examples!

Thank you Chris, you saved me about 100 lines of code and I now have much more robust program. -KJ

Pingback: Your favorite SAS posts from 2015 - SAS Voices

Hi Chris,

Thank you for sharing the code! I used the revised code John King made (Posted February 4, 2015 at 7:51 am) and it really gets at the heart of what I want. With that said, I was wondering if you know how to incorporate a second column into the code.

For example, I am working with administrative health claims data and I want to split the master dataset by each region (I have 13 regions in all). However, I also want to split each region by the different years of data I have (2009-2015). So in the end, I want smaller datasets for each region by year (i.e. out_7_2009, out_7_2010, etc...).

Do you have any suggestions on how to do this?

Thanks for your assistance!!

Hi,

Excuse me if I ask silly questions (I'm new as a SAS user) but how come &COLUMN. is sometime resolve as Asia, Europe etc. and sometime resolve as the word origin? Can you explain me the differences?

Hi Stéphanie, in this SAS macro program the macro variable represents the name of the column ("origin") -- but as the DATA step runs the value of that column in each observation is checked -- and that's where Asia, Europe, USA come in. It's two levels of indirection: the value in the record, referenced by the name of the column, referenced by the macro variable &COLUMN.

Chris,

Thank you for the code. I had to specify length=1024 as I ran into the CAT 200 char warning. Think it had to do with my naming convention. Sorry if I am not clear. Only started using SAS again for 2 weeks after about 2 years so still little rusty.

Welcome back! Glad this helped.

Great post. I'm quite new at sas.

I think your way of doing this Chris H. is great cause the user don't have to anything manually and have to know all the distinct value of the variable (in this case origin), so it works on all datasets and variables.

My problem is, that I would like the output to be out_1, out_2, ... , out_n so I can handle all the outputs the same way in a macro which loop over the datasets out_&i.

Andreas, for that you probably need an approach that's more like my "BY grouping" tip -- use PROC SQL SELECT INTO to determine the number and names of the distinct values, and then use the number (index) as part of the data set name, while using the value as part of the filtering criteria.

Hi Chris,

where can I specify output library? Because now are all datasets in WORK.

Thank you

Easy change -- just add the libname you need to the DATA statement in the program:

select distinct cat("DATA LIBNAME.out_",compress(&COLUMN.,,'kad'), "; set &TABLE.(where=(&COLUMN.='", &COLUMN., "')); run;") length=500 into :allsteps separated by ';' from &TABLE.;Hello,

I am attempting to use your code to split up my dataset by month and year (Ex. January 2017, February 2017, etc.). I have a date column in my data, but I am having a bit of trouble tweaking your code to achieve my desired result. Any help would be appreciated!

You can achieve this by comparing FORMATTED values and applying a Month-Year format in the comparison. The code is a bit klunkier, but here's something that works.

can you explain how can I create multiple data set without using fun. suppose there is no extra digit and spaces in column name so I. e. we are assuming only.

because I am getting confuse with fun behaviour here. so I can get idea. how to implement. without using fun.

I'm don't understand the question -- or what you mean by "fun" (function?) Do mean macro functions? Certainly there are other ways -- some in the comments and you can find more by asking at our SAS Support Communities.

SOooo Good, Thank You

This is very useful, how to include 2 variable split for example %let COLUMN1=date; and %let COLUMN2=origin;

Hanika, a little more complex. One approach is to use PROC FREQ to generate all of the combinations of the two values, and then use that data to generate WHERE clauses for each target data set.

Thanks Chris, will try it! :)

Pingback: Splitting a data set into smaller data sets - SAS Users

Chris, this is amazing and thank you! Do you have code that will export the resulting datasets to excel? Thanks Again!

Hi Eva,

You could use PROC EXPORT for each resulting data set to add to an XLSX, and use the SHEET= option to add each data set to its own sheet in the workbook. You can run PROC EXPORT multiple times for the same XLSX file, and it will modify things at the sheet level.

Thanks for the response! I am splitting my datasets into several workbooks each with about 5 tabs. I have tried to adjust the export code in a similar manner, but each export overwrites the previous export. Hope I am making sense. I have 5 datasets I split out the way you indicate above and then try to split them out in a similar manner with PROC EXPORT. I can't figure out why the sheets are overwriting.

Also, on another note...what does the 'kad' mean that you put after the &column macro?

Thank you again!!

/*Export*/

proc sql;

/* build a mini program for each value */

/* create a table with valid chars from data value */

select distinct

cat('PROC EXPORT DATA=DELQ_', compress(&COLUMN.,,'kad'),

' FILE = ', '"~/shared_data/120day/avn/&USER_LC./export_folder/Delq_Notice_Rpt_',

compress(&COLUMN.,,'kad'),'_YYYY-MM.XLSX"', ' DBMS=XLSX REPLACE'),

cat("; SHEET = ", '"OPEN_PO_AND_DELQ"', "; RUN;")

into :prcexprt separated by ';'

from &TABLE.;

quit;

/* macro that includes the program we just generated */

%macro runsteps;

&prcexprt.;

%mend;

/* and...run the macro when ready */

%runSteps;

I think the thing that's getting you is the REPLACE in your PROC EXPORT. You might need to check (and delete) for the XLSX file before you begin, and then use this process to run PROC EXPORT for each value, adding each sheet as it goes.

The 'kad' Keeps just the Alpha and Decimal characters in the name, removing special characters that can't be in a SAS file name.