It suddenly seems as if artificial intelligence (AI) is all around us. It is already having a huge impact in a wide range of sectors, including health care. We are now starting to see it move into the manufacturing sector as an important part of Industry 4.0, the digitisation of manufacturing and digital transformation.

Use cases

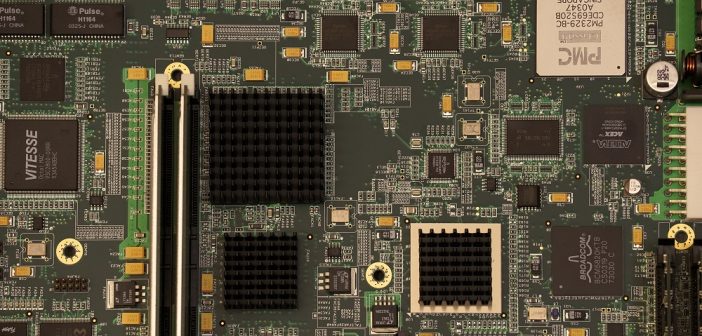

For example, an innovative AI-based quality control model has been developed at a microchip manufacturing facility in Taiwan. The microchip industry is the backbone of the digital age. In the digital printing of components, one failure in a billion operations does not sound like a bad statistic. When you consider, however, that factories are running up to 50 million operations per second, it becomes clear that this low-sounding failure rate actually equates to one defect every 20 seconds.
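The arithmetic behind that figure can be checked in a few lines. This is only a sketch using the two numbers quoted above; the per-day total is derived from them and is not a figure reported by the factory.

```python
# Figures quoted in the text: one failure per billion operations,
# and up to 50 million operations per second.
operations_per_failure = 1_000_000_000
operations_per_second = 50_000_000

# Time needed to accumulate one billion operations, i.e. one expected defect.
seconds_per_defect = operations_per_failure / operations_per_second

# Derived figure (not from the source): expected defects over 24 hours.
seconds_per_day = 24 * 60 * 60
defects_per_day = seconds_per_day / seconds_per_defect

print(f"One defect every {seconds_per_defect:.0f} seconds")
print(f"Roughly {defects_per_day:.0f} defects per day")
```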

With that kind of volume, it is clearly not going to be possible for anyone to examine every microchip to check for production defects. Enter AI, which provides two techniques that can be used to monitor the quality of the products: image recognition and the IoT with edge analytics. Together these techniques analyse whether microchips contain anomalies or peculiarities that make them defective or noncompliant. Preventive analysis of streaming sensor data and of images of the microchips plays a key role in finding possible defects and removing those microchips so they are not passed on to customers.

Understanding the process

The process starts by training the machine to identify a good “wafer.” Wafers are thin slices of semiconductor material used in electronics for the manufacture of integrated circuits. Each wafer is defined by over 90,000 measurements of its surface. A good-quality wafer has a smooth, uniform surface, so its pixel values are the same across the whole surface. Deviations from those baseline pixel values are caused by holes and peaks that make the surface uneven, which results in poor wafer quality.
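As a rough illustration of the idea, the sketch below treats a wafer as a small grid of pixel values and flags every measurement that strays too far from the typical value. The function name `find_surface_deviations`, the use of the median as the baseline, the tolerance and the 3x3 grids are all assumptions made for this example; the factory's actual model is not described in that detail.

```python
from statistics import median

def find_surface_deviations(surface, tolerance):
    """Return (row, column, value) for each measurement far from the baseline.

    The baseline is taken to be the median pixel value (an assumption),
    so a few holes or peaks do not shift it much.
    """
    baseline = median(value for row in surface for value in row)
    return [
        (r, c, value)
        for r, row in enumerate(surface)
        for c, value in enumerate(row)
        if abs(value - baseline) > tolerance
    ]

# Toy 3x3 "wafers"; a real wafer has over 90,000 measurements.
good_wafer = [[100, 100, 101], [100, 99, 100], [100, 100, 100]]
bad_wafer = [[100, 100, 101], [100, 62, 100], [100, 100, 137]]

print(find_surface_deviations(good_wafer, tolerance=5))
print(find_surface_deviations(bad_wafer, tolerance=5))
```

On the second grid, the hole (62) and the peak (137) are the only values reported.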

Using image processing, 40 types of defects were identified in the factory. Once the AI system knows what types of defects exist, it can use pattern-detection techniques to match new wafers to defect types and automatically detect faulty wafers. This allows the immediate (real-time) identification of small defects in wafers, many of which would not be detectable by the human eye but would lead to problems in use.
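One simple way to picture matching a wafer to a known defect type is template matching: compare the wafer's map of deviating cells with a stored pattern for each defect and pick the closest one. The sketch below does this on tiny 3x3 maps. The three defect names, the templates and the `max_mismatch` cut-off are invented for illustration, and a production system would more likely use a trained image-recognition model than hand-written templates.

```python
# Hypothetical defect templates: "#" marks a deviating cell, "." a normal one.
DEFECT_TEMPLATES = {
    "scratch": ("...", "###", "..."),
    "pit": ("...", ".#.", "..."),
    "edge_ring": ("###", "#.#", "###"),
}

def mismatch(map_a, map_b):
    """Count the cells in which two deviation maps differ."""
    return sum(
        cell_a != cell_b
        for row_a, row_b in zip(map_a, map_b)
        for cell_a, cell_b in zip(row_a, row_b)
    )

def classify(deviation_map, max_mismatch=2):
    """Return the closest defect type, 'good', or 'unknown'."""
    if all(cell == "." for row in deviation_map for cell in row):
        return "good"
    best_type, best_score = min(
        ((name, mismatch(deviation_map, template))
         for name, template in DEFECT_TEMPLATES.items()),
        key=lambda item: item[1],
    )
    return best_type if best_score <= max_mismatch else "unknown"

print(classify(("...", "...", "...")))
print(classify(("#..", "###", "...")))
print(classify(("#.#", "...", ".#.")))
```

The "unknown" outcome matters in practice: a wafer that deviates but resembles none of the known patterns may be a new defect type worth sending for closer inspection.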

This approach, however, is basically reactive: there is a problem, the system detects it, and it can be put right. When this technique is combined with edge analytics, new options open up. Edge analytics is the use of analytics at the source, in this case the production machines. We can use machine learning models to detect anomalies inside those machines and correct them on the spot. This is particularly helpful in industries with enormous amounts of data, such as microchip manufacturing. The data is analysed as soon as it is produced, and only the information about anomalies needs to be retained and analysed further. The rest can be discarded, which reduces the data storage capacity required.
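The "analyse at the source, keep only the anomalies" pattern can be sketched as a small streaming filter. The example below keeps a running mean and standard deviation of a sensor reading (Welford's online method) and stores a reading only when it lies more than a set number of standard deviations from the mean. The class name `EdgeAnomalyFilter`, the threshold, the warm-up length and the sample readings are assumptions for illustration; the article does not say which model runs on the production machines.

```python
import math

class EdgeAnomalyFilter:
    """Flag unusual sensor readings on the device and retain only those."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold  # allowed distance from the mean, in std devs
        self.warmup = warmup        # readings to absorb before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations
        self.retained = []          # the only readings that are stored

    def observe(self, reading):
        """Process one reading; return True if it is treated as an anomaly."""
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(reading - self.mean) > self.threshold * std:
                self.retained.append(reading)
                return True
        # Normal reading: update the running statistics, then discard it.
        self.count += 1
        delta = reading - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (reading - self.mean)
        return False

# Invented temperature-like readings with one obvious spike.
readings = [20.0, 20.1, 19.9, 20.2, 19.8, 20.0, 20.1,
            19.9, 20.0, 20.1, 20.0, 35.0, 19.9, 20.1]

edge_filter = EdgeAnomalyFilter()
flags = [edge_filter.observe(r) for r in readings]
print(edge_filter.retained)
```

Of the fourteen readings, only the spike is kept; the other thirteen update the statistics and are then dropped, which is where the storage saving comes from.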

Together, these two AI techniques, image recognition and IoT sensors coupled with edge analytics, make it possible to move from reactive to proactive detection of production defects. This gives greater control over production and increases the quality of products.

The cost of (poor) quality

Achieving quality through the use of AI and advanced analytics is potentially expensive, in both money and time. Manufacturers are concerned about the cost of establishing this type of intelligent system. Studies show, however, that most companies spend 6 percent of sales revenue on scrap and rework. It can be up to five times more expensive to solve a problem once the product has left the factory. What’s more, failure to address quality problems puts companies at risk of losing sales and damaging their brand reputation. The cost of poor quality for an average company is around 20 percent of sales.
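To put those percentages in concrete terms, the short calculation below applies them to a hypothetical company. The annual sales figure of 100 million is invented for the example; the 6 and 20 percent rates are the ones quoted above.

```python
# Hypothetical annual sales, in any currency (an assumption, not from the source).
annual_sales = 100_000_000

# Rates quoted in the text.
SCRAP_AND_REWORK_PERCENT = 6
POOR_QUALITY_PERCENT = 20

scrap_and_rework_cost = annual_sales * SCRAP_AND_REWORK_PERCENT // 100
poor_quality_cost = annual_sales * POOR_QUALITY_PERCENT // 100

print(f"Scrap and rework: {scrap_and_rework_cost:,}")
print(f"Total cost of poor quality: {poor_quality_cost:,}")
```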

A cost-benefit balance

In other words, the cost of poor quality is likely to be significantly greater than the upfront cost of the systems to improve quality. The benefits of using AI systems in this way are likely to hugely outweigh the costs of their implementation, both in time and money. It therefore seems highly likely that AI will play an increasingly large role in production processes across the manufacturing sector and around the world, allowing better efficiency and higher quality of products.