Considering the growing amount of data available and the rise of edge computing, we can expect rapid growth in AI and decision-making on the edge (addressed in AI on the edge will kick IoT market adoption, not 5G). All of this raises the question of how we can cope with the speed at which AI is being adopted everywhere and still guarantee that the decisions taken, suggested or implied by AI models/algorithms respect human values and comply with the ethics and principles underlying the domain in which those algorithms operate.

How can we, as a society, be sure that AI is used ethically?

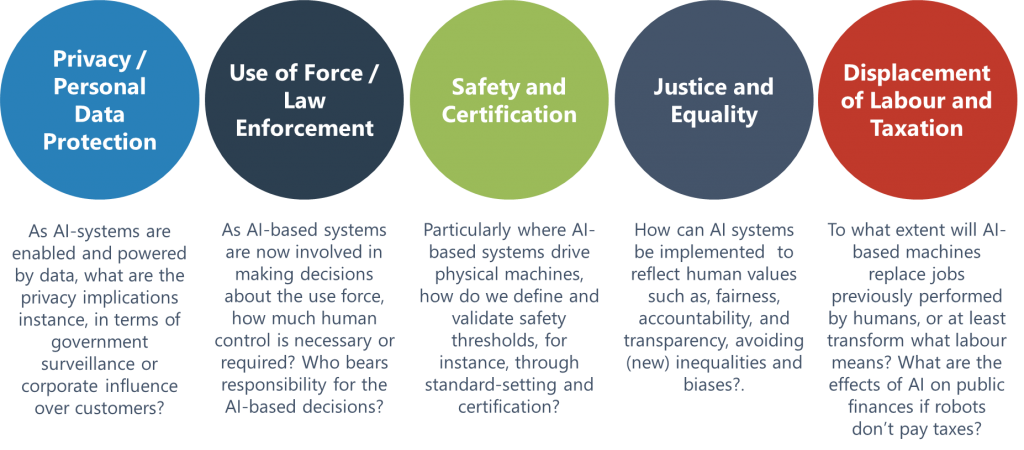

The mass use of AI, especially towards the edge (AI@Edge), will touch on core issues and questions where AI applications either lead to new challenges or amplify pre-existing concerns and pressure points.

So being sure that AI is used ethically may well be a utopia. However, in my opinion, we must put in place all relevant mechanisms to test, monitor and validate the entire life cycle of any AI model deployment that has a direct or indirect impact on our daily lives (whether derived directly from its decisions or as a consequence of them).

Data ethics framework

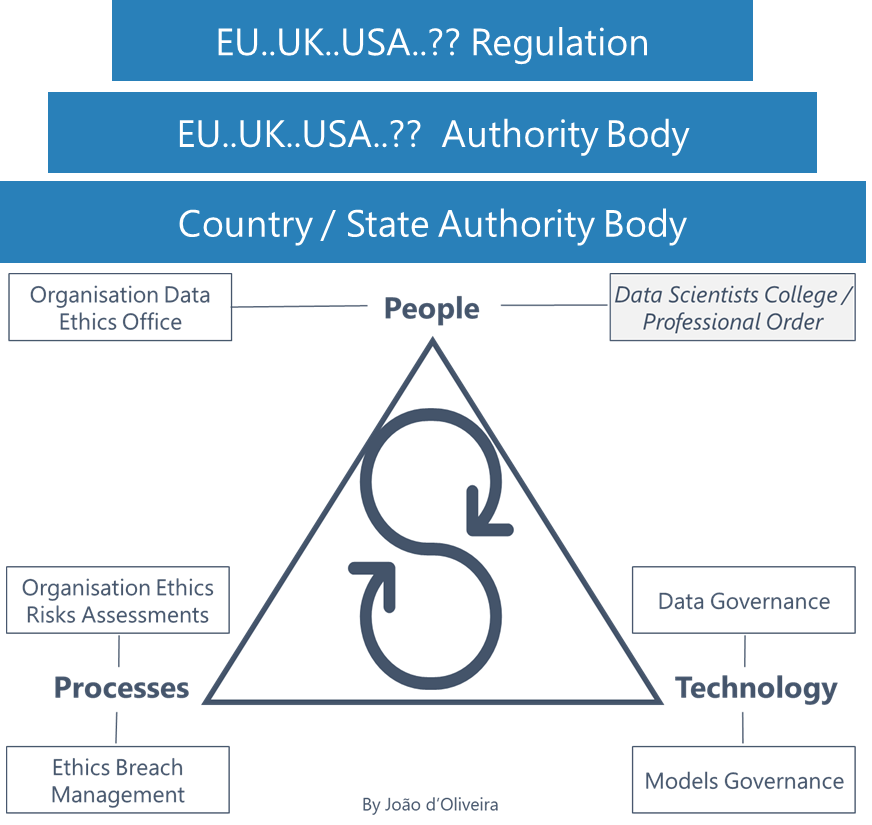

Having a proper AI ethics practice goes far beyond technology. It involves people, processes and technology, and only works when this triangle becomes an infinite loop: technology implemented in a governed way, through auditable processes, in which people are fully engaged.

Thus, my view and proposal is that we as a society should implement a framework with two major aspects, the organisational and the legal/regulatory:

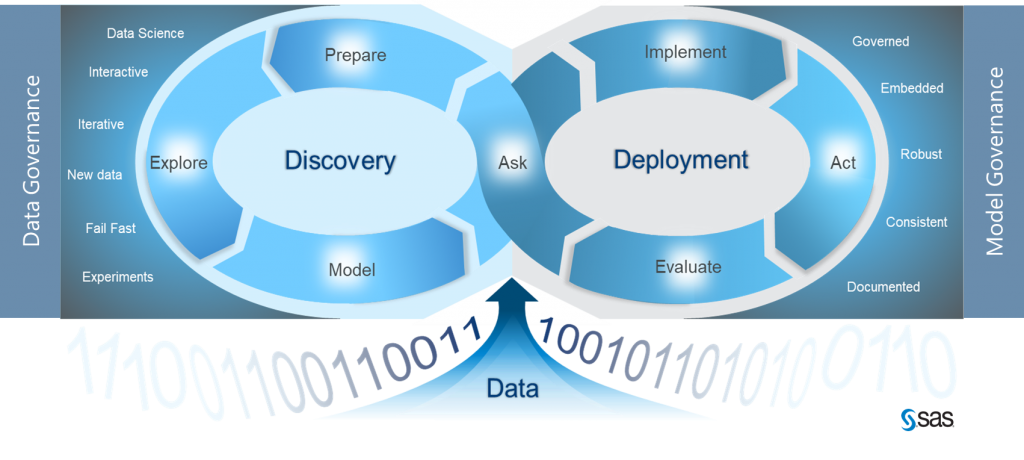

- Data governance and AI model governance are key and foundational for proper AI ethics; therefore, these must be in place to support the practice of governing the entire AI life cycle.

- Data science should be a regulated profession with a code of professional conduct, presupposing a rigorous evaluation of data scientists that covers not only their professional capabilities but also their psychological readiness. All professionals would then be accountable and potentially liable for misuse or bad behaviour.

- All organisations should set up a data ethics office responsible for designing and implementing ethics risk assessments covering the idea, concept and process design phases, development, testing (especially how the samples for training the models were obtained), deployment in production and execution audits (as highlighted in Is Big Data a Big Ethics Problem). These assessments, as part of a regulatory mandate (having force of law), must be auditable, and noncompliance should be heavily penalised.

- Overarching authority body and regulation. The data science regulatory framework could well mimic the architecture of the European Union’s GDPR framework, meaning the EU drafts and approves the overall regulation. An EU authority would oversee the regulation’s implementation in each member state, each of which must have a national regulatory authority that follows the provisions of the overarching regulation. In the first instance it would be up to the national authority to ensure lawful implementation; handle inspections, validation and conflicts; pursue lawsuits over possible ethical violations; cooperate with the national college of data scientists or ethical committee for data science; and report to the overarching EU authority. The role of the overarching authority would be to manage conflicts involving multinational organisations, act as a body of appeal for final decisions and the enforcement of penalties, and cooperate with peers from other geographies.

So an AI data ethics violation would first be assessed internally by the organisation’s data ethics office, then, if warranted, reported to the college of data scientists/ethical committee for data science and, ultimately, to the regulatory authority.

Making AI models/algorithms validation and impact assessment work at scale

The mass use of AI also means that the volume of AI-driven applications, mainly running at the edge, will be so large that validating them must be a primary responsibility of the organisations producing them (for example, by incorporating decision rules based on human values into those models). But even with the framework in place, THE question is:

How is it possible to validate and assess ethics compliance and impacts of AI models/algorithms in a manner that will not block innovation and business/economic activity?

The answer is automation. Validating AI ethics at scale isn’t possible without automating the processes. In my opinion, the solution involves implementing a system that combines AI contextual models and decision management rules, taking as minimum inputs the following from each model subject to assessment:

- The purposes, goals, objectives, etc., as well as the outcomes/decisions they trigger.

- The criteria used for selecting the data samples used for development, test and pre-production.

- If the model incorporates decision rules based on human and/or social values, those should also be provided.

- The model execution audit logs (at least, test and pre-production).

With the above as parameters, the AI ethics assessment system will check the model-triggered decisions and their social impacts against a hierarchy of social values, starting with core human values. Models should pass based on their benefit to society overall, their impact on the community and so forth. As a result, we would have an understandable explanation for people of why the decisions triggered by the AI models were considered socially fair.
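To make the idea more concrete, here is a minimal sketch in Python of how such an assessment could be wired together. Everything in it is an assumption for illustration only: the `Submission`, `Decision`, `ValueRule` and `Report` types, the `assess` function, the two example value rules and the sample audit log are all hypothetical and made up, and a real system would need far richer contextual models than simple per-decision checks.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Decision:
    """One entry from a model's execution audit log (hypothetical schema)."""
    subject_id: str
    outcome: str
    attributes: dict


@dataclass
class Submission:
    """The minimum inputs listed above, as handed over by the model owner."""
    purpose: str
    sampling_criteria: str
    embedded_value_rules: list[str]
    audit_log: list[Decision]


@dataclass
class ValueRule:
    """A social value expressed as a check on a single logged decision."""
    name: str
    priority: int  # lower number = closer to core human values
    check: Callable[[Decision], bool]  # True when the decision respects the value


@dataclass
class Report:
    passed: bool
    explanations: list[str] = field(default_factory=list)


def assess(submission: Submission, hierarchy: list[ValueRule]) -> Report:
    """Check every logged decision against the value hierarchy, core values first."""
    report = Report(passed=True)
    for rule in sorted(hierarchy, key=lambda r: r.priority):
        violations = [d for d in submission.audit_log if not rule.check(d)]
        if violations:
            report.passed = False
            ids = ", ".join(d.subject_id for d in violations)
            report.explanations.append(f"'{rule.name}' violated for: {ids}")
        else:
            total = len(submission.audit_log)
            report.explanations.append(
                f"'{rule.name}' respected in all {total} logged decisions"
            )
    return report


# Illustrative (made-up) hierarchy and submission.
hierarchy = [
    ValueRule(
        "no use of protected attributes", 0,
        lambda d: not d.attributes.get("used_protected_attribute", False),
    ),
    ValueRule(
        "adverse outcomes carry a reason", 1,
        lambda d: d.outcome != "deny" or "reason" in d.attributes,
    ),
]
submission = Submission(
    purpose="loan pre-screening",
    sampling_criteria="stratified by region and age band",
    embedded_value_rules=["never deny without a stated reason"],
    audit_log=[
        Decision("a-1", "approve", {}),
        Decision("a-2", "deny", {"reason": "insufficient income"}),
        Decision("a-3", "deny", {}),
    ],
)
report = assess(submission, hierarchy)
for line in report.explanations:
    print(line)
```

The point of the sketch is the shape of the output rather than the rules themselves: each value in the hierarchy produces a plain-language line, so a failed assessment comes with a readable reason and a passed one comes with an explanation of why the decisions were considered fair.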