My Brush With Glory

The 2012 Summer [quadrennial international sporting event that isn't the World Cup] in London is just around the corner, and it's sure to be an exciting time for all. I had the good fortune to be living in the great state of Utah during the 2002 Winter [quadrennial international sporting event that isn't the World Cup] in Salt Lake City, and it was a thrill being surrounded by all the international visitors and attending the events.

I spent six years in Utah and loved it. Utah is a friendly and kind-spirited place, where the fresh air, sunshine, and beautiful scenery make all the world's evils seem not quite so bad. But don't be naive -- there were still pockets of evil.

We heard rumors of a terrible plot uncovered just prior to the 2002 games. Apparently the militant wing of the Mormon Tabernacle Choir was planning to disrupt the opening ceremonies by purposely singing out of tune.

Oh my heck, I'm sure glad that was prevented!

Security during the event was understandably tight. At one point I was asked to remove my shoes so they could be "sniffed" for explosives. And this wasn't even at the airport or an event venue; it was by a shabbily dressed gentleman who approached me in the city park.*

Forecasting Medals

Forecasting the outcome of sporting events is not just of interest to gamblers, but to a fair number of academics as well. In 2010 the venerable International Journal of Forecasting dedicated an entire issue (Vol. 26, #3) to Sports Forecasting.

I asked myself: Why are we wasting the world's best forecasting minds on such trivial matters? Shouldn't our research be focused on real-world problems of importance, like forecasting the need for medical services in impoverished countries, or who will win the Oscars?

What we learn from this issue of IJF is that the time-series methods we are accustomed to in business forecasting are of little interest or use to sports forecasters. They aren't trying to forecast weekly demand for potato chips in order to plan production and inventory. Instead, they are attempting to forecast the winner of a match (between two contestants or teams) or race (with multiple contestants).

The particular metrics used to evaluate sports forecasting can differ from the usual metrics of business forecasting as well. For example, an article on forecasting medal totals by nation reported the R² between actual and predicted medals. However, Scott Armstrong warns explicitly in Principles of Forecasting that:

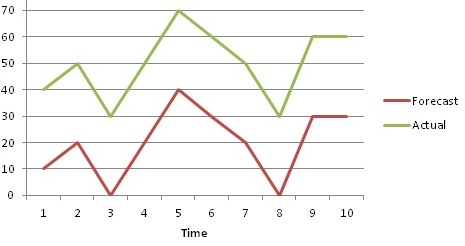

R² should not be used for time-series forecasting... For one thing, it overlooks bias in the forecasts. A model can have perfect R², yet the values of the forecasts could be substantially different from the [actual] values... (p. 457, illustration reconstructed below)

So we would not use R² to evaluate our weekly potato chip forecasts. (Read more from Scott and others on the Forecasting Principles website.)
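To put numbers on Armstrong's point, here is a minimal Python sketch (not taken from the book or the medals article). It assumes R² is computed as the squared correlation between actuals and forecasts, and it uses made-up weekly potato chip sales. A forecast that is always 50 cases too high still earns a perfect R², while a simple bias measure and the mean absolute error expose the problem immediately.

```python
# Sketch: R² (squared Pearson correlation of actuals vs. forecasts) is blind to bias.
# All sales figures are hypothetical.

def r_squared(actual, forecast):
    """Squared Pearson correlation between actuals and forecasts."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_f = sum(forecast) / n
    cov = sum((a - mean_a) * (f - mean_f) for a, f in zip(actual, forecast))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_f = sum((f - mean_f) ** 2 for f in forecast)
    return cov ** 2 / (var_a * var_f)


def mean_error(actual, forecast):
    """Average of (forecast - actual); a nonzero value signals bias."""
    return sum(f - a for a, f in zip(actual, forecast)) / len(actual)


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(f - a) for a, f in zip(actual, forecast)) / len(actual)


# Hypothetical weekly sales (cases).
actual = [100, 120, 90, 110, 130]
# Every forecast is exactly 50 cases too high.
forecast = [a + 50 for a in actual]

print(r_squared(actual, forecast), mean_error(actual, forecast), mae(actual, forecast))
# 1.0 50.0 50.0
```

The forecasts move in perfect lockstep with the actuals, so the correlation is flawless, yet every single week is off by 50 cases.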

Another area of difference is the naive forecast for a sports event. As the ultimate point of comparison, it is proper to ask how well our forecasting method performs compared to doing nothing and simply using the naive forecast. (A naive forecast is at the heart of Forecast Value Added (FVA) analysis. See more discussion of this in "The Science of Forecasting" on the Institute of Business Forecasting blog.)

In our business forecasting potato chip example, a naive forecast for this week's sales would be whatever we sold last week (this is known as the random walk or "no change" model). We don't need a fancy system or process to create the naive forecast, so any investment in forecasting had sure as heck better outperform the naive model, or we are wasting our resources!
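Here is a minimal Python sketch of that comparison, using made-up weekly sales and made-up model forecasts. It measures error with mean absolute error (MAE) and reports FVA as the naive forecast's MAE minus the model's MAE. Using MAE is an assumption for this illustration; the same comparison works with MAPE or another error metric. A positive FVA means the model beat the naive forecast.

```python
# Sketch: compare a model against the random walk ("no change") forecast.
# All numbers are hypothetical.

def naive_forecasts(sales):
    """Random walk: the forecast for week t is the actual from week t-1.

    Returns forecasts for weeks 2..n (week 1 has no prior actual).
    """
    return sales[:-1]


def mae(actual, forecast):
    """Mean absolute error over paired actuals and forecasts."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


# Hypothetical weekly potato chip sales (cases), weeks 1..6.
sales = [100, 120, 90, 110, 130, 105]
# Hypothetical model forecasts for weeks 2..6.
model = [112, 101, 103, 118, 117]

actuals = sales[1:]  # weeks 2..6, the weeks both methods forecast
naive_mae = mae(actuals, naive_forecasts(sales))
model_mae = mae(actuals, model)
fva = naive_mae - model_mae  # positive: the model beats the naive forecast

print(naive_mae, model_mae, fva)  # 23.0 10.0 13.0
```

With these illustrative numbers, the model cuts average absolute error from 23 cases to 10 cases per week. Had FVA come out negative, the forecasting process would be doing worse than doing nothing.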

The sports forecasting models tended to be evaluated against the betting market. Would a betting strategy based on the method be profitable? Perhaps the closest thing to a random walk model would be to pick your winner in a 2-contestant match based on who won the last time they competed. (The naive model for a multi-contestant race is less clear.)
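The "who won last time" rule, plus a crude profitability check, can be sketched in a few lines of Python. This is a hypothetical illustration, not a method from the IJF issue: the `Match` record, the decimal odds, the flat 1-unit stake, and all the results are made up. Pairs meeting for the first time get no pick, and the record of past winners is updated only after each match has been forecast, so the rule never peeks at the result it is predicting.

```python
# Sketch: naive "last winner" pick for two-contestant matches,
# evaluated with a flat 1-unit bet at hypothetical decimal odds.
from dataclasses import dataclass


@dataclass
class Match:
    player1: str
    player2: str
    winner: str
    odds: dict[str, float]  # hypothetical decimal odds offered on each contestant


def evaluate_naive(matches):
    """Pick the winner of the pair's previous meeting and bet 1 unit on each pick.

    Returns (number of picks, number correct, total profit in units).
    Matches with no previous meeting get no pick and no bet.
    """
    last_winner = {}  # frozenset({x, y}) -> winner of their most recent meeting
    picks = correct = 0
    profit = 0.0
    for m in matches:
        pair = frozenset((m.player1, m.player2))
        if pair in last_winner:
            pick = last_winner[pair]
            picks += 1
            if pick == m.winner:
                correct += 1
                profit += m.odds[pick] - 1.0  # net return on a winning 1-unit stake
            else:
                profit -= 1.0  # stake lost
        last_winner[pair] = m.winner  # update only after forecasting this match
    return picks, correct, profit


matches = [
    Match("A", "B", "A", {"A": 1.75, "B": 2.25}),  # first meeting: no pick
    Match("A", "B", "A", {"A": 1.5, "B": 2.5}),    # pick A, wins: +0.5
    Match("B", "A", "B", {"A": 1.5, "B": 2.5}),    # pick A, loses: -1
    Match("A", "B", "B", {"A": 2.0, "B": 1.75}),   # pick B, wins: +0.75
    Match("C", "D", "D", {"C": 1.25, "D": 3.0}),   # first meeting: no pick
    Match("C", "D", "D", {"C": 2.5, "D": 1.5}),    # pick D, wins: +0.5
]
picks, correct, profit = evaluate_naive(matches)
print(picks, correct, profit)  # 4 3 0.75
```

On this toy data the rule picks 3 winners in 4 tries and finishes 0.75 units ahead; a real evaluation would need many more matches before saying anything about profitability.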

Overall, there may not be a lot to learn from sports forecasting that can be applied to our standard business forecasting challenges. As the editors of this special issue stated, the value of these proposed methods lies NOT in profitable betting strategies (many appeared unlikely to be profitable), but in the development of new techniques that may someday improve forecasting.

Find related discussion of the analytics behind selecting the NCAA Basketball Tournament teams in "Analytics and the NCAA Dance Card - A method to the madness."

----------------------------

*I've now come to suspect that he wasn't a security official at all, but just a lonely guy with a foot fetish.

3 Comments

Mike, per this comment: "Overall, there may not be a lot to learn from sports forecasting that can be applied to our standard business forecasting challenges": as you look at the betting markets, couldn't those be compared to Prediction Markets that can be used today in std biz forecasting? Studies have shown that the chance to "win" and to insert extrinsic qualitative judgment into the forecast (as you would suspect is done in sports betting) can improve forecast quality. Perhaps the learnings would be separating the purely emotional and "superstitious" or loyalty-based elements from the sports betting, or selecting the most successful sports betting patterns to analyze...

Emily, I think you are correct -- Prediction Markets are a good analogy. Although not yet widely adopted, and definitely not for large-scale automated forecasting applications, PMs have a place in business forecasting. It will be interesting to follow their advance as more companies try them.

Pingback: The Objectives of Forecasting: Narrow and Broad - The Business Forecasting Deal