Webinar October 4, 1:10 pm ET: What is Your Product Forecastability???

Thanks to Rich Gendon and the Chicago APICS chapter for hosting me last week at their professional development dinner meeting. I always enjoy evening speaking gigs, as they provide a chance to break out some of my nightclub-worthy material. (With a street-hardened Chicago audience, you know the G-rated stuff is not going to work.) Without having to go too blue, we had a good discussion on why forecasts are wrong, worst practices, and forecast value added analysis. And I wasn't arrested.

Rich has invited me back to give a follow-up webinar next Tuesday, October 4 (1:10 pm ET), delving more into the evaluation of a product's "forecastability," and how FVA concepts can be applied to forecasting performance objectives. Please join us by registering for the APICS-Chicago Lunchtime Webinar.

Yes, the webinar does start at 1:10 ET, and will end by 1:55.

A Different Twist on the Value of a Statistical Forecast

FVA is defined as the change in a forecasting metric that can be attributed to a particular step or participant in the forecasting process. So the "value" of a process activity is the amount of improvement in the forecast due to that activity. (Of course, there can always be negative value added, when the activity makes the forecast worse!)
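To make the definition concrete, here is a minimal sketch of the arithmetic in Python. It assumes MAPE as the forecasting metric (any error metric would do) and a three-step process of naive forecast, statistical forecast, and analyst override. The function names and all of the numbers are hypothetical and chosen purely for illustration.

```python
def mape(actuals, forecasts):
    """Mean absolute percentage error, in percent (assumes no zero actuals)."""
    errors = [abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)]
    return 100.0 * sum(errors) / len(errors)


def fva(actuals, before, after):
    """FVA of one process step: error before the step minus error after it.

    Positive means the step improved the forecast; negative means it made
    the forecast worse.
    """
    return mape(actuals, before) - mape(actuals, after)


# Hypothetical data, for illustration only.
actuals     = [100, 110, 120, 130]
naive       = [ 90, 100, 110, 120]  # previous period's actual carried forward
statistical = [ 98, 108, 123, 128]  # output of a statistical model
override    = [105, 118, 125, 140]  # statistical forecast after manual adjustment

print("Statistical vs. naive:", round(fva(actuals, naive, statistical), 2))
print("Override vs. statistical:", round(fva(actuals, statistical, override), 2))
```

With these made-up numbers the statistical step shows positive FVA over the naive forecast, while the manual override shows negative FVA: it made the forecast worse than the statistical model it started from.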

In the guest blog below, Snurre Jensen provides a different twist on the value a statistical forecast can provide. Snurre considers a situation where the statistical forecast shows a downward trend, meaning that sales are expected to fall. With this information on the future direction of sales, the organization can take demand-changing action (lowering price, increasing promotional activity, etc.), thereby sending future sales back up.

As Snurre shows, the original statistical forecast (not accounting for the organization's demand-changing activity) turned out to be poor, since it came in well below what actual sales turned out to be. Yet this wasn't such a bad thing. The original statistical forecast got management's attention and prompted them to do something proactive about the downward trend.

Usually, when discussing the value of statistical forecasting with companies that have yet to implement it in their forecast process, I am asked if I can “prove” the value. This quickly becomes a request to do a proof of concept where the aim is to compare the company’s current forecast accuracy with the forecast accuracy that is attainable by using the statistical forecast models in SAS Forecast Server on the customer’s own data.

While this “show me yours and I’ll show you mine” approach certainly generates a lot of numbers, few people realize that the numbers generated during such a proof of concept can be of little use in evaluating the pros and cons of adopting statistical forecasting.

What people sometimes forget is that forecasting is a process and, like every other process, it requires input in order to generate output. The statistical forecast is the input to the forecast process, and the output is a forecast that has been evaluated and augmented using information that is not incorporated into the statistical forecast. Trying to compare the two is like comparing apples and oranges. Only in cases where the forecast hasn’t been tinkered with does it make sense to compare the two – but then again, who doesn’t tinker with the forecast?

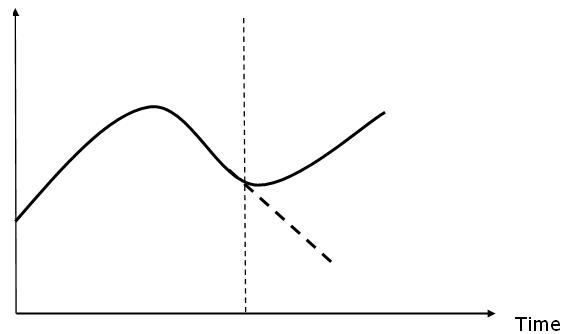

Actually, there are even cases where poor statistical forecast accuracy is a good thing and a sign of a well-functioning forecast process! Look at the graph below – the part to the left of the vertical dashed line represents history while the area to the right represents the forecast period. The statistical forecast shown as the dashed line highlighted the fact that sales were expected to decline. To counter this, the business implemented several measures (that weren’t incorporated into the statistical forecast) resulting in a reversal of the declining trend.

Did the statistical forecast turn out to be accurate? No way! Did it add value? You better believe it!

The way to evaluate the value of statistical forecasting is not by just looking at the end result – the statistical forecast. You need to measure what value the statistical forecast adds to the entire process. If you’re not familiar with how to do this, I’d recommend googling “forecast value added.”

3 Comments

I think marketing activities have a lot of impact on forecasting. Modern practices like S&OP also encourage sales to validate the forecast so that it reflects the current market situation. Still, a statistical forecast, in my opinion, is useful as a baseline for better decisions.