This article is a follow-on to a recent post from Jeff Owens, Getting started with SAS Containers. In that post, Jeff discussed building and running a single container for a SAS Viya runtime/IDE. Today we will go through how to build and run the full SAS Viya stack - visual components and all - in Kubernetes. Step 1 is building the container images and Step 2 is running the containers. For both steps, you can go to the sas-container-recipes GitHub repo for more detail and to obtain the tools needed to accomplish this task. An in-depth guide and more information is located on the wiki page in the repository.

The project development team at SAS has done an incredible job of making this new and intuitive way to dynamically create large collections of containers easy and foolproof, despite my long-winded explanation...

Building the Container Images

Keeping with the recipes theme, we are going to need to prepare a few ingredients to make this work. Of course, you will need a valid SAS_Viya_deployment_data.zip file containing your ordered products.

Build Machine

First, you need a Build Machine. This can be a lightweight server, but it needs to be running Linux. The build machine in this example is 2cpu x 8GB RAM, running RHEL 7.6. Hint – 2 cores is the minimum but the more you use for the build the better (faster). I have installed Docker version 18.09.5 here and I have a 100GB volume attached to my docker root (by default this is /var/lib/docker but you can easily change the location in your /etc/docker/daemon.json file).
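As a rough sketch of relocating the docker root, the snippet below writes a candidate daemon.json to a scratch file so you can review it before copying it into place. The /data/docker mount point is a made-up example, not something the recipes require; data-root is the daemon setting that controls this location.

```shell
# Hypothetical mount point for the large volume; substitute your own path.
docker_root=/data/docker

# Write to a scratch file first; the real file lives at /etc/docker/daemon.json.
daemon_json=$(mktemp)
cat > "$daemon_json" <<EOF
{
  "data-root": "$docker_root"
}
EOF
cat "$daemon_json"

# After copying the file into place and restarting docker, you can confirm with:
#   docker info --format '{{ .DockerRootDir }}'
```

If you already have a daemon.json with other settings, merge the data-root key into it rather than overwriting the file.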

You can review full system requirements in the GitHub repository here. This article covers the "multiple" or "full" deployment types so focus on that column in the table.

This build machine is going to execute the build script, which builds each one of your containers, pushes them to your Docker Registry, and creates the corresponding Kubernetes manifest files needed to launch your deployment.

Make sure you have cloned the sas-container-recipes repository to this machine.

Docker Registry

You will need access to a Docker registry. Your build machine must be able to push images into it, and your Kubernetes machines must be able to pull images from it. Prior to building, make sure you run the docker login myregistry.com command as the user who will run the build. This docker login ensures a file is present at $HOME/.docker/config.json. This is a requirement whether or not you secure the registry with a form of authentication. Note, if your registry does not respond to pings you will need to add the --skip-docker-url-validation parameter to the build command.
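Here is a small pre-flight check you might run before kicking off a build. It is only a sketch: the function name is my own, and it assumes the docker client records the registry under its hostname in the build user's config.json, which is what docker login normally does.

```shell
# Succeeds if the given Docker client config mentions the registry hostname.
# registry_in_docker_config is a hypothetical helper, not part of the recipes.
registry_in_docker_config() {
    config_file=$1
    registry=$2
    [ -f "$config_file" ] && grep -qF "\"$registry\"" "$config_file"
}

if registry_in_docker_config "$HOME/.docker/config.json" "myregistry.com"; then
    echo "config.json has an entry for myregistry.com"
else
    echo "run 'docker login myregistry.com' as the build user first"
fi
```

This only confirms that the login left an entry behind. It does not prove that the credentials are still valid or that a push will succeed.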

Mirror Repo (Optional)

Similar to the single containers build, it is a good idea to create a mirror repository to host your SAS rpms. A local mirror gives you consistent performance during installation and a consistent build. However, if your containers are able to connect to ses.sas.download then you can skip the mirror step. Beware of the network implications and the fluid nature of these repos.

LDAP

Just like any other SAS Viya environment, all users/groups/authentication/authorization are managed by connecting to an external LDAP. This could be a quick-and-dirty OpenLDAP server we stand up ourselves, or a corporate Active Directory server. Regardless, we will have to be able to make this connection if we want to use SAS Viya's visual interfaces. The easiest and best way to handle this connection is with a sitedefault.yml file. Below is a sample sitedefault.yml that would hypothetically connect to host.com's corporate LDAP. You need to construct your own sitedefault file using values for your LDAP. Consult SAS documentation (linked above) for further information.

    config:
        application:
            sas.logon.initial.password: sasboot
            sas.identities.providers.ldap.connection:
                host: myldap.host.com
                port: 389
                userDN: 'CN=ldapadmin,DC=host,DC=com'
                password: ldappassword
            sas.identities.providers.ldap.group:
                baseDN: OU=Groups,DC=host,DC=com
            sas.identities.providers.ldap.user:
                baseDN: DC=host,DC=com
            sas.identities:
                administrator: youruserid

Additionally, we will need to make sure a few of our containers have "host integration" with this same LDAP (specifically, the CAS container and the programming container). The way we do that is with a standard sssd.conf file. You should hopefully be able to track down a valid sssd.conf file for your site from an administrator. Hint – it may be necessary to add homedir (/home/%u) and default shell (/bin/bash) overrides to this file depending on your LDAP configuration.

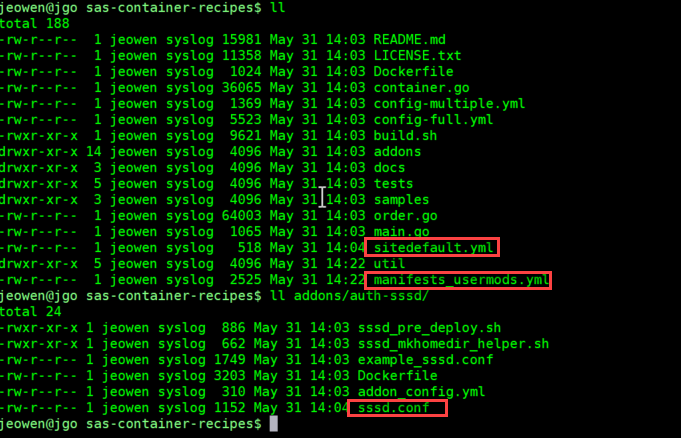

The way to apply these two files is:

- Place sssd.conf in the addons/auth-sssd directory and include the --addons addons/auth-sssd option when you run build.sh, as we do in the example later.
- Place sitedefault.yml in the top level of sas-container-recipes. If the recipe sees a sitedefault.yml file here, it will base64 encode it and embed it as a value in the consul.yml config map.

If you did not include sitedefault.yml pre-build, you can add it later. The step below is optional and post-build; it is only necessary in that case.

    cat sitedefault.yml | base64 --wrap=0

Next, copy and paste the output into your consul.yml configmap (by default you can find this in builds/full/manifests/kubernetes/configmaps/consul.yml). You want to add a new key/value similar to the following:

    consul_key_value_data_enc: Y29uZmlnOgogICAgYXBwbGlj......XNvZW1zaXRlLERDPWNvbQo=
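Before pasting an encoded value into consul.yml, it can be worth checking that it decodes back to exactly what you started with. The sketch below does that round trip with GNU coreutils base64. The scratch directory and the three-line sample file are stand-ins for your real sitedefault.yml.

```shell
# Hypothetical stand-in for your real sitedefault.yml
workdir=$(mktemp -d)
printf 'config:\n    application:\n        sas.logon.initial.password: sasboot\n' \
    > "$workdir/sitedefault.yml"

# Encode onto a single line (no wrapping), as the configmap value needs
encoded=$(base64 --wrap=0 "$workdir/sitedefault.yml")

# Decode again and compare byte-for-byte with the original
if printf '%s' "$encoded" | base64 --decode | cmp -s - "$workdir/sitedefault.yml"; then
    roundtrip=ok
else
    roundtrip=mismatch
fi

echo "consul_key_value_data_enc: $encoded"
echo "round trip: $roundtrip"
```

A mismatch usually means the value was wrapped or truncated somewhere along the way, which is why --wrap=0 matters here.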

Ingress

Ingress is a crucial component to make this come together because the only way to access your SAS Viya environment is through your Ingress. The recipe gives us an Ingress resource (one of the generated Kubernetes manifest files); however, an Ingress resource is simply an internal HTTP routing rule. We will need to make sure we have manually installed a valid Ingress controller inside of our Kubernetes environment, which can be a little tricky if you are new to Kubernetes. The Ingress controller reads and applies routing rules (Ingress resources) such as the ones created by the recipes.

Traefik and NGINX are the two most popular industry options. Or you might use native Ingresses offered by AWS, Azure, or GCP if you are running your Kubernetes cluster in the cloud. But to reiterate, you will need an Ingress controller up and running.

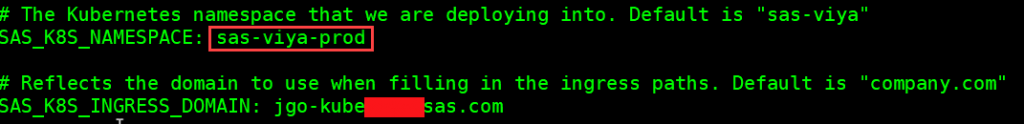

Once your Ingress controller is up, you need to edit the provided manifests_usermods.yml. Set SAS_K8S_INGRESS_DOMAIN to the DNS name that resolves to a Kubernetes node that can reach your Ingress controller. While you have this file open, you can also set a unique name for the Kubernetes namespace into which you want these resources deployed (the default is "sas-viya"). This manifests_usermods.yml file is available in the util/ directory, so to use it, first make a copy of that file in the top-level sas-container-recipes directory and edit it there.

Build.sh

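With all this in place we are ready to build. To summarize, the “pre-build” config needed here consists of the files we touched in this sas-container-recipes project:

- sitedefault.yml (top level)
- manifests_usermods.yml (top level, copied from util/)
- addons/auth-sssd/sssd.conf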

So, we can go ahead and launch the build script. I prefer using environment variables for easier readability along with copying and pasting when things change - new registries, mirrors, tags, etc.

    SAS_VIYA_DEPLOYMENT_DATA_ZIP=/path/to/SAS_Viya_deployment_data.zip
    MIRROR_URL=mymirror.com/myrepo    # optional
    DOCKER_REGISTRY_URL=myregistry.com
    SAS_RECIPE_TYPE=full
    DOCKER_REGISTRY_NAMESPACE=viya
    SAS_DOCKER_TAG=prod

    # --mirror-url is optional; drop that line if you are not using a mirror
    ./build.sh --type "$SAS_RECIPE_TYPE" \
        --mirror-url "$MIRROR_URL" \
        --docker-registry-url "$DOCKER_REGISTRY_URL" \
        --docker-registry-namespace "$DOCKER_REGISTRY_NAMESPACE" \
        --zip "$SAS_VIYA_DEPLOYMENT_DATA_ZIP" \
        --tag "$SAS_DOCKER_TAG" \
        --addons "addons/auth-sssd"

Once complete:

- We store container images (30-40 of them depending on the software you have ordered) locally in the build host's docker images directory.

- All these images also are tagged and pushed to our Docker Registry. For your organizational reference, the naming convention used is:

$DOCKER_REGISTRY_URL/$ DOCKER_REGISTRY_NAMESPACE/<SAS_K8S_NAMESPACE>-<containername>:$SAS_DOCKER_TAG

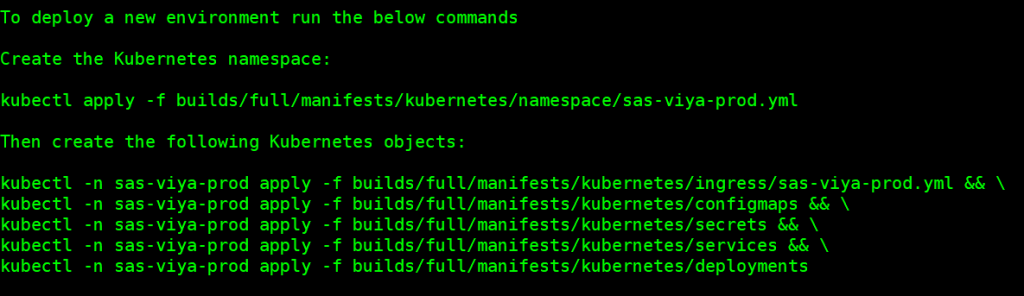

- All our Kubernetes manifests files are available on the build machine in

sas-container-recipes/builds/full/manifests/kubernetes. These fully configured manifest files are ready to use. They reference the images we have built and pushed. - The build log gives us instructions for how to apply these resources to Kubernetes. These are simple commands you should be able to copy and paste to standup our Viya environment.

- For the curious

  The list below is what happened during the build process. Feel free to skip this section; you do not need to know how any of this works to use the recipes:

  - You, the builder, invoke build.sh. This is a wrapper script around the greater build framework. This script created a "builder container." Check out the Dockerfile in the top level of the recipes directory. This builder container builds from a golang base image, because the build process is written in a few Go files (new as of April 2019). Several files from the sas-container-recipes project are copied into this container, including said Go files.
    - Note, we did not have to install Go on our build machine since Go is running inside a container.
    - If you are interested in seeing what the builder container looks like, you can run this command: docker run -it --rm --entrypoint /bin/bash sas-container-recipes-builder:$SAS_DOCKER_TAG
    - A 'sas' user is created inside of this container. This user has the same uid as the user who invoked build.sh on the host.
  - build.sh also created a new subdirectory on the host called 'builds/<buildtype>-<timestamp>'. This will contain logs, manifests, and various templates used during this specific build.
  - build.sh then runs that builder container and the real work gets underway. The entry point for the builder is: go run main.go container.go order.go. All those arguments you specified when invoking build.sh pass right into this Go program. Also, the newly created "builds" directory mounts into the container at /sas-container-recipes/builds.
    - The host's /var/run/docker.sock file mounts into this container. This allows the builder container to run docker (docker in docker).
  - This Go program then:
    - Generates a playbook from your deployment data file (SOE zip) using the [sas-orchestration tool](https://support.sas.com/en/documentation/install-center/viya/deployment-tools/34/command-line-interface.html).
    - Creates Kubernetes manifests for the images set to build.
    - Gathers sets of Ansible roles to install in each container, based on the entitlement of your software order.
    - Generates a Dockerfile for each container, where each applicable Ansible role installs in a new Docker layer.
    - Creates a "build context" for each container with the generated Dockerfile and the Ansible role files.
    - Starts a docker build process for each container. The Dockerfile installs ansible and executes the playbook "locally" (inside of each container).
    - Pushes these images into your registry as each build finishes.
  - Note, this happens inside of containers, and the builds execute concurrently. Recall this build machine has 2 cores, so only 2 containers build at a time and it took several hours. If we used a 16-core machine, this whole build would go faster. In another terminal, look at docker stats during the build. Another significant “performance” impact is the network bandwidth between your build machine and your registry.
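To make the image naming convention from the build results concrete, here is a small sketch that assembles one image reference from the same variables used in the build command. The container name httpproxy is just one example image, and sas-viya is the default namespace name; your values may differ.

```shell
DOCKER_REGISTRY_URL=myregistry.com
DOCKER_REGISTRY_NAMESPACE=viya
SAS_K8S_NAMESPACE=sas-viya      # default namespace name from manifests_usermods.yml
SAS_DOCKER_TAG=prod
container_name=httpproxy        # example only; pick any image from your build

# <registry>/<registry namespace>/<k8s namespace>-<container name>:<tag>
image_ref="${DOCKER_REGISTRY_URL}/${DOCKER_REGISTRY_NAMESPACE}/${SAS_K8S_NAMESPACE}-${container_name}:${SAS_DOCKER_TAG}"
echo "$image_ref"
```

With a reference like this in hand, docker pull "$image_ref" from another machine is a quick way to confirm that the push to your registry worked.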

Running the Containers

We are going to run these containers inside of a Kubernetes environment. Here are the finishing touches needed to give us a completely containerized SAS Viya environment running in Kubernetes. Note that by default this deploys into a new namespace inside of your Kubernetes cluster, which isolates these resources from anything else running there.

Kubernetes Environment

Since we built the full stack, we'll need to make sure we have sufficient resources to run all of these containers at the same time. We'll need a minimum of 8 cores and 80GB RAM available. Remember CAS is a multithreaded, in-memory runtime, so the more cores and RAM you provide, the more horsepower you'll have for doing actual analytical work with SAS and CAS.

Kubectl

Hopefully, if you've gotten this far you are familiar with kubectl, the client tool used to work with a Kubernetes cluster. Consider it a CLI wrapper around the Kubernetes API. But for thoroughness: you will launch your SAS Viya deployment from whichever machine you run kubectl on. If this happens to be the same machine you built on, then you can stay inside of the sas-container-recipes directory you started in, and copy and paste those kubectl apply -f... commands. Or you can copy your manifest files somewhere else and modify those commands accordingly. In either case, once those commands run, your environment is up, and you should be able to access SAS Environment Manager and other SAS web apps. If you added your userid as an administrator in the sitedefault.yml file, then you can log in as yourself with admin access.

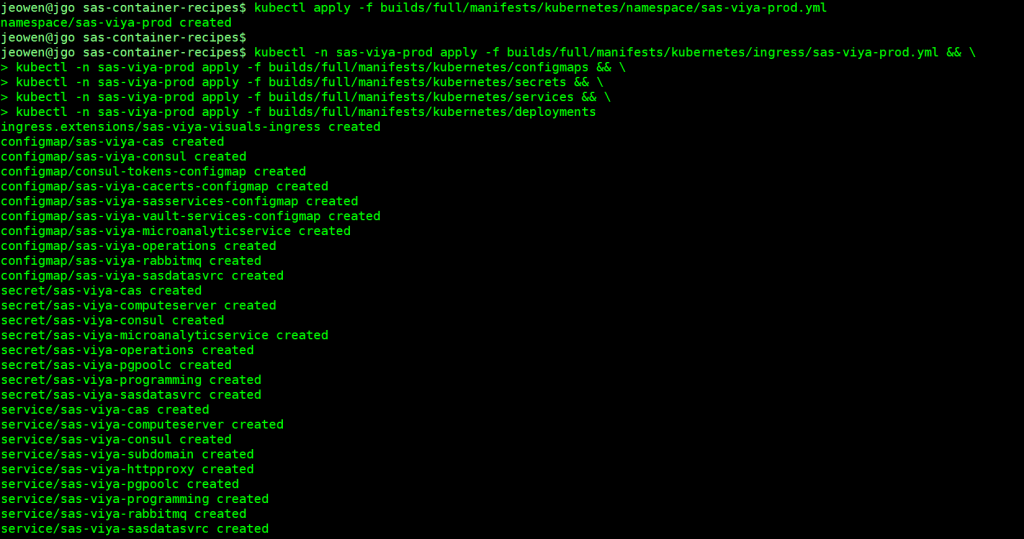

Apply the manifests:

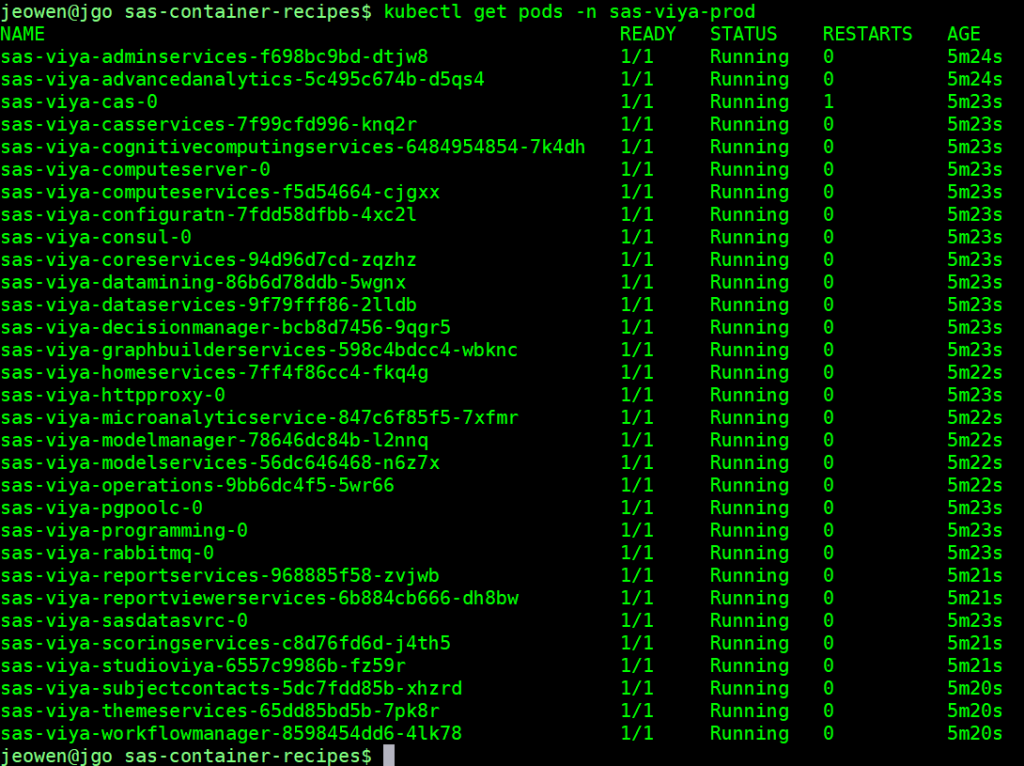

After a few minutes your pods should be up (the first time takes the longest since the images must be pulled). Note that a pod being in the Running state doesn't mean all your SAS Viya services are running. It may take up to 30 minutes for all services to be up and stabilized.

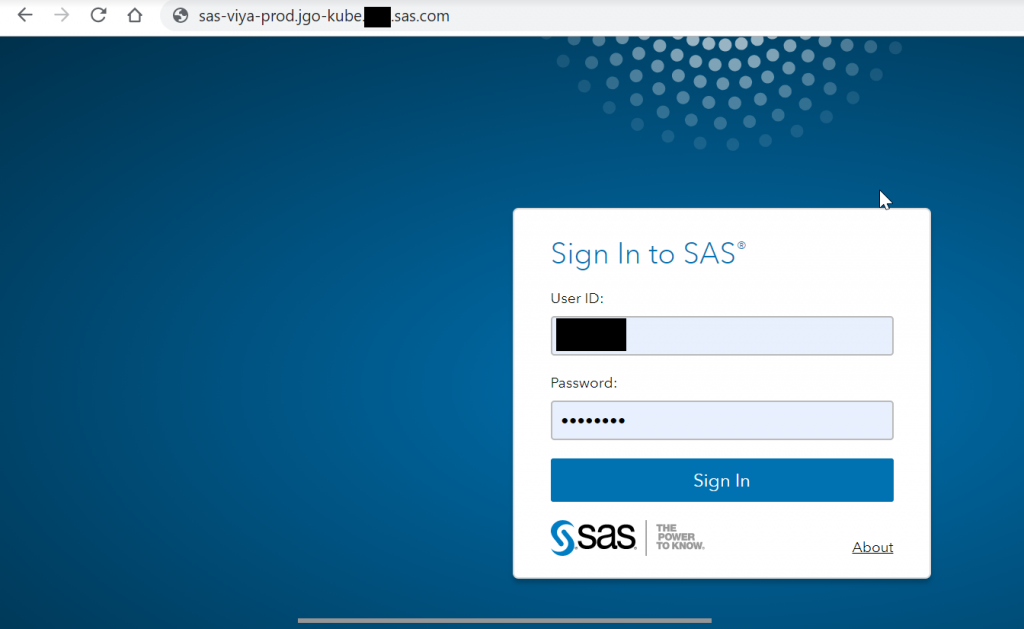
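If you would rather not eyeball the pod list, a rough sketch like the one below counts the pods that are not yet Running with all of their containers ready. The helper name is my own, and the three sample lines are made up for illustration. Keep in mind this only looks at pod status, so it cannot tell you whether the SAS Viya services inside the pods have finished starting.

```shell
# Reads `kubectl get pods --no-headers` output on stdin and prints how many pods
# are not Running or do not have all containers ready (READY column like 1/1).
count_unready_pods() {
    awk '{ split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) n++ }
         END { print n + 0 }'
}

# Against a live cluster (adjust the namespace to the one you deployed into):
#   kubectl get pods -n sas-viya --no-headers | count_unready_pods

# Demo with made-up sample lines in the same column layout:
printf '%s\n' \
    'sas-viya-cas-0        1/1  Running  0  5m' \
    'sas-viya-httpproxy-0  0/1  Running  0  5m' \
    'sas-viya-consul-0     0/1  Pending  0  5m' | count_unready_pods
```

Running this in a loop with a sleep gives you a simple "wait until zero" check while the images are being pulled.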

With your Ingress and DNS rules set up correctly, you should be able to reach your environment:

Based on properly configured sitedefault.yml and sssd.conf files, you should be able to log in as an LDAP user.

Miscellaneous Notes

Scaling

Once your SAS Viya environment is up and running in Kubernetes, the following kubectl command adds CAS worker nodes to scale out the capacity of our CAS server.

    kubectl scale deployment sas-viya-cas-worker --replicas=5 -n sas-viya-prod

Note, there isn’t any value in adding any more workers than you have physical nodes in your cluster.
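One way to respect that limit is to cap the requested worker count at the number of nodes before scaling. The sketch below shows the idea; cap_workers is a hypothetical helper, and the node count of 3 is a placeholder for what your cluster actually reports.

```shell
# Print the smaller of the desired worker count and the node count.
cap_workers() {
    desired=$1
    nodes=$2
    if [ "$desired" -gt "$nodes" ]; then
        echo "$nodes"
    else
        echo "$desired"
    fi
}

# Against a live cluster, the node count could come from:
#   nodes=$(kubectl get nodes --no-headers | wc -l)
nodes=3   # hypothetical cluster size

replicas=$(cap_workers 5 "$nodes")

# Print the command rather than running it, so you can review it first.
echo "kubectl scale deployment sas-viya-cas-worker --replicas=$replicas -n sas-viya-prod"
```

Counting every node is a simplification. If some nodes are tainted or otherwise unable to host CAS workers, you would want to count only the eligible ones.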

Performance

SAS is a powerful programming language designed to handle heavy workloads on large data. General hardware performance has historically been a chief concern to customers implementing SAS. Containers bring a whole new wrinkle to the concept of performance given the general notion of hardware abstraction. One performance-related question is: how can we ever guarantee the IO provided by the underlying filesystem (SASWORK, CAS_DISK_CACHE)? As with storage and state in Kubernetes in general, no easy answer exists. It falls to the Kubernetes operator to make high-performance filesystems (e.g., local SSD) available on all nodes a SAS programming or CAS container might land on, and to manually edit the corresponding manifest files to leverage those host disks. Alternatively, we can try to limit the burden on these scratch disk spaces. For CAS, this means ensuring we have more RAM available than data in use.

Amnesia

See the summary section below for a caveat about this deployment methodology: this is not quite a complete implementation for “production” types of environments, at least not without understanding the customer's configuration requirements. You should have a discussion with your SAS account team about some of these details. But please be aware that building and deploying as we did here leaves us with an “Amnesiac Viya” (a useful term coined by an astute SAS employee). That is, there is no state here. If and when you take your environment down, or scale pods to 0 across services, you will get a "brand new" or "fresh" environment once it is brought back up. The good news is this also means if we run into any issues, we can easily delete the whole namespace and restart. If you want to persist any user data, config, reports, code, etc. you will have to manually attach storage to a few locations.

Full vs Multiple

Note, here we used SAS_RECIPE_TYPE=full (passed to build.sh as --type). This built the entire Viya stack, visual interfaces, microservices and all. Alternatively, if we set the type to "multiple" we get three container images: programming, httpproxy, and cas. This would be all we need if we wanted to write SAS code, whether we wanted to use SAS Studio or an external IDE like Jupyter. And we could still scale out our CAS cluster the same way as we did in our full environment.

Summary

Like everyone else's, the SAS container strategy is quickly evolving. SAS Viya, as a scalable, highly available services-oriented architecture, is a perfect fit to run in containers inside of the Kubernetes orchestration framework. Kubernetes brings tremendous operational benefits to the table for this type of software: smoother deployments, higher uptime, instant scale, and much more efficient hardware usage, to name a few.

As you will see in the build log when running the recipe, this is an "EXPERIMENTAL" deployment process. The recipes are an excellent way to get your hands on a Kubernetes version of SAS Viya early. Future releases of SAS Viya will be fully "containerized" and "kubernetes-ized" so customers likely won’t be building their own containers in this manner. Rather, SAS will provide a Helm chart or something similar to customers which will pull container images straight from SAS and apply them into their Kubernetes environments appropriately. Further, many aspects of SAS Viya’s infrastructure will be redesigned to be more "Kubernetes native," but the general feel of this model is what sysadmins/operators should see from SAS going forward.

5 Comments

Hi Joe,

Do you know when we will be able to see a similar Blog on Viya 4 and Kubernetes?

Thanks

Casey

Hi Casey,

I'm checking with the SME behind this blog post, Jeff Owens, to see if an updated post is in the works. For now I'd recommend referring to the multiple SAS Viya 2020.1 repositories on GitHub. Particularly looking at viya4-deployment and viya4-monitoring-kubernetes.

Thanks,

Joe

Hi Joe,

I followed your guide but even specifying type "full" as parameter of the build.sh I'm still getting only three containers built (proxy, cas, programming) as in the multiple setup. Could it be a licensing issue?

KR

Hi Joe

Thanks for this article. Can we add MAS node on the flow to scale out the capacity of MAS, same as CAS worker nodes.

Hi Sanket,

I checked with Jeff Owens and he indicated scaling MAS deployment will indeed add more pods. He's not sure whether or not those new pods register with Consul properly and become usable in the way you may expect, but it's definitely worth trying out.