As data volumes continue to surge (with no signs of slowing down), the cost to handle all that data can have a very real impact on IT budgets.

But for all the talk of the growing value of data, the cost side of the equation is often overlooked. After all, it not only takes a lot of drives to hold all that data, it requires significant computing resources to handle it. The costs quickly add up, forcing IT leaders to make tough choices about where to make their IT investments.

This is as true for SAS users as it is for any other IT organization. We work closely with our customers and know that the cost of our analytics software is only part of the budget puzzle – total cost of ownership (TCO) casts a long shadow over their budget decisions. So we were as curious as they were when Intel released its Intel® Optane™ DC Persistent Memory.

What is persistent memory?

Data written to persistent memory remains accessible using memory instructions even after the process that created or last modified it is gone. In this respect, persistent memory is like a hard disk drive (HDD). But data in persistent memory can be accessed considerably faster than data on an HDD.
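To make "accessible using memory instructions" a little more concrete, here is a minimal Python sketch. It is only an analogy: an ordinary temporary file stands in for a region of persistent memory, and the names `write_region`, `read_region`, `demo` and `REGION_SIZE` are made up for illustration. On real persistent memory hardware an application would more likely map a file on a DAX-enabled file system, typically through a library such as PMDK, which this sketch does not attempt.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # hypothetical region size (one page) for the demo


def write_region(path: str, payload: bytes) -> None:
    """Map the file into memory and store bytes into it like an ordinary buffer."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            region[: len(payload)] = payload  # a memory store, not a write() call
            region.flush()  # ask the OS to push the stores to the backing file


def read_region(path: str, length: int) -> bytes:
    """Map the same file again, as a later process would, and load the bytes back."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            return bytes(region[:length])


def demo() -> bytes:
    # Assumption: a temp file plays the role of a file backed by persistent memory.
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "r+b") as f:
            f.truncate(REGION_SIZE)  # size the file so it can be mapped
        write_region(path, b"still here")
        # The first mapping is closed by now; the data is read through a new one.
        return read_region(path, len(b"still here"))
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is the access pattern: the data is reached with loads and stores on a mapped region rather than explicit read and write calls, and it is still there after the original mapping is gone.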

Of course, as longtime collaborators, SAS and Intel worked closely together to optimize SAS software for this innovation. But how would it work in real-life applications outside of the labs? Would the combination of Intel Optane DC Persistent Memory and SAS® Viya® have a real impact, or would it just represent yet another incremental improvement – nice, but not enough to change the IT investment-performance equation?

Testing confirms performance

There was only one way to find out, of course: Test it.

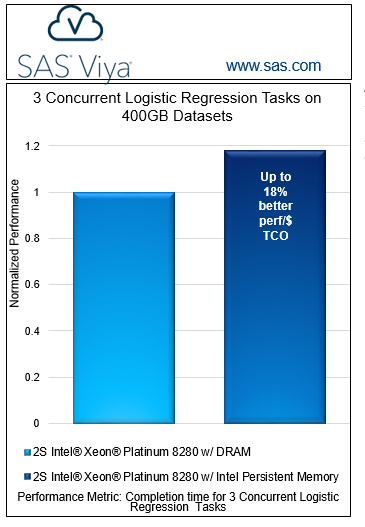

So that’s what we did. In collaboration with Intel, we ran SAS Viya 3.4 on Intel Optane DC Persistent Memory. More specifically, the testing compared performance of a two-socket second-generation Intel® Xeon® Platinum 8280 processor with DRAM to a two-socket second-generation Intel Xeon Platinum 8280 processor with Intel Optane DC persistent memory. (See editor's note below for details.)

We didn’t hold back, either – we ran multiple instances of a large model containing 400 gigabytes of data, concurrently. All these jobs finished in a matter of minutes. Just as important, the costs of achieving performance in a persistent memory environment with SAS Viya, when compared to DRAM and SAS Viya, make their own compelling case.

Intel Optane persistent memory delivers more than 95 percent of DRAM performance, at a cost per unit of performance that is approximately 18 percent lower. With second-generation Intel Xeon Scalable Processors and Intel Optane DC persistent memory in Memory Mode, it’s clear that users can take advantage of the benefits of systems with larger capacity memory, with little or no performance degradation.
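For readers who want to see how those two figures relate to overall system cost, here is a small back-of-the-envelope sketch in Python. It rests on an assumption about how to read the numbers: that the 18 percent figure is a reduction in cost divided by performance, and that performance is somewhere between 95 and 100 percent of the DRAM baseline. The function name `relative_system_cost` is hypothetical, and no actual prices from the test are used because none are published here.

```python
def relative_system_cost(relative_performance: float,
                         cost_per_perf_reduction: float) -> float:
    """Return system cost relative to the DRAM baseline (1.0 = same cost).

    Since cost_per_performance = cost / performance, it follows that
    cost = cost_per_performance * performance, with both terms expressed
    relative to the baseline.
    """
    relative_cost_per_perf = 1.0 - cost_per_perf_reduction
    return relative_cost_per_perf * relative_performance


if __name__ == "__main__":
    # Assumed inputs: 95% and 100% relative performance, 18% lower cost per performance.
    print(f"{relative_system_cost(0.95, 0.18):.3f}")  # 0.779
    print(f"{relative_system_cost(1.00, 0.18):.3f}")  # 0.820
```

Under that reading, the implied system cost is roughly 18 to 22 percent below the DRAM baseline, which is in the same range as the savings of 20 percent or more described later in this post.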

Greater memory capacity = substantial cost savings

But performance may not even be the most interesting part of this story – not when you compare it to the cost benefits of running in this environment. In short, compared with DRAM – equal capacity memory, equal CPU, and so on – using Intel Optane DC Persistent Memory leads to a reduction in costs of 20 percent or more. That’s a tangible, meaningful impact for any IT budget, allowing CIOs to invest more heavily to address other pressing needs. By contrast, system costs increase dramatically with higher DRAM capacity.

Plus, it may be possible in the future to use the memory module in different modes – an opportunity we’re currently testing in non-volatile random-access memory (NVRAM) mode with the goal of allowing applications to take advantage of this benefit.

How does this combination of persistent memory tools and SAS Viya contribute to faster speeds and lower costs? The massive scale of data typically handled by SAS customers can be handled by fewer servers, because each can now have a much greater memory capacity. This equation can translate into a significant reduction in the total cost of ownership.
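The "fewer servers" argument is simple arithmetic, sketched below in Python. The numbers are illustrative assumptions, not results from the test: a hypothetical 6,000 GB in-memory working set, a hypothetical 384 GB DRAM-only server, and a server with 1,536 GB of memory like the one in the editor's note. The helper `servers_needed` is a made-up name, and the sketch ignores operating system overhead, replication and headroom.

```python
import math


def servers_needed(total_data_gb: float, memory_per_server_gb: float) -> int:
    """Smallest number of servers whose combined memory holds the data."""
    if memory_per_server_gb <= 0:
        raise ValueError("memory_per_server_gb must be positive")
    return math.ceil(total_data_gb / memory_per_server_gb)


if __name__ == "__main__":
    working_set_gb = 6000  # hypothetical total in-memory working set

    print(servers_needed(working_set_gb, 384))   # 16 smaller-memory servers
    print(servers_needed(working_set_gb, 1536))  # 4 large-memory servers
```

Each server removed from the count also removes its share of licensing, power, rack space and administration, which is where the total cost of ownership effect comes from.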

There are a lot of factors, many of which are unique to your organization, that go into reducing the total cost of ownership – so it goes without saying that your mileage may vary. Regardless, this is a conversation your organization needs to be having right now, if it hasn’t already. We’re happy to answer your questions about running SAS Viya together with Intel Optane DC Persistent Memory.

Want a deeper dive? | See the video on Intel Optane DC Persistent Memory

Editor's Note: Specs for this test included:

- SAS® Viya® In-memory Analytics: SAS® Viya 3.4 VDMML application.

- Workload: 3 concurrent logistic regression tasks each running on 400GB datasets.

- Testing by: Intel and SAS, completed on February 15, 2019.

- Baseline hardware for comparison: 2S Intel® Xeon® Platinum 8280 processor, 2.7GHz, 28 cores, turbo and HT on, BIOS SE5C620.86B.0D.01.0286.011120190816, 1536GB total memory, 24 slots / 64GB / 2666 MT/s / DDR4 LRDIMM, 1x 800GB Intel SSD DC S3710 OS Drive + 1x 1.5TB Intel Optane SSD DC P4800X NVMe Drive for CAS_DISK_CACHE + 1x 1.5TB Intel SSD DC P4610 NVMe Drive for application data, CentOS Linux* 7.6 kernel 4.19.8.

- New hardware tested: 2S Intel® Xeon® Platinum 8280 processor, 2.7GHz, 28 cores, turbo and HT on, BIOS SE5C620.86B.0D.01.0286.011120190816, 1536GB Intel Optane DCPMM configured in Memory Mode (8:1), 12 slots / 128GB / 2666 MT/s, 192GB DRAM, 12 slots / 16GB / 2666 MT/s DDR4 LRDIMM, 1x 800GB Intel SSD DC S3710 OS Drive + 1x 1.5TB Intel Optane SSD DC P4800X NVMe Drive for CAS_DISK_CACHE + 1x 1.5TB Intel SSD DC P4610 NVMe Drive for application data, CentOS Linux* 7.6 kernel 4.19.8.
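
As a quick sanity check on the memory figures in the specs above, the short Python sketch below recomputes the totals from the slot counts and module sizes listed there. The constant names and the `summarize` helper are made up for illustration. The "fits" check compares raw dataset size with memory capacity only, which is an assumption: the actual in-memory footprint of the workload is not stated here.

```python
# Figures copied from the editor's note; names are illustrative only.
BASELINE_DRAM_GB = 24 * 64       # baseline: 24 slots of 64GB DDR4
PMEM_GB = 12 * 128               # new system: 12 slots of 128GB Optane DCPMM
PMEM_SYSTEM_DRAM_GB = 12 * 16    # new system: 12 slots of 16GB DDR4
WORKLOAD_GB = 3 * 400            # 3 concurrent tasks on 400GB datasets


def summarize() -> dict:
    """Recompute capacity totals and the persistent-memory-to-DRAM ratio."""
    return {
        "baseline_dram_gb": BASELINE_DRAM_GB,
        "pmem_gb": PMEM_GB,
        "pmem_system_dram_gb": PMEM_SYSTEM_DRAM_GB,
        "pmem_to_dram_ratio": PMEM_GB / PMEM_SYSTEM_DRAM_GB,
        "workload_gb": WORKLOAD_GB,
        # Assumption: raw dataset size is used as a stand-in for memory footprint.
        "workload_fits_in_pmem": WORKLOAD_GB <= PMEM_GB,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

The totals come out to 1536GB on both systems, 192GB of DRAM alongside the persistent memory, and a ratio of 8.0, which matches the 8:1 Memory Mode configuration listed in the specs.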