This blog post was also written by SAS' Bari Lawhorn.

We have had several requests from customers who want to use SAS® software to automate the download of data from a website when there is no application programming interface (API) to do it. As an example, the Yahoo Finance website recently changed their service to decommission their API, and this generated an interesting challenge for one of our customers. This SAS programmer wanted to download historical stock price data "unattended," without having to click through a web page. While working on this case, we discovered that the Yahoo Finance website requires a cookie-crumb combination to download. To help you automate downloads from websites that do not have an API, this blog post takes you through how we used the DEBUG feature of PROC HTTP to achieve partial automation, and eventually full automation with this case.

Partial automation

To access the historical data for Apple stock (symbol: AAPL) on the Yahoo Finance website, we use this URL: https://finance.yahoo.com/quote/AAPL/history?p=AAPL

We click Historical Data --> Download Data and get a CSV file with historical stock price data for Apple. We could save this CSV file and read it into SAS. But, we want a process that does not require us to click in the browser.

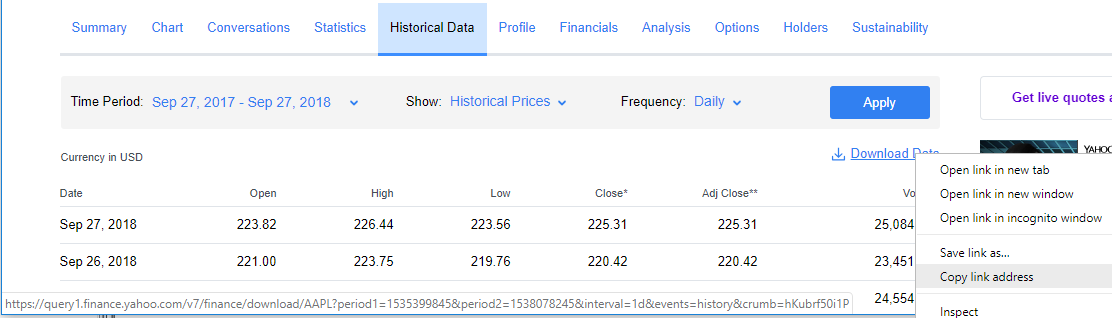

Because we know the HTTP procedure, we right-click Download Data and then select Copy link address as shown from a screen shot using the Google Chrome browser below:

Note: The context menu that contains Copy link address looks different in each browser.

Using this link address, we expect to get a direct download of the data into a CSV file (note that your crumb= will differ from ours):

filename out "c:\temp\aapl.csv"; proc http url='https://query1.finance.yahoo.com/v7/finance/download/AAPL?period1=1535399845&period2=1538078245&interval=1d&events=history&crumb=hKubrf50i1P' method="get" out=out; run; |

However, the above code results in the following log message:

NOTE: PROCEDURE HTTP used (Total process time): real time 0.25 seconds cpu time 0.14 seconds NOTE: 401 Unauthorized |

When we see this note, we know that the investigation needs to go further. The PROC HTTP DEBUG statement (available as of in SAS 9.4 Maintenance 5) helps us get more information in the log about the failure to connect. Here's how we use that statement in the code:

filename out "c:\temp\aapl.csv"; proc http url='https://query1.finance.yahoo.com/v7/finance/download/AAPL?period1=1535399845&period2=1538078245&interval=1d&events=history&crumb=hKubrf50i1P' method="get" out=out; debug level=3; run; |

When we run the code, here's what we see in the log (snipped for convenience):

> GET /v7/finance/download/AAPL?period1=1535399845&period2=1538078245&interval=1d&events=history&crumb=h Kubrf50i1P HTTP/1.1 > User-Agent: SAS/9 > Host: query1.finance.yahoo.com > Accept: */* > Connection: Keep-Alive > Cookie: B=fpd0km1dqnqg3&b=3&s=ug > < HTTP/1.1 401 Unauthorized < WWW-Authenticate: crumb < Content-Type: application/json;charset=utf-8 …more output… < Strict-Transport-Security: max-age=15552000 …more output… { "finance": { "error": { "code": "Unauthorized", "description": "Invalid cookie" } }} NOTE: PROCEDURE HTTP used (Total process time): real time 0.27 seconds cpu time 0.15 seconds NOTE: 401 Unauthorized |

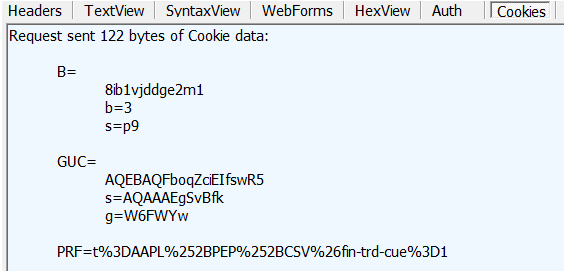

The log snippet reveals that we did not provide the Yahoo Finance website with a valid cookie. It is important to note that the response header for the URL shows crumb for the authentication method (the line that shows WWW-Authenticate: crumb. A little web research helps us determine that the Yahoo site wants a cookie-crumb combination, so we need to also provide the cookie. But, why did we not need this step when we were using the browser? We used a tool called Fiddler to examine the HTTP traffic and discovered that the cookie was cached when we first clicked in the browser on the Yahoo Finance website:

Luckily, starting in SAS® 9.4M3 (TS1M3), PROC HTTP will set cookies and save them across HTTP steps if the response contains a "set-cookie: <some cookie>" header when it successfully connects to a URL. So, we try this download in two steps. The first step does two things:

- PROC HTTP sets the cookie for the Yahoo Finance website.

- Adds the DEBUG statement so that we can obtain the crumb value from the log.

filename out "c:\temp\Output.txt"; filename hdrout "c:\temp\Response.txt"; proc http out=out headerout=hdrout url="https://finance.yahoo.com/quote/AAPL/history?p=AAPL" method="get"; debug level=3; run; |

Here's our log snippet showing the set-cookie header and the crumb we copy and use in our next PROC HTTP step:

…more output… < set-cookie: B=2ehn8rhdsf5r2&b=3&s=fe; expires=Wed, 17-Oct-2019 20:11:14 GMT; path=/; domain=.yahoo.com …more output… Initialized"},"account-switch-uh-0-AccountSwitch":{"status":"initialized"}}},"CrumbStore":{"crumb": "4fKG9lnt5jw"},"UserStore":{"guid":"","login":"","alias":"","firstName":"","comscoreC14":-1,"isSig |

The second step uses the cached cookie from Yahoo Finance (indicated in the "CrumbStore" value), and in combination with the full link that includes the appropriate crumb value, downloads the CSV file into our c:\temp directory.

filename out "c:\temp\aapl.csv"; proc http url='https://query1.finance.yahoo.com/v7/finance/download/AAPL?period1=1535399845&period2=1538078245&interval=1d&events=history&crumb=4fKG9lnt5jw' method="get" out=out; run; |

With the cookie value in place, our download attempt succeeds!

Here is our log snippet:

31 32 proc http 33 out=data 34 headerout=hdrout2 35 url='https://query1.finance.yahoo.com/v7/finance/download/AAPL?period1=1534602937&peri 35 ! od2=1537281337&interval=1d&events=history&crumb=4fKG9lnt5jw' 36 method="get"; 37 run; NOTE: PROCEDURE HTTP used (Total process time): real time 0.37 seconds cpu time 0.17 seconds NOTE: 200 OK |

Full automation

This partial automation requires us to visit the website and right-click on the download link to get the URL. There’s nothing streamlined about that, and SAS programmers want full automation!

So, how can we fully automate the process? In this section, we'll share a "recipe" for how to get the crumb value -- a value that changes with each transaction. To get the current crumb, we use the first PROC HTTP statement to "screen scrape" the URL and to cache the cookie value that comes back in the response. In this example, we store the first response in the Output.txt file, which contains all the content from the page:

filename out "c:\temp\Output.txt"; filename hdrout "c:\temp\Response.txt"; proc http out=out headerout=hdrout url="https://finance.yahoo.com/quote/AAPL/history?p=AAPL" method="get"; run; |

It is a little overwhelming to examine the web page in its entirety. And the HTML page contains some very long lines, some of them over 200,000 characters long! However, we can still use the SAS DATA step to parse the file and retrieve the text or information that might change on a regular basis, such as the crumb value.

In this DATA step we read chunks of the text data and scan the buffer for the "CrumbStore" keyword. Once found, we're able to apply what we know about the text pattern to extract the crumb value.

data crumb (keep=crumb); infile out recfm=n lrecl=32767; /* the @@ directive says DON'T advance pointer to next line */ input txt: $32767. @@; pos = find(txt,"CrumbStore"); if (pos>0) then do; crumb = dequote(scan(substr(txt,pos),3,':{}')); /* cookie value can have unicode characters, so must URLENCODE */ call symputx('getCrumb',urlencode(trim(crumb))); output; end; run; %put &=getCrumb.; |

Example result:

102 %put &=getCrumb.; GETCRUMB=PWDb1Ve5.WD

We feel so good about finding the crumb, we're going to treat ourselves to a whole cookie. Anybody care for a glass of milk?

Complete Code for Full Automation

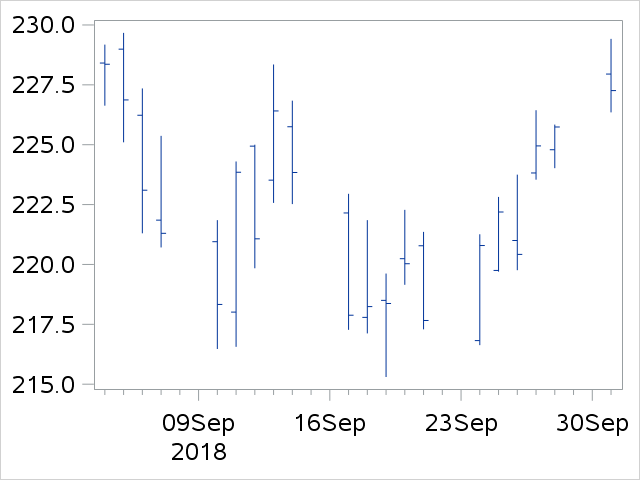

The following code brings it all together. We also added a PROC IMPORT step and a bonus highlow plot to visualize the results. We've adjusted the file paths so that the code works just as well on SAS for Windows or Unix/Linux systems.

/* use WORK location to store our temp files */ filename out "%sysfunc(getoption(WORK))/output.txt"; filename hdrout "%sysfunc(getoption(WORK))/response1.txt"; /* This PROC step caches the cookie for the website finance.yahoo.com */ /* and captures the web page for parsing later */ proc http out=out headerout=hdrout url="https://finance.yahoo.com/quote/AAPL/history?p=AAPL" method="get"; run; /* Read the response and capture the cookie value from */ /* the CrumbStore field. */ /* The file has very long lines, longer than SAS can */ /* store in a single variable. So we read in <32k chunks. */ data crumb (keep=crumb); infile out recfm=n lrecl=32767; /* the @@ directive says DON'T advance pointer to next line */ input txt: $32767. @@; pos = find(txt,"CrumbStore"); if (pos>0) then do; crumb = dequote(scan(substr(txt,pos),3,':{}')); /* cookie value can have unicode characters, so must URLENCODE */ call symputx('getCrumb',urlencode(trim(crumb))); output; end; run; %put &=getCrumb.; filename data "%sysfunc(getoption(WORK))/data.csv"; filename hdrout2 "%sysfunc(getoption(WORK))/response2.txt"; proc http out=data headerout=hdrout2 url="https://query1.finance.yahoo.com/v7/finance/download/AAPL?period1=1535835578%str(&)period2=1538427578%str(&)interval=1d%str(&)events=history%str(&)crumb=&getCrumb." method="get"; run; proc import file=data out=history dbms=csv replace; run; proc sgplot data=history; highlow x=date high=high low=low / open=open close=close; xaxis display=(nolabel) minor; yaxis display=(nolabel); run; |

Disclaimer: As we've seen, Yahoo Finance could change their website at any time, so the URLs in this blog post might not be accurate at a later date. Note that, as of the time of this writing, the above code runs error-free with Base SAS 9.4M5. And it also works in SAS OnDemand for Academics!

9 Comments

PROC HTTP is a powerful statement. When it works, it grabs data quick and makes my life easy for automation. However, if the button does not have an option to get "Copy link address" (because website is designed that way – button for a script to run) then what other SAS option do we have?

Thanks for this blog post. It's helpful.

Ash,

PROC HTTP has to have an endpoint to access, so if there is not one made available to the browser / end user, it won't be available to SAS. If you reach out to the web hosting team for the site in question, please ask them if there is an API or other means for you to access the data and we can assist you further.

Thanks for reading the blog!

Thanks for sharing the knowledge. Very informative and clearly explained!

Dude nice!! I love this.

Thank you, Adam!! Very helpful!

Asim,

Does the following explanation and sample code help with the automation you're seeking?

The dates in the url are listed as period1 and period2. Note that these are not normal dates, but instead they are epoch* numbers. *The time in seconds from 1/1/1970.

https://query1.finance.yahoo.com/v7/finance/download/&stock.?period1=1535835578%str(&)period2=1538427578%str(&)interval=1d%str(&)events=history%str(&)crumb=&getCrumb."

SAS doesn’t natively convert epoch time to a date or vice versa, so we will need to convert these ourselves.

SAS calculates dates as the number of dates from 1/1/1960. Knowing this we can convert a SAS date to an epoch number. To do this we can start by creating some date macro variables and write a simple data step for the conversion.

%let begDate=01aug2018;

%let endDate=26aug2019;

data dates;

call symput('period1', "&begDate"d * 86400 - 315569260);

call symput('period2', "&endDate"d * 86400 - 315569260);

run;

What this is doing is:

1. Getting the amount of days of the defined date from 1/1/1960

2. We then multiply it by the number of seconds in a day (86,400)

3. Then subtract 315,569,260 seconds from the total. (We do this because there is a 10-year gap from 1/1/1960 and 1/1/1970. 315,569,260 is the average amount of seconds for 10 years *365.242 days per year*)

4. The new values are then stored in new macro that we can then add to the url

After the conversion and setting of the new macro variables, we can substitute the url as follows:

https://query1.finance.yahoo.com/v7/finance/download/&stock.?period1=&period1.%str(&)period2=&period2.%str(&)interval=1d%str(&)events=history%str(&)crumb=&getCrumb.

Hi Adam. I was able to make your code work with updated link for VIX. Great help!!!.The only modification I made was to add the following and it worked:

proxyhost="&proxy_host."

proxyport=&port

However, do you know how I can determine the period2 dynamically? We plan to extract the data every month for the whole history so while period1 is constant, period2 is changing. Is there a logic to figure it out ?

This was a good tutorial on grabbing connection parameters during the automation step. We have SAS Viya, and I found this page looking for a way to schedule running a SAS program like this, maybe with SAS Data Management or another process. Is that something that you've had success with, because the automation describe above still requires (I think) for someone to run the program. If I missed it, please let me know (I apologize in advance). Any thoughts are greatly appreciated. Nice work!

Thanks Adam. Very informative!!