One of the great things the new Data Mart does for you is combine data from all the machines in a multi-machine deployment into one storage area, where it is used to create many of the reports in the Report Center. This capability was introduced in the 14w41 (M2) release of SAS 9.4. The SAS Environment Manager Data Mart (hereafter called the “data mart”) is part of the new Service Architecture Framework; it is the component that provides forensic analysis of system usage and capacity, resource allocations, and more. The APM (Audit, Performance, Measurement) part of the data mart contains many of the same functions as the older APM package from previous SAS releases.

A previous blog post by Gilles Chrzaszcz described how to initialize the Service Architecture Framework and its various components. The same process is also described in the document that ships with the installation: SAS_Environment_Manager_Service_Architecture_Quickstart.pdf.

But combining data from more than one machine into one data mart requires a little fiddling. First, you have to set up file mounts so that you can read the files on the other machines. The files needed are the log files generated by all the SAS servers on all the machines in the deployment. This assumes that the machines can all reach each other over the network by their domain names.

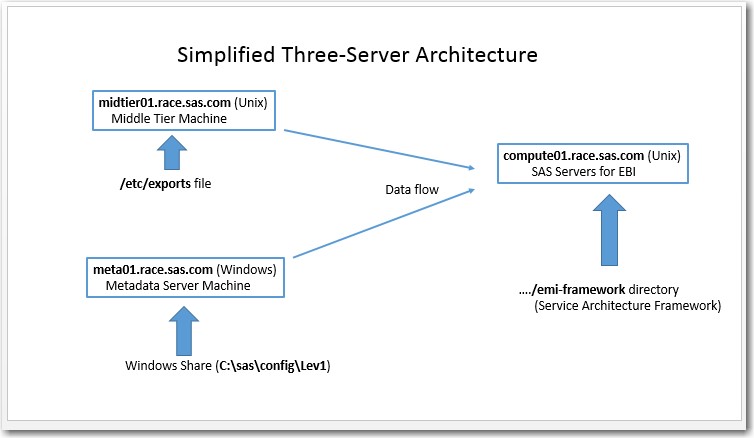

The example shown here is a three-machine configuration: the SAS Metadata Server is on a Windows machine, the SAS compute tier is on a Linux machine, and the SAS middle tier is on another Linux machine. The first thing to realize is that in any multi-machine setup, the Service Architecture Framework is installed on the compute tier, that is, the machine that is guaranteed to have Base SAS with the SAS workspace and stored process servers. Note that this is often not the machine that hosts the SAS EV Server, nor is it necessarily the machine that hosts the WIP Data Server. The reason is that the data mart is created by a series of SAS jobs, so the machine must have Base SAS available.

Here’s a map of this setup:

In this case, our “client” (the compute machine, on the right side of the diagram) is a Linux box that needs to read files from the other two machines. It contains the installation of the Service Architecture Framework (the /emi-framework directory), and it needs log files from 1) its own configuration directory, 2) the middle tier machine (Linux), and 3) the metadata server machine (Windows). Because these logs reside within the SAS configuration directory on each of the three machines, that is the directory we will make available on all machines.

We will use the Linux mount command to access the directories that are not local, but first we have to set up these directories as shareable on the other two machines.

First, Linux (middle tier machine):

- As the root user, add the following line to the file /etc/exports:

/opt/sas/config/Lev1 *(rw,sync)

- From the shell, issue the following command, which re-exports every directory listed in /etc/exports and updates the export table in /var/lib/nfs/etab:

exportfs -r

- Last, make sure the NFS (Network File System) service is running:

service nfs start

(You can issue ‘service nfs status’ first to check.)

- To have the NFS service start automatically at boot (runlevels 3, 4, and 5), issue this command:

chkconfig --level 345 nfs on

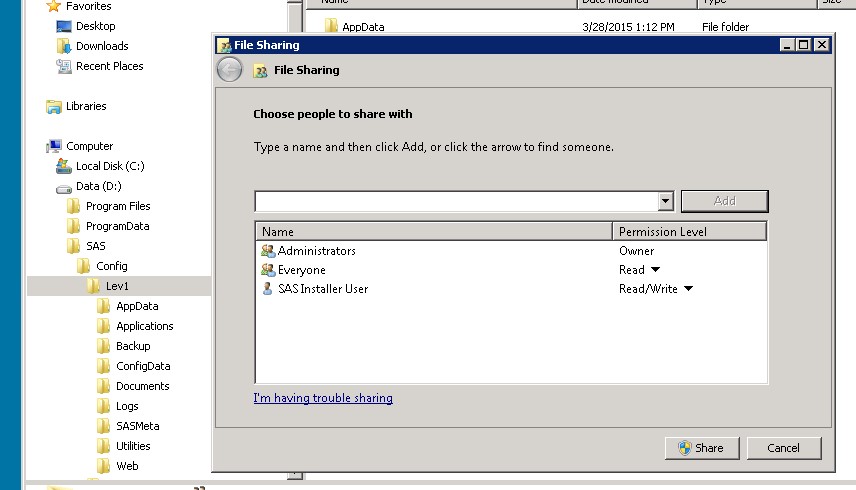
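The middle-tier steps above can be collected into a small Bash sketch. This is a hypothetical helper, not part of the SAS tooling: the names `add_export_line`, `setup_nfs_export`, and `LEV1_EXPORT_LINE` are made up for illustration, it assumes a SysV-style system where the `service` and `chkconfig` commands used above exist, and it must be run as root. Its one design choice is idempotency: re-running it does not add a second copy of the export line to /etc/exports.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that automate the middle-tier NFS export steps.
# Assumes root privileges and a SysV-style init with service/chkconfig.

# Export line from the steps above; adjust the path to your own Lev1 directory.
LEV1_EXPORT_LINE='/opt/sas/config/Lev1 *(rw,sync)'

# add_export_line FILE LINE
# Appends LINE to FILE only if an identical line is not already present,
# so it is safe to run more than once.
add_export_line() {
  local file=$1 line=$2
  touch "$file"
  if ! grep -qxF -- "$line" "$file"; then
    # If the file does not end with a newline, add one before appending.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
      printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
  fi
}

# setup_nfs_export: runs the same commands as the manual steps.
setup_nfs_export() {
  add_export_line /etc/exports "$LEV1_EXPORT_LINE" || return 1
  exportfs -r || return 1                                          # re-export everything in /etc/exports
  service nfs status > /dev/null || service nfs start || return 1  # start NFS only if it is not running
  chkconfig --level 345 nfs on                                     # start NFS at boot in runlevels 3-5
}
```

For example, after saving the functions in a file (the name nfs_export_setup.sh is only an example): `sudo bash -c '. ./nfs_export_setup.sh && setup_nfs_export'`.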

Next, Windows (the metadata server machine):

Here we are creating an ordinary Windows share:

1. In Windows Explorer, right-click the SAS configuration directory (C:\sas\config\Lev1) and select Share with > Specific people.

2. Grant the sasinst user (the SAS installer user ID) Read/Write access.

Here are a couple of screenshots to illustrate:

Last, we go to the “client” machine (compute tier), which will need to access those other two machines.

1. First, create the two directories where the other machines’ files will “reside.” You will need to be the root user to do this:

mkdir /vaserverdir

mkdir /sasbidir

The first will point to the Linux middle tier machine, and the second will point to the Windows machine.

2. As the root user, edit the /etc/fstab file and add the following two lines, the first for the Linux machine and the second for the Windows machine:

midtier01.race.sas.com:/opt/sas/config/Lev1 /vaserverdir nfs tcp,hard,intr,suid,ro 1 1

meta01.race.sas.com:/Lev1 /sasbidir cifs guest,tcp,hard,intr,suid,ro 1 1

(By the way, NFS stands for Network File System, and CIFS stands for Common Internet File System.)

3. Before proceeding, make sure the apm_init.sh script has been run to initialize the APM portion of the data mart. That script creates the configuration file log_definitions.json (found in /opt/sas/config/Lev1/Web/SASEnvironmentManager/emi-framework/Conf/). Open the file in an editor and modify the entries so that they look like the illustration below. This file tells the data mart’s APM ETL process where to find the log files from the various servers, which it needs in order to build the APM portion of the data mart. Here’s a portion of that file, showing one entry from each of the machines in this configuration (middle tier machine, compute tier machine, and metadata server machine):

[
  {
    "name": "SASVA - Logical Workspace Server",
    "hostname": "midtier01.race.sas.com",
    "hostLevRoot": "/opt/sas/config/Lev1",
    "levRoot": "/vaserverdir",
    "contextName": "SASVA",
    "logLocation": "WorkspaceServer/PerfLogs",
    "baseFilename": "arm4_(.+)",
    "operation": "Move"
  },
  {
    "name": "SASApp - Logical SAS DATA Step Batch Server",
    "hostname": "compute01.race.sas.com",
    "hostLevRoot": "/opt/sas/config/Lev1",
    "levRoot": "/opt/sas/config/Lev1",
    "contextName": "SASApp",
    "logLocation": "BatchServer/PerfLogs",
    "baseFilename": "arm4_BatchServer",
    "operation": "Move"
  },
  {
    "name": "SASMeta - Logical Metadata Server (Audit)",
    "hostname": "meta01.race.demo.sas.com",
    "hostLevRoot": "C:\\sas\\config\\Lev1",
    "levRoot": "/sasbidir",
    "contextName": "SASMeta",
    "logLocation": "MetadataServer/AuditLogs",
    "baseFilename": "Audit_SASMeta_MetadataServer",
    "operation": "Move"
  },

Notice the double backslashes in the path on the Windows machine: the backslash is the escape character in JSON, so each one must be doubled. Also notice that for the compute tier server, the log files being accessed are local to that machine, so its “levRoot” value is not one of the mounted directories; it is just a local pathname. This example shows only three entries for illustration; your actual file will have one entry for each SAS server that is deployed. Mine had about 15 entries in total across the three machines.

4. Finally, issue the mount commands (as root) from the “client” machine (the compute tier machine):

mount /vaserverdir

mount /sasbidir

To test whether the mounts are working properly, cd into each new directory; you should see all the files and subdirectories from the other machines.
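The client-side steps (adding the /etc/fstab entries, mounting, and checking the result) can also be sketched in Bash. The function names `fstab_has_mountpoint`, `add_fstab_entry`, and `check_mounts` are hypothetical, not part of SAS. The sketch refuses to add a second /etc/fstab line for a mount point that is already listed, so the setup can be repeated safely, and it replaces the manual cd check with the util-linux `mountpoint` command, which it assumes is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the compute-tier ("client") mount steps.
# Assumes root privileges and the util-linux mountpoint command.

# fstab_has_mountpoint FILE DIR
# Succeeds if a non-comment line in FILE already uses DIR as its mount point (field 2).
fstab_has_mountpoint() {
  awk -v dir="$2" '$1 !~ /^#/ && $2 == dir { found = 1 } END { exit (found ? 0 : 1) }' "$1"
}

# add_fstab_entry FILE LINE
# Appends LINE to FILE unless its mount point (field 2) is already listed,
# which avoids duplicate entries when the setup is repeated.
add_fstab_entry() {
  local file=$1 line=$2 dir
  dir=$(printf '%s\n' "$line" | awk '{ print $2 }')
  touch "$file"
  if ! fstab_has_mountpoint "$file" "$dir"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# check_mounts DIR...
# Reports each directory as mounted and non-empty, or not; fails if any check fails.
check_mounts() {
  local dir status=0
  for dir in "$@"; do
    if mountpoint -q "$dir" && [ -n "$(ls -A "$dir" 2> /dev/null)" ]; then
      printf 'mounted: %s\n' "$dir"
    else
      printf 'NOT mounted or empty: %s\n' "$dir"
      status=1
    fi
  done
  return "$status"
}
```

As root, you might call `add_fstab_entry /etc/fstab '<line>'` once for each of the two /etc/fstab lines shown earlier, run `mkdir -p /vaserverdir /sasbidir`, `mount /vaserverdir`, and `mount /sasbidir`, and finish with `check_mounts /vaserverdir /sasbidir`.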

Your Data Mart is now ready to be built. You can run the ETL processes either manually or allow them to run on the default schedules, and they will now be able to pick up the data from all the SAS log files, on all machines.
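Before running the ETL processes, it can help to confirm that every entry in log_definitions.json points at a directory the compute tier can actually see. The Bash sketch below is a hypothetical helper, not part of the Service Architecture Framework. It assumes `python3` is available to parse the JSON, that the file is a JSON array of objects like the excerpt shown earlier, and that each entry's logs live under `levRoot` joined with `logLocation`; that last point is an inference from the field names, not documented behavior.

```shell
#!/usr/bin/env bash
# Hypothetical check for log_definitions.json on the compute tier.
# Assumes python3 is installed and that each entry's log directory is
# levRoot + "/" + logLocation (inferred from the field names).

# list_log_dirs FILE: print one levRoot/logLocation path per entry.
# Fails with a Python traceback if the JSON is malformed (for example,
# curly quotes pasted from a web page) or a field is missing.
list_log_dirs() {
  python3 - "$1" <<'PY'
import json
import sys

with open(sys.argv[1]) as f:
    for entry in json.load(f):
        print(entry["levRoot"].rstrip("/") + "/" + entry["logLocation"])
PY
}

# check_log_dirs FILE: report each directory; fail if the JSON cannot be
# parsed or any directory is missing.
check_log_dirs() {
  local dir dirs missing=0
  dirs=$(list_log_dirs "$1") || return 1
  while IFS= read -r dir; do
    [ -n "$dir" ] || continue
    if [ -d "$dir" ]; then
      printf 'ok:      %s\n' "$dir"
    else
      printf 'missing: %s\n' "$dir"
      missing=1
    fi
  done <<EOF
$dirs
EOF
  return "$missing"
}
```

For example: `check_log_dirs /opt/sas/config/Lev1/Web/SASEnvironmentManager/emi-framework/Conf/log_definitions.json`. The sketch only checks `levRoot` and `logLocation`; it does not validate `hostLevRoot` or the `baseFilename` patterns.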

[Note: To read Linux files from a Windows platform, you need Samba or an equivalent, which is beyond the scope of this blog post.]

Let me thank my colleagues Mark Thomas and Gilles Chrzaszcz for their expertise in helping me with this work.