JSON is a popular format for data exchange between APIs and some modern databases. It's also used as a way to archive audit logs in many systems. Because JSON can represent hierarchical relationships of data fields, many people consider it to be superior to the CSV format -- although it's certainly not yet universal.

I learned recently that newline-delimited JSON, also called JSONL or JSON Lines, is growing in popularity. In a JSONL file, each line of text represents a valid JSON object -- building up to a series of records. But there is no hierarchical relationship among these lines, so when taken as a whole the JSONL file is not valid JSON. That is, a JSON parser can process each line individually, but it cannot process the file all at once.

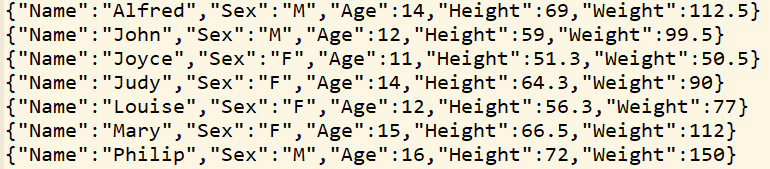

RECOMMENDED READING | How to read JSON data in SAS (SAS Community)In SAS, you can use PROC JSON to create valid JSON files. And you can use the JSON libname engine to parse valid JSON files. But neither of these can create or parse JSONL files directly. Here's a simple example of a JSONL file. Each line, enclosed in braces, represents valid JSON. But if you paste the entire body into a validation tool like JSONLint, the parsing fails.

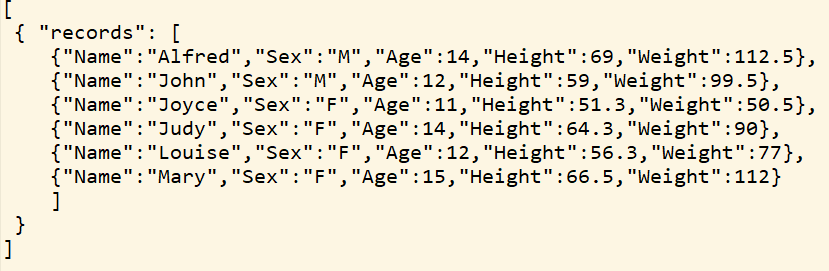

If we needed these records to be true JSON, we need a hierarchy. This requires us to set off the rows with more braces and brackets and separate them with commas, like this:

Creating JSONL with PROC JSON and DATA step

In a recent SAS Support Communities thread, a SAS user was struggling to use PROC JSON and a SAS data set to create a JSONL file for use with the Amazon Redshift database. PROC JSON can't create the finished file directly, but we can use PROC JSON to create the individual JSON object records. Our solution looks like this:

- Use PROC JSON to read each record of the source data set and create a new JSON file. DATA step and CALL EXECUTE can generate these steps for us.

- Using DATA step, post-process the collection of JSON files and append these into a final JSONL file.

Here's the code we used. You need to change only the output file name and the source SAS data set.

/* Build a JSONL (newline-delimited JSON) file */ /* from the records in a SAS data set */ filename final "c:\temp\final.jsonl" ; %let datasource = sashelp.class; /* Create a new subfolder in WORK to hold */ /* temp JSON files, avoiding conflicts */ options dlcreatedir; %let workpath = %sysfunc(getoption(WORK))/json; libname json "&workpath."; libname json clear; /* Will create a run a separate PROC JSON step */ /* for each record. This might take a while */ /* for very large data. */ /* Each iteration will create a new JSON file */ data _null_; set &datasource.; call execute(catt('filename out "',"&workpath./out",_n_,'.json";')); call execute('proc json out=out nosastags ;'); call execute("export &datasource.(obs="||_n_||" firstobs="||_n_||");"); call execute('run;'); run; /* This will concatenate the collection of JSON files */ /* into a single JSONL file */ data _null_; file final encoding='utf-8' termstr=cr; infile "&workpath./out*.json"; input; /* trim the start and end [ ] characters */ final = substr(_infile_,2,length(_infile_)-2); put final; run; |

From what I've read, it's a common practice to compress JSONL files with gzip for storage or faster transfers. That's a simple step to apply in our example, because SAS supports a GZIP method in SAS 9.4 Maintenance 5. To create a gzipped final result, change the first FILENAME statement to something like:

filename final ZIP "c:\temp\final.jsonl.gz" GZIP;

The JSONL format is new to me and I haven't needed to use it in any of my applications. If you use JSONL in your work, I'd love to hear your feedback about whether this approach would create the types of files you need.

5 Comments

It is probably going to be easier to just generate the JSON file and then convert it to JSONL format. As long as the individual lines generated by PROC JSON are not too long it is a pretty simple DATA step to read and convert them.

filename json temp;

filename jsonl temp;

proc json out=json nosastags pretty;

export sashelp.class(obs=5);

run;

data _null_;

infile json truncover;

file jsonl;

input line $32767.;

if line in ('[',']') then return;

if line in ('}','},') then put '}';

else put line @;

run;

I need to send a JSON file to a team to upload into redcap and they are requiring the data be formatted in a specific way and I'm wondering if there is an option to get proc JSON to perform this task? The in statement we're using in the testing stage is below but I have many observations and many more variables to add in production.

in='{"projectId":"999", "data": "[{\"record_id\", \"test91\"},{\"record_id\": \"test57\"}]","serverName":"EXTERNAL"}'

PROC JSON has a lot of flexibility for writing individual objects as well as nested data. I suggest posting your case to the SAS Community and I'm sure someone will have an example for you.

Informative post. I just got my introduction to jsonl, the format some of the NIH tools return data. I realize that this is mostly machine readable, but I find formatting helpful. The following was legal in the website you provided:

[ { "records": [ { "Name" : "Alfred" , "Sex" : "M" , "Age" : 14 } , { "Name" : "John" , "Sex" : "M" , "Age" : 12 } ] } ]I find it curious that many programmers put the comma at the end of a line, where one has to find it with more effort and cannot be sure that it was not supposed to be part of the value instead of a delimiter (metacharacter), instead of the front. I find aligning it to the parenthesis or bracket helpful, too. I write my macro parameters in this fashion.

Kind regards,

Kevin

I know many people who feel as you do about JSON and SQL formatting; they prefer to see the commas at the start of the line. However, the world of "pretty print" code formatters that are built into IDEs or on web sites -- they don't seem to agree. You can read the back-and-forth saga in this VS Code issue topic. I like to use the code formatters in VS Code (or SAS Studio or SAS Enterprise Guide), even if it means a bit of a compromise on the styling. It's just too convenient.