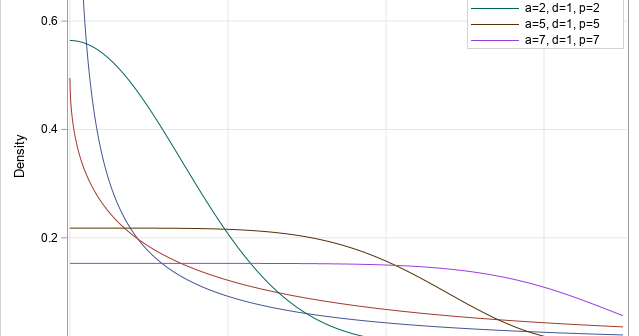

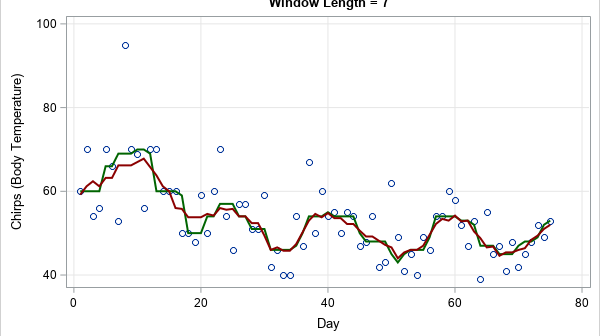

When data contain outliers, medians estimate the center of the data better than means do. In general, robust estimates of location and sale are preferred over classical moment-based estimates when the data contain outliers or are from a heavy-tailed distribution. Thus, instead of using the mean and standard deviation of