"We could have a session on a new 'cool' machine learning technique."

A while ago, when discussing sentiment analysis and how individuals phrase things differently, a colleague of mine dryly noted, "For Jason, everything is cool. The day Matthew describes something as cool, then I'll take notice." In my defence, I think "cool" is a subjective thing. I think that Sebastião Salgado's photography is cool, as is cognitive psychology and 1990s trip hop music. A "cool" day for me can be one spent pottering in my garden. But I'll admit it's not a word I use much in a professional context.

Are machine learning techniques cool?

Back to the starting quote. I might be a bit of an outlier amongst my data science colleagues, but I don’t personally find machine learning techniques or algorithms cool. For me they are essential tools of our trade, to be applied to SAS customer data to allow us to train models. I love photography but have never understood a photographer being more interested in the camera than the photograph. Similarly, I'm more interested in the business decision than in the algorithm.

I don’t know whether Paul Malley, another of my SAS colleagues, thinks algorithms are cool. But as he explains in this webinar, to realise value from artificial intelligence programs, the focus should be on the ModelOps process to operationalise analytics into a business's decision making.

Defining the business question

I believe the most important part of the analytics life cycle is defining the business question you are asking:

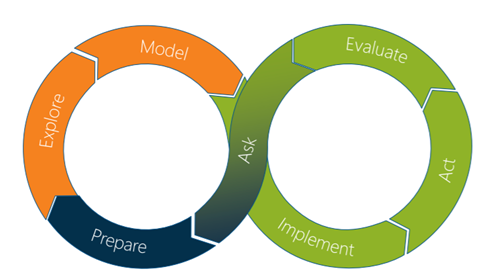

This is a key step in the life cycle – to "Ask" and formulate the business problem. It happens before we start to analyse data or translate the business question into a mathematical representation of the problem that AI can solve. But the knowledge we gain from this step means that we (1) maximise our chances of success and (2) lower the risk of developing a model that can't be embedded into operational decisions.

I have always loved this quote by Jiddu Krishnamurti, a philosopher interested in the nature of inquiry: "If we can really understand the problem, the answer will come out of it, because the answer is not separate from the problem."

The key dimensions of any solution

At its simplest, formulating the business problem involves asking a lot of questions, considering the answers and then asking more questions. I tend to base my questioning around the four key dimensions of any solution:

- Business Strategy

- Processes

- People / Organisation / Culture

- Information Technology / Data landscape

The specific questions will of course be very dependent on the required use case, but there are several common objectives I tend to consider whilst I'm doing this:

1. SMART

The ultimate problem definition you want to agree upon needs to be SMART (Specific, Measurable, Attainable, Realistic and Timely). How will a model improve the decision? What business value will this deliver? What is the impact of wrong decisions? The definition can be developed through the process. Don’t be pressured into starting analysis until the problem is well defined, but equally if the problem is not clear then a good data science team will help the business refine the problem until it is.

2. Subject matter experts

Work with your business's subject matter experts (SMEs) to define and research the problem; the knowledge you can obtain from these conversations is essential. What is the issue? How is it measured? What are potential causes? I have been fortunate enough to work across an incredibly diverse range of problems, including army logistics, marketing recommendation engines, collections strategy development, complaints root cause analysis, health and safety analysis, and analysis of cardiology patient records (that was cool). In every scenario, the experts' knowledge has been key to the project. Having these conversations before you start to analyse the data will always be beneficial, because they provide context for the analysis. And an ongoing relationship with the SMEs will be critical as you start to uncover findings.

3. Process and people

Discuss the business process and people around the decision to be improved. What happens before and after the decision? How will a "better" decision impact this? Are factors like remuneration or people/cultural issues likely to impact the decision?

4. Check hypotheses

Discuss any hypotheses in detail with the SMEs. In my experience, it’s a big red flag if SMEs don’t have any hypothesis about the business problem. Scenarios where the brief to the data science team is "we have lots of data, just analyse it and tell us something about our business that we don’t know" are to be avoided.

5. Is it new?

Is the project new or is the requirement to evolve an existing solution? If the latter, what information is already available, both from the original solution and any monitoring that has been done of its performance? If the existing solution is not being monitored, then consider doing this using the SAS Model Manager framework or SAS Open Model Manager before going further.

6. Consider the data

Alongside defining the business problem, you can start to consider the data landscape available and whether there is enough relevant data in a useable format. Where does the data reside? What is the latency of the data when the decision is made? What data quality issues exist? Can any additional relevant data be sourced? Post-decision, what additional relevant outcome data is available? At this stage, you will also want to conduct some initial exploratory analysis of the data. If it’s a computer vision problem, then look at some images. If it's a natural language processing (NLP) problem, then read some documents. Discuss both with the SMEs.

7. Consider the model

When the business problem is clear, then you can start to consider:

- How will the model impact the business decision? Is it a totally automated scenario where the model will make the decision or an advisory decision with a human making the ultimate decision considering the insight from a model?

- How would a model be used to improve the decision? It's worth examining the business impact of wrong decisions. Assess the impact of both false positives and false negatives.
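To make the point about false positives and false negatives concrete, here is a minimal Python sketch of how unequal error costs might feed into choosing a decision threshold. Everything in it is an assumption for illustration: the cost figures, the `DecisionCosts` and `best_threshold` names, and the idea that the model produces a score between 0 and 1. The real costs would come from conversations with the business, not from the data science team.

```python
from dataclasses import dataclass


@dataclass
class DecisionCosts:
    """Hypothetical business cost of each type of wrong decision."""
    false_positive: float  # e.g. cost of acting when no action was needed
    false_negative: float  # e.g. cost of missing a case that needed action


def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for binary outcomes."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for a, p in zip(actual, predicted):
        if p and a:
            counts["tp"] += 1
        elif p and not a:
            counts["fp"] += 1
        elif not p and a:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def expected_cost(actual, predicted, costs):
    """Total cost of the wrong decisions only; correct decisions cost nothing here."""
    c = confusion_counts(actual, predicted)
    return c["fp"] * costs.false_positive + c["fn"] * costs.false_negative


def best_threshold(actual, scores, costs, thresholds):
    """Pick the candidate threshold with the lowest total cost of errors."""
    return min(
        thresholds,
        key=lambda t: expected_cost(actual, [s >= t for s in scores], costs),
    )
```

With a missed case assumed to be ten times as costly as a false alarm, a sketch like this will tend to favour a lower threshold than a plain accuracy measure would, which is exactly the kind of trade-off worth agreeing with the SMEs up front.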

8. FATE

As discussed by my colleague Iain Brown, the ethical FATE framework should be considered.

9. Commitment

How committed are stakeholders to applying data science to solve this problem? What does the impact vs. effort for the solution look like?

10. Consider reframing

Finally, always consider whether reframing the problem differently might help. You might start with a business requirement for a churn model to reduce the number of customers leaving an organisation. But is there a risk that if you target customers to ask them to stay, this might backfire and prompt some of them to leave? Instead, maybe consider a model to predict which customers are likely to respond positively to an offer to stay, or some root cause analysis to examine why these customers are leaving.
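One way to check whether a retention offer helps or harms is to compare customers who received it with a comparable group who did not. The Python sketch below is only an illustration under strong assumptions: it presumes a randomised holdout group exists, and the record layout, segment labels and the `uplift_by_segment` name are all hypothetical rather than anything from a SAS product.

```python
from collections import defaultdict


def uplift_by_segment(records):
    """Retention rate of contacted customers minus that of the holdout, per segment.

    records: iterable of (segment, treated, stayed) tuples, where `treated`
    means the customer received the retention offer (assumed to be assigned
    at random) and `stayed` means they did not churn.
    """
    # counts[segment][treated] -> [number who stayed, total customers]
    counts = defaultdict(lambda: {True: [0, 0], False: [0, 0]})
    for segment, treated, stayed in records:
        cell = counts[segment][bool(treated)]
        cell[0] += int(stayed)
        cell[1] += 1

    uplift = {}
    for segment, groups in counts.items():
        (t_stayed, t_total), (c_stayed, c_total) = groups[True], groups[False]
        if t_total == 0 or c_total == 0:
            continue  # no comparison possible without both groups
        uplift[segment] = t_stayed / t_total - c_stayed / c_total
    return uplift
```

A negative value for a segment would suggest that contacting those customers is associated with more of them leaving, which is the kind of finding that should send you back to the SMEs before building anything more elaborate.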

Have a look at the SAS AI Pathfinder for more information on how to plan for and implement AI in your own organisation. Ultimately, if the problem is formulated well, this will increase the chances of our models being operationalised as part of a ModelOps process. If this happens, our data science deliverables will impact real business decisions.

Now that’s cool.

1 Comment

Couldn't agree more, Matthew. You have summarised this brilliantly. Often the hype of fancy techniques is oversold, while understanding the business problem and using commercial acumen in formulating a solution continue to remain popular with many business users.

I am waiting to read articles where folks have predicted COVID-19 well in advance.