Companies are increasingly recognising that getting value from the Internet of Things (IoT) requires analytics on the edge – specifically, artificial intelligence-powered analytics. It is, however, not as simple as that. Getting the most out of analytics on the edge requires the right platform. Based on my experience with analytics, AI and/or decision models on the edge, I have outlined a number of important requirements for your platform.

Data management

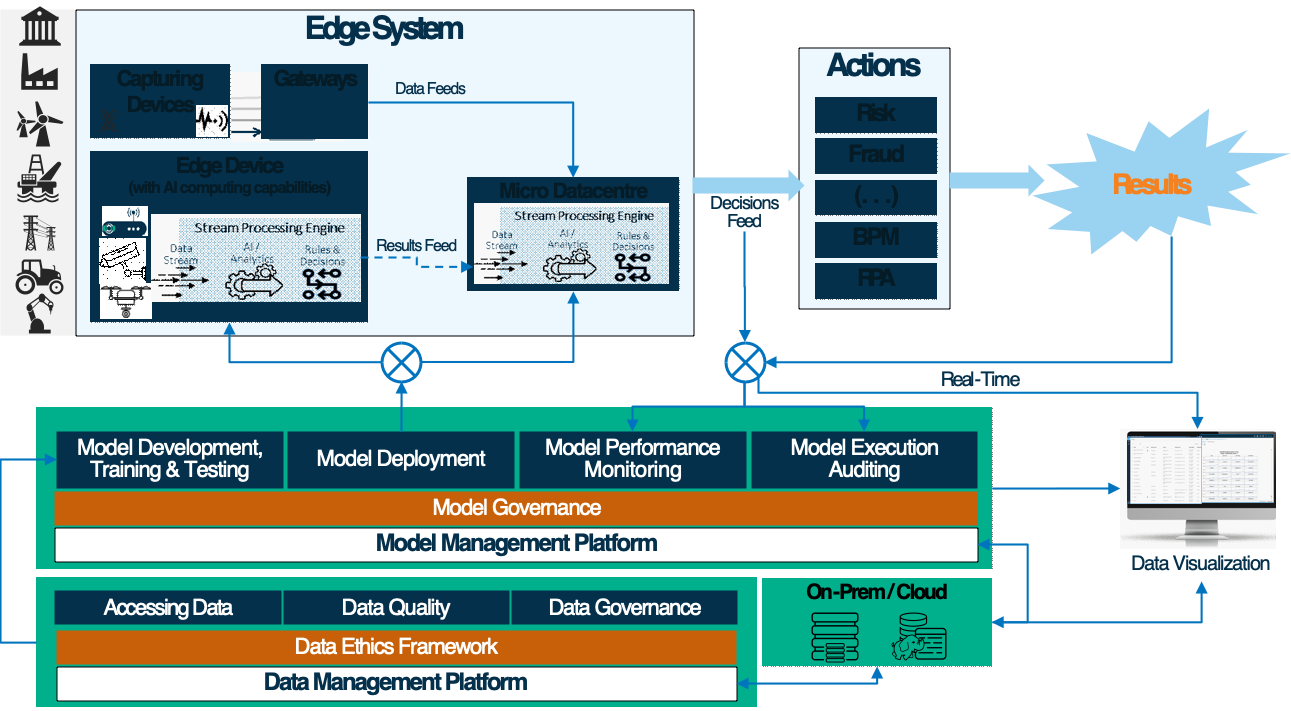

Delivering trustable decisions requires good data management. This includes accessing or capturing data, data quality (including cleansing, standardisation and remediation), and the overall governance of the data. Governance in turn means good data security, data (object) lineage, data stewardship, data compliance, and traceability. All of these also need to be supported by a data ethics framework.

Model development, training and testing

An important part of using AI algorithms is selecting the data sets for development, training and testing. The models need to perform ethically, and as we all know, garbage in means garbage out. Models are initially designed, trained and tested on past data, but they need to be continuously adjusted using the results obtained during their everyday use. Ideally, the models should be able to self-learn and self-adjust on the edge. But this needs to be auditable to ensure that they are still doing the right thing.

Model governance and deployment

Strong central governance with remote deployment capabilities is critical. Organisations can eventually expect to have thousands of analytical, AI or decision models and thousands (if not millions) of edge points. Many of these are likely to be in remote locations. One or more models will almost certainly be embedded in an event stream processing flow, running in parallel on the same edge point. Governance is key to being able to trust the results from AI-based systems and to ensure auditability and accountability.

Model performance monitoring

It is also necessary to be able to monitor model performance centrally. Organisations must be able to change the model, either because business requirements have changed or because the model is no longer responding appropriately to the current situation. This is key for delivering trustable decisions, whether or not the model is self-learning. Model execution data should be captured centrally for future development and for auditing decisions.
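As a minimal sketch of what central monitoring could look like, the Python below compares a model's rolling accuracy against a baseline and flags the model for review when it drops too far. The class name, the `tolerance` and `window` parameters, and the use of accuracy as the metric are all assumptions made for illustration; a real platform would choose metrics that suit each model.

```python
from collections import deque


class ModelPerformanceMonitor:
    """Hypothetical monitor: tracks rolling accuracy for one deployed model."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline_accuracy = baseline_accuracy  # accuracy measured at sign-off
        self.tolerance = tolerance                  # allowed drop before review
        # True where the prediction matched the observed outcome;
        # only the most recent `window` results are kept.
        self.outcomes = deque(maxlen=window)

    def record(self, predicted, actual):
        """Store whether one prediction matched what actually happened."""
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True when rolling accuracy falls below baseline minus tolerance."""
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline_accuracy - self.tolerance
```

Each edge point could report its outcomes to a monitor like this, and a `needs_review()` result of `True` would prompt a person (or a governed process) to retrain or replace the model.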

Model execution auditing

AI/analytic models will be triggering decisions that might affect individuals, communities or society more generally. It is therefore essential to make sure that we have ethical AI. All models should be auditable so that it is possible to identify the input data, the given score and all the constraints used to model the decision, as well as what actions were triggered. This closes the loop feeding back to model performance and governance and ensures that decisions can be trusted.
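To make this concrete, here is a small Python sketch of an audit record that captures the four things listed above: the input data, the score, the constraints and the actions triggered. The field names, the `decide_and_audit` helper, the threshold rule and the `raise_maintenance_alert` action are hypothetical and only there to illustrate the idea.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionAuditRecord:
    """One auditable decision; every field name here is illustrative."""
    model_id: str
    model_version: str
    input_data: dict
    score: float
    constraints: dict
    actions_triggered: list
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialise the record so it can be sent to a central audit store."""
        return json.dumps(asdict(self), sort_keys=True)


def decide_and_audit(model_id, model_version, input_data, score, threshold):
    """Apply a simple threshold rule (an assumption) and return the audit record."""
    actions = ["raise_maintenance_alert"] if score >= threshold else []
    return DecisionAuditRecord(
        model_id=model_id,
        model_version=model_version,
        input_data=input_data,
        score=score,
        constraints={"threshold": threshold},
        actions_triggered=actions,
    )
```

Because the record stores the model version alongside the inputs and constraints, an auditor can later reconstruct why a given action was, or was not, triggered.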

Subsecond event stream processing

Running at the edge requires handling data in motion, or event stream data. A single event is typically not informative, but a story emerges when it is combined with other events. These events could be a potential machine failure, a case of fraud, a cyberattack, an opportunity to drive an existing sale or a chance to provide a better customer experience. The event stream processing engine must be able to capture events, assess them, score them and then trigger decisions – and all this in less than a second.
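The capture, assess, score and trigger steps can be sketched in a few lines of Python. This is not how any particular event stream processing engine works; it is a simplified illustration that assumes events arrive in timestamp order, and the class name, window length, `limit` and `min_hits` values are all made up for the example. It shows how several events inside a short time window tell a story that a single event does not.

```python
from collections import deque


class SlidingWindowScorer:
    """Hypothetical scorer: alerts when enough high readings fall in one window."""

    def __init__(self, window_seconds=10.0, limit=80.0, min_hits=3):
        self.window_seconds = window_seconds  # how far back events still count
        self.limit = limit                    # a reading above this is a "hit"
        self.min_hits = min_hits              # hits needed to trigger a decision
        self.events = deque()                 # (timestamp, value), oldest first

    def process(self, timestamp, value):
        """Capture one event, then assess and score the current window."""
        self.events.append((timestamp, value))
        # Drop events that have fallen out of the time window.
        while self.events and timestamp - self.events[0][0] > self.window_seconds:
            self.events.popleft()
        # Score: count readings in the window that exceed the limit.
        hits = sum(1 for _, reading in self.events if reading > self.limit)
        # Trigger: return a decision only when the score is high enough.
        return "alert" if hits >= self.min_hits else None
```

Each call does a small, bounded amount of work on in-memory data, which is the kind of design that makes subsecond responses on an edge point plausible.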

Real-time data visualisation

When processing automated decisions at the edge, real-time monitoring and reporting are recommended. This supports model performance monitoring and model execution auditing. It also means that when an anomaly is highlighted, it is possible to drill down to understand the details, and particularly the root causes. The monitoring might be deployed regionally through mini data centres, for example, which would feed a central system. Alternatively, edge points with AI computing capacity could send the relevant data directly to a central system. At the central data centre, whether in the cloud or on-premises, data can be combined with other data sources to augment the business value and to better understand the business moments and therefore the deployed models.

Getting it right

There is much to consider when trying to get value from deploying AI and analytics at the edge to manage IoT data. However, getting the right platform is the most important element. From this, everything else will follow.

To learn more, see Edge Computing: Delivering a Competitive Boost in the Digital Economy and download the free research papers How Streaming Analytics Enables Real-Time Decisions and Understanding Data Streams in IoT.
