SAS Administrators are frequently asked to log and report on which users are accessing SAS tables. This functionality is often requested by companies who, for regulatory compliance, need to track who is accessing data. In my next couple of blogs I will show how you can audit data access. In this first blog we will look at logging which users have accessed SAS tables.

The obvious first step in this process is to capture the relevant information. In the simplest case we would want to record the user, the table, and the date and time that the table was accessed. Fortunately, we have at our disposal the SAS Logging Facility. The facility is a flexible, configurable framework that you can use to collect, categorize, and filter events and write them to a variety of output devices.

A little background on the SAS Logging Facility. The facility is controlled using XML files that contain the logging configuration. Each SAS Server points to a logging configuration file (using the LOGCONFIGLOC= option), and the content of the logging configuration file defines what events are logged and the format and destination of the event output.

The configuration is made up of a set of loggers and appenders.

- A logger identifies a category of message which you would like to record.

- An appender is an output destination for a log message, for example a file, database, the console etc.

The Logging facility includes a logger for auditing access to SAS Libraries which supports the ability to “log” who has accessed data in a SAS Library, including SAS tables and database tables accessed via a SAS libname. The AUDIT.DATA.DATASET logger will record who has opened, deleted or renamed a table.

In this blog I will show you how to modify the logging configuration of a stored process server to configure a logger to capture when a user edits, deletes or renames a SAS table, and an appender to format the message and send it to a log file. The SAS Stored Process Server is used as an example; a complete auditing setup would require this configuration for each IOM server.

In the configuration directory for the stored process server <configdir>/Lev1/SASApp/StoredProcessServer/ open the sasv9.cfg and the sasv9_usermods.cfg file and check what file the logconfigloc= option is referencing. This is the file we will edit. In the following example the Environment Manager service architecture framework is configured so the logging configuration points to logconfig.apm.xml.

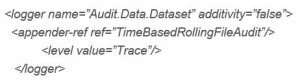

Open the file logconfig.apm.xml and between the <logging:configuration> tags add a new logger. The new logger will route Audit.Data.Dataset messages with a diagnostic level of TRACE and above to the appender named TimeBasedRollingFileAudit. We will define the appender in the next step. (NOTE the message could also be sent to an existing appender). TRACE is the level associated with Audit.Data messages. The levels output by SAS processes from lowest to highest, are TRACE, DEBUG, INFO, WARN, ERROR, and FATAL. Care should be taken when enabling TRACE logging as it can generate a large volume of output to the log which can have performance implications.

Additivity=false means that the event will only be logged to the appender defined here, rather than the appenders associated with the loggers higher up in the hierarchy (e.g. Audit.Data and Audit). For information on all logger parameters click here.

Now we will define the appender. The appender definition determines where the logger messages are written and what format is used to output the messages. The RollingFileAppender class of appenders write messages to a log file whose name contains the current date (for example,SASApp_STPServer_2015-09-02_compute01_25195.log). When the system date changes, messages roll over to a new log file whose name contains the new date (for example, SASApp_STPServer_2015-09-03_compute01_25195.log). The name of the file is determined by the FIleNamePattern parameter. The appender name matches the name specified in the appender-ref tag of the logger definition (TimeBasedRollingFIleAudit).

Click here for the appender xml configuration overview.

The Conversion pattern in the layout tag provides the format for the messages in the log.

When you are done adding the new logger and appender to the XML files, restart the Object Spawner and in SAS Management Console validate that the Stored Process Server is still operational. The log will now track when a user accesses a SAS table. The conversion pattern results in the following line in the log. The log messages shows the date and time that the user meta01\barbara opened the dataset OSSALES.ORDER_FACT, the dataset was opened in INPUT mode which means it was read and not updated.

2015-09-03T18:00:44,311 TRACE [00000004] barbara@meta01 – LOGGER=Audit.Data.Dataset.Open Libref=OSSALES Engine=META Member=ORDER_FACT MemberType=DATA Openmode=INPUT Path=/opt/OrionSales/data

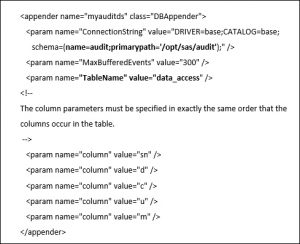

An alternative to writing to a log is to write to a SAS table or database table. This would require a new appender, and for the logger to also refer to the new appender. The xml below defines an appender for a SAS dataset data_access, which will be stored in a library audit which points to the path /opt/sas/audit. The SAS dataset must already exist, and the column parameters in the appender must be the same order that the columns occur in the table.

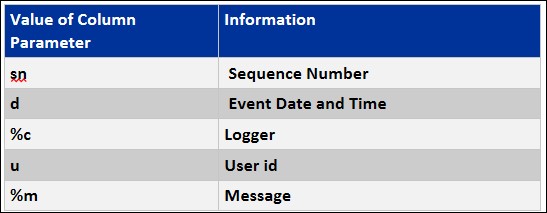

The column parameter values define what is written to the dataset. These are the same as were input to the conversion pattern for the log file.

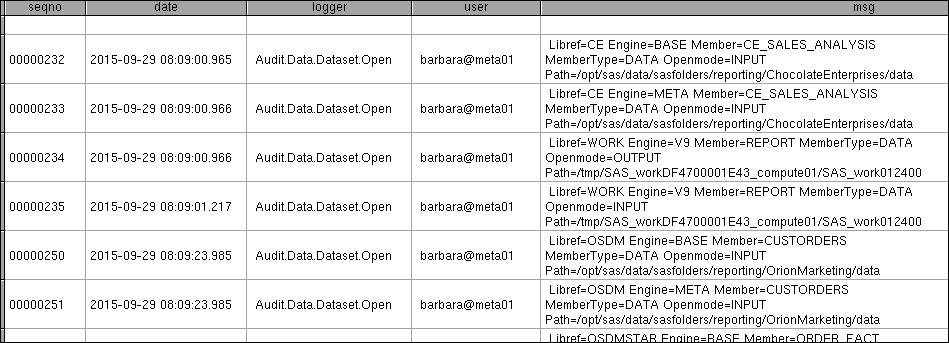

The SAS table would looks like this.

One issue with this approach is that the server locks the table, as a result you cannot read the SAS table while the server is running. You can get around this either by pointing to a table in a SAS/Share library, or by appending to a database.

In the next blog I will examine some methods of reporting on this data.

15 Comments

Hi All,

Just thinking, will this also capture odbc connections that are made using SQL pass-thru, for instance:

proc sql;

CONNECT TO odbc as cdwaasrc (dsn=CDWxxx user=CDW_xx pw='xxxxx); create table livepolicy as select * from connection to cdwaasrc ( select sourcesystemcode, contractnumber from contract where type in ('P','L') and status='GI' and terminationdate is null and trunc(creationdate)<sysdate-395 and trunc(maintenancedate)<sysdate-395

);

disconnect from cdwaasrc;

quit;

in above instance will it populate anything for CDWxxx ?

Hi Mahesh,

It has been a while since I looked at this but from memory it does not support odbc connections. It does support "audit access to SAS libraries, including database tables that have been assigned by a LIBNAME statement". The doc includes a note:

Audit messages are not available for in-database tables, filerefs, or SAS cubes.

https://go.documentation.sas.com/doc/en/pgmsascdc/9.4_3.5/logug/n0t22zxpm45zpyn16235oxno43u2.htm

Gerry

Hi, what would be the minimum logging level (trace, debug, info etc.) to catch CRUD operations done by users on SAS Datasets?

Unfortunately you cannot really achieve the auditing of all CRUD operations with this logger. As noted in the blog "the AUDIT.DATA.DATASET logger will record who has opened, deleted or renamed a table." So we are missing created and updated.

Hi Gerry,

I am looking to use this on the workspace server also. I am wanting to output this into a data set as you have suggested above. You mention it will be stored in a library audit which points to the path /opt/sas/audit. Where is this path being specified? I want to make sure I am creating it in a readable area so may want to repoint this but cannot see where I would do this.

Peter, looks like I have an error in the xml. The path should be included in the schema as in the corrected version below.

Sorry about that.

Gerry

<appender name="myauditds" class="DBAppender"> <param name="ConnectionString" value="DRIVER=base;CATALOG=base; schema=(name=audit;primarypath='/opt/sas/audit');" /> <param name="MaxBufferedEvents" value="300" /> <param name="TableName" value="data_access" /> <!-- The column parameters must be specified in exactly the same order that the columns occur in the table. --> <param name="column" value="sn" /> <param name="column" value="d" /> <param name="column" value="c" /> <param name="column" value="u" /> <param name="column" value="m" /> </appender>Hi Gerry, is it just limited to stored process server or I could implement it at the Workspace server ?

Yes, you can do the same thing for a workspace server.

Hi all,

Many thanks for all the information. One question, I am wondering if I can use SAS facility logging with my ucrrent SAS installation. Can I use the same license?

Regards,

John.

You are welcome John. You do not need any additional license to use the SAS logging facility.

This is a very interesting subject for me. I had a task which involved this.

I setup an Appender in logconfig.xml in order to write events to an Oracle Table.

I created a table with 4 rows in Oracle and after I restart the metadata server no events are loaded in Oracle. In metadata log I see no error message, even so no data are loaded in the table.

In what log can I check more details?

Hi Gerry,

This is an excellent article. I just have one doubt. Is it possible to configure a logger to capture when a user accesses a dataset also. What changes should be done on the configuration file.

Gerry, keep on going with those next posts I am very interested.

There is something bothering me, that is:

-

"4.Access to and security of the data is a responsibility of the data layer, and not managed by applications" SAS is an application when used with a rdbms/hadoop.

- You already made a note on carefully checking the application-server settings for the ARM settings. Seeing user to bypass those and instead of those of the SASapp using the sashome/Foundation one. The use of predefined scripts (not servers) is hard to accept

- Using more sources like a RDBMS (or hadoop) with another interface than the lockedin by SAS is bypassing all SAS auditing options. The requirement is normally to have a full guaranteed access logging for auditing/traceability.

Thanks for your comment.

You make a good point, I did not really address the ability to bypass the auditing settings.

To prevent that you would need a combination of approaches including a robust operating system

security model and setup, database security, metadata security and perhaps some other SAS

features such as "lockdown".

Excellent answer wiht the correct order Gerry.

First all that mostly classified at those difficult things as OS, database, sas metdata than those other specific options.

It is very frustrating that order is often ignored (too difficult to be executed) and immediately proceeding to those features as lockdown metadatabound. The result can be a functionally disorder for analytics users.

I can see the value of those lockdown / metadatabound when SAS is setup in a DMZ zone for public access in a very restricted way for information consumers.

That is the distribution part wen the analytics in house is working fine.