A challenge for you – do a Google search for “Hadoop Security” and see what types of results you get. You’ll find a number of vendor-specific pages talking about a range of projects and products attempting to address the issue of Hadoop security. What you’ll soon learn is that security is quickly becoming a big issue for all organizations trying to use Hadoop.

Many of you may already be planning or involved in Hadoop deployments involving SAS software. As a result, technical architects at SAS often get questions around security, particularly around end-point security capabilities. While there are many options for end-point and user security in the technology industry, the Hadoop Open Source Community is currently leading with a third-party authentication protocol called Kerberos.

Four key practices for securing a SAS-Hadoop environment

To gain access to a fully operational and secure Hadoop environment, it is critical to understand the requirements, preparation and process around Kerberos enablement with key SAS products. There are four overall practices that help ensure your SAS-Hadoop connection is secure and that SAS performs well within the environment. Today’s post is the first in a series that will cover the details of each of these practices:

- Understand the fundamentals of Kerberos authentication and the best practices promoted by key Hadoop providers.

- Simplify Kerberos setup by placing SAS and Hadoop within the same topological realm.

- Ensure Kerberos prerequisites are met when installing and configuring SAS applications that interact with Hadoop.

- When configuring SAS and Hadoop jointly in a high-performance environment, ensure that all SAS servers are recognized by Kerberos.

What is Kerberos?

The Kerberos authentication protocol works over TCP/IP networks and acts as a trusted arbitration service, enabling:

- a user account to access a machine

- one machine to access different machines, data and data applications on the network.

Put in the simplest terms, Kerberos can be viewed as a ticket for a special event that is valid for that event only or for a certain time period. When the event is over or the time period elapses, you need a new ticket to obtain access.
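The ticket analogy can be made concrete with a very small sketch. The `Ticket` class, its field names and the ten-hour lifetime below are illustrative assumptions, not part of any Kerberos library; the point is only that a ticket names one service and stops being valid after a fixed time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Ticket:
    """Toy stand-in for a Kerberos ticket (hypothetical, for illustration only)."""
    principal: str        # who the ticket was issued to
    service: str          # the one "event" the ticket admits you to
    expires_at: datetime  # after this moment a new ticket is needed

    def is_valid_for(self, service: str, now: datetime) -> bool:
        # Honoured only for the named service and only before expiry.
        return service == self.service and now < self.expires_at


issued = datetime(2024, 1, 1, 9, 0)
ticket = Ticket("alice@EXAMPLE.COM", "hdfs", issued + timedelta(hours=10))

print(ticket.is_valid_for("hdfs", issued + timedelta(hours=1)))   # within lifetime
print(ticket.is_valid_for("hive", issued + timedelta(hours=1)))   # different service
print(ticket.is_valid_for("hdfs", issued + timedelta(hours=11)))  # lifetime elapsed
```

Only the first check succeeds; the other two fail because the ticket is for a different service or has expired.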

How difficult is Hadoop and Kerberos configuration?

Creating a Kerberos Key Distribution Center is the first step in securing the Hadoop environment. At the end of this blog post, I’ve listed sources for standard instructions from the top vendors of Hadoop. Following their instructions will result in creating a new Key Distribution Center, or KDC, that is used to authenticate both users and server processes specifically for the Hadoop environment.

For example, with Cloudera 4.5, the management tools include all the required scripts to configure Cloudera to use Kerberos. Simply running these scripts after registering an administrator principal will result in Cloudera using Kerberos. This process can be completed in minutes after the Kerberos Key Distribution Center has been installed and configured.
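To give a feel for what such scripts change, the sketch below builds the two standard Apache Hadoop `core-site.xml` properties that switch a cluster from simple to Kerberos authentication. The property names come from Apache Hadoop itself; whether the Cloudera tooling sets exactly these, and what else it sets (principals, keytabs and so on), is an assumption here, and the helper `build_core_site` is purely illustrative.

```python
import xml.etree.ElementTree as ET


def build_core_site(properties):
    """Build a Hadoop-style <configuration> element from a name -> value mapping."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return root


# Standard Hadoop security switches; a real secure cluster needs many more
# settings (service principals, keytab locations) that are omitted here.
kerberos_properties = {
    "hadoop.security.authentication": "kerberos",  # the non-secure default is "simple"
    "hadoop.security.authorization": "true",       # enable service-level authorization
}

core_site = build_core_site(kerberos_properties)
xml_text = ET.tostring(core_site, encoding="unicode")
print(xml_text)
```

In practice the vendor management tools generate and distribute this configuration for you, so the snippet is for orientation rather than something to apply by hand.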

How does Kerberos authenticate end-users?

A non-secure Hadoop configuration relies on the client-side libraries to send the user's identity, as reported by the client operating system, as part of the protocol. Users are not actually authenticated, but this method is sufficient for many deployments that rely on physical security. Authorization checks through ACLs and file permissions are still performed against the client-supplied user ID.

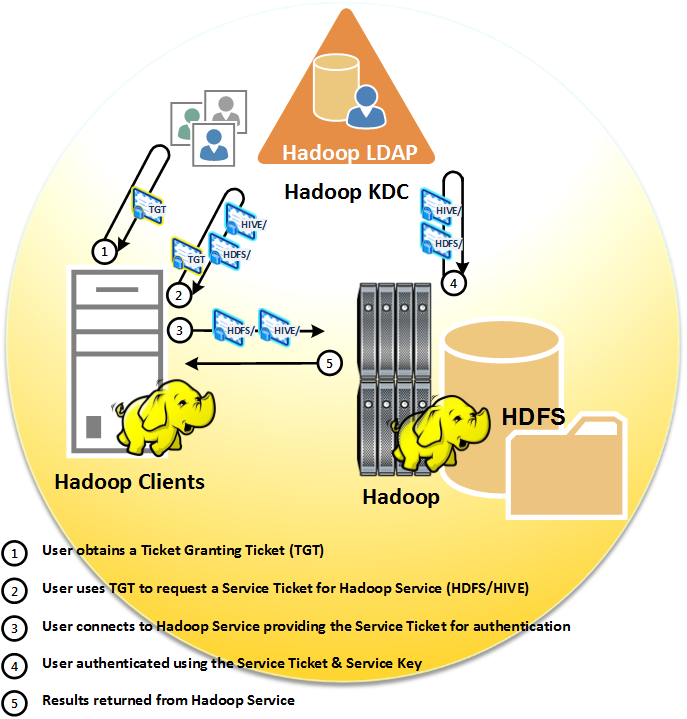

Once Kerberos is configured, Kerberos authentication is used to validate the client-side credentials. This means the client must request a Service Ticket valid for the Hadoop environment and submit this Service Ticket as part of the client connection. Kerberos provides strong authentication where tickets are exchanged between client and server and validation is provided by a trusted third party in the form of the Kerberos Key Distribution Center.

Step 1. The end user obtains a Ticket Granting Ticket (TGT) through a client interface.

Step 2. Once the TGT is obtained, the end-user client application requests a Hadoop Service Ticket. These two steps don’t always occur in this sequence because there are different mechanisms that can be used to obtain the TGT. Some implementations will require users to run a kinit command after accessing the machine running the Hadoop clients. Other implementations will integrate the Kerberos configuration in the host operating system setup. In this case, simply logging into the machine running the Hadoop clients will generate the TGT.

Step 3. Once the user has a Ticket Granting Ticket, the client requests the Service Ticket (ST) corresponding to the Hadoop Service the user is accessing. The ST is then sent as part of the connection to the Hadoop Service.

Step 4. The corresponding Hadoop Service must then authenticate the user by decrypting the ST using the Service Key exchanged with the Kerberos Key Distribution Center. If this decryption is successful, the end user is authenticated to the Hadoop Service.

Step 5. Results are returned from the Hadoop service.
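The steps above can be sketched as a toy model. This is not real Kerberos: the class and function names are hypothetical, the user's initial password check is skipped, and an HMAC tag stands in for ticket encryption because the Python standard library has no symmetric cipher. What it does show is the trust pattern: the Hadoop service accepts a ticket only if it was produced with the key that service shares with the KDC.

```python
import hashlib
import hmac
import json
import os


def seal(key: bytes, payload: dict) -> bytes:
    """Tag the payload with an HMAC (toy substitute for encrypting a ticket)."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest().encode()
    return tag + b"." + body


def unseal(key: bytes, blob: bytes) -> dict:
    """Check the tag with `key` and return the payload, or raise ValueError."""
    tag, body = blob.split(b".", 1)
    expected = hmac.new(key, body, hashlib.sha256).hexdigest().encode()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ticket was not issued with this key")
    return json.loads(body)


class ToyKDC:
    def __init__(self):
        self.tgs_key = os.urandom(32)  # known only to the KDC
        self.service_keys = {}         # service name -> key shared with that service

    def register_service(self, name: str) -> bytes:
        self.service_keys[name] = os.urandom(32)
        return self.service_keys[name]

    def issue_tgt(self, user: str, now: int, lifetime: int = 36000) -> bytes:
        # Step 1: the user obtains a Ticket Granting Ticket.
        return seal(self.tgs_key, {"user": user, "expires": now + lifetime})

    def issue_service_ticket(self, tgt: bytes, service: str, now: int,
                             lifetime: int = 3600) -> bytes:
        # Steps 2-3: a valid TGT is exchanged for a Service Ticket.
        claims = unseal(self.tgs_key, tgt)
        if now >= claims["expires"]:
            raise ValueError("TGT expired")
        ticket = {"user": claims["user"], "service": service,
                  "expires": now + lifetime}
        return seal(self.service_keys[service], ticket)


class ToyHadoopService:
    def __init__(self, name: str, key: bytes):
        self.name, self.key = name, key

    def handle(self, service_ticket: bytes, now: int) -> str:
        # Step 4: validate the ticket with the key shared with the KDC.
        claims = unseal(self.key, service_ticket)
        if claims["service"] != self.name or now >= claims["expires"]:
            raise ValueError("service ticket not valid here")
        # Step 5: return results to the authenticated user.
        return f"results for {claims['user']}"


now = 1_700_000_000  # fixed timestamp so the run is repeatable
kdc = ToyKDC()
hdfs = ToyHadoopService("hdfs", kdc.register_service("hdfs"))

tgt = kdc.issue_tgt("alice", now)
st = kdc.issue_service_ticket(tgt, "hdfs", now)
print(hdfs.handle(st, now))
```

A ticket sealed with any other key, or presented after its lifetime, is rejected by `handle`, which mirrors the failed decryption described in Step 4.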

Where to find more information about Hadoop and Kerberos

Providers such as Cloudera and Hortonworks have general guidelines around the role of Kerberos Authentication within their product offerings. You can access their suggested best practices via some of the key technology pages on their websites:

11 Comments

Keeping SAS and Hadoop in the same realm is a good idea to simplify configuration and setup, but there are other important considerations as well.

Performance is one important consideration. As your cluster grows, the volume of AS and TGS interactions will also grow; consider this volume when deciding whether to use a single KDC for Hadoop and SAS. Troubleshooting Kerberos-related issues will need to engage both the Hadoop and SAS admin teams. Another alternative may be to have a local KDC for each and use cross-realm trust. I haven't tried this and am not sure about the complexities involved, but it may be a good option if it works. I also explore these topics and have written about implementing Kerberos in a Hortonworks cluster at http://bit.ly/1LLAUJZ

Very nice article. Here are my 2 cents.

Kerberos strong authentication relies on encryption. Different strengths of encryption can be used with the Kerberos tickets. Enabling Java to process the 256-bit AES TGT requires the Java Cryptography Extension (JCE) Unlimited Strength Jurisdiction Policy Files. The Unlimited Strength Jurisdiction Policy Files are required by the SAS Private Java Runtime Environment. This environment is used by SAS Foundation for issuing a LIBNAME statement to the secure Hadoop environment.

One of the most popular platforms for Big Data processing is Apache Hadoop. Originally designed without security in mind, Hadoop’s security model has continued to evolve. Its rise in popularity has brought much scrutiny, and as security professionals have continued to point out potential security vulnerabilities and Big Data Security risks with Hadoop, this has led to continued security modifications to Hadoop. There has been explosive growth in the “Hadoop security” marketplace, where vendors are releasing “security-enhanced” distributions of Hadoop and solutions that complement Hadoop security. This is evidenced by such products as Cloudera Sentry, IBM InfoSphere Optim Data Masking, Intel's secure Hadoop distribution, DataStax Enterprise, DataGuise for Hadoop, Protegrity Big Data Protector for Hadoop, Revelytix Loom, Zettaset Secure Data Warehouse, and the list could go on. At the same time, Apache projects such as Apache Accumulo provide mechanisms for adding additional security when using Hadoop. Finally, other open source projects, such as Knox Gateway (contributed by HortonWorks) and Project Rhino (contributed by Intel), promise that big changes are coming to Hadoop itself. https://intellipaat.com/

I would like to add that, as a security specialist for 17 years, I see information security provisioning and hardening for all information systems as probably one of the most important best practices for ensuring system safety and security. From Windows Servers to Linux distributions, Apache and IIS web servers, and much more, these platforms need to have vendor-supplied defaults removed and insecure services disabled, and should not have shared IDs for privileged access. Securing systems is incredibly important for regulatory compliance, especially for PCI DSS, HIPAA and other compliance mandates, so keep this in mind also.
