If you have been following this thread for a while, you may have noticed a theme that I keep returning to: data virtualization. I am trying to close a potential gap in the integration plan, namely understanding the performance requirements for data access (especially when the application and database services are expected

To a great extent, the data manipulation layer of our multi-tiered master data services mimics the capabilities of the application services discussed in the previous posting. However, the value of segregating the actual data manipulation from the application-facing API is that the latter can be developed within the consuming application’s

Last time we began discussing a strategy for transitioning applications to master data services. At the top of our master data services stack, we have the external- or application-facing capabilities. But first, let’s review the lifecycle of data about entities, namely: creating a new entity record, reading
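The layering in these posts (an application-facing API sitting on top of a separate data manipulation layer) and the first two lifecycle steps (creating and reading an entity record) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the author's design: the class names `DataManipulationLayer` and `ApplicationFacingAPI`, their methods, and the in-memory dictionary standing in for the master repository are all hypothetical.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class EntityRecord:
    """Hypothetical master entity record: a unique ID plus attribute values."""
    entity_id: int
    attributes: Dict[str, str]


class DataManipulationLayer:
    """Lower tier (hypothetical): performs the actual record operations.

    An in-memory dict stands in for the master data repository.
    """

    def __init__(self) -> None:
        self._store: Dict[int, EntityRecord] = {}
        self._ids = itertools.count(1)  # source of unique identifiers

    def insert(self, attributes: Dict[str, str]) -> EntityRecord:
        record = EntityRecord(next(self._ids), dict(attributes))
        self._store[record.entity_id] = record
        return record

    def fetch(self, entity_id: int) -> Optional[EntityRecord]:
        return self._store.get(entity_id)


class ApplicationFacingAPI:
    """Upper tier (hypothetical): the interface consuming applications call.

    All data manipulation is delegated to the lower tier, so this API can
    evolve with the consuming application without touching repository logic.
    """

    def __init__(self, dml: DataManipulationLayer) -> None:
        self._dml = dml

    def create_entity(self, **attributes: str) -> int:
        # Lifecycle step: create a new entity record and return its ID.
        return self._dml.insert(attributes).entity_id

    def read_entity(self, entity_id: int) -> Optional[Dict[str, str]]:
        # Lifecycle step: read the record, returning a copy so callers
        # cannot mutate the master copy directly.
        record = self._dml.fetch(entity_id)
        return dict(record.attributes) if record is not None else None


api = ApplicationFacingAPI(DataManipulationLayer())
cid = api.create_entity(name="Acme Corp", city="Boston")
print(api.read_entity(cid))  # {'name': 'Acme Corp', 'city': 'Boston'}
```

Keeping the two tiers separate means the upper one can be shaped to each consuming application's needs while the lower one stays shared.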

In the last two series of posts we have discussed the challenges of integrating applications with a maturing master data management (MDM) repository and index, along with an approach intended to let existing applications adopt MDM easily and incrementally. This approach involves developing a tiered architecture

Last time we discussed two different models for syndicating master data. One model was replicating copies of the master data and pushing them out to the consuming applications, while the other was creating a virtual layer on top of the master data in its repository and funneling access through a
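The contrast between the two syndication models can be illustrated with a small sketch. It assumes a toy in-memory repository, and the names `MasterRepository`, `ReplicatingSyndicator`, and `VirtualLayer` are hypothetical: replication pushes snapshot copies to consumers, while the virtual layer funnels every read back to the single master copy.

```python
from typing import Dict, List

Record = Dict[str, str]


class MasterRepository:
    """Hypothetical master data repository holding the master records."""

    def __init__(self) -> None:
        self.records: Dict[str, Record] = {}


class ReplicatingSyndicator:
    """Model 1: replicate copies of master data and push them to consumers."""

    def __init__(self, repo: MasterRepository) -> None:
        self.repo = repo
        self.consumers: List[Dict[str, Record]] = []

    def subscribe(self) -> Dict[str, Record]:
        local_copy: Dict[str, Record] = {}
        self.consumers.append(local_copy)
        return local_copy

    def push(self) -> None:
        # Each consumer receives its own snapshot, which goes stale
        # as soon as the master changes and stays stale until the next push.
        for local_copy in self.consumers:
            local_copy.clear()
            local_copy.update({k: dict(v) for k, v in self.repo.records.items()})


class VirtualLayer:
    """Model 2: no copies; every read is funneled through to the repository."""

    def __init__(self, repo: MasterRepository) -> None:
        self.repo = repo

    def get(self, key: str) -> Record:
        return dict(self.repo.records[key])


repo = MasterRepository()
repo.records["C1"] = {"name": "Acme"}
replicator = ReplicatingSyndicator(repo)
local = replicator.subscribe()
replicator.push()
virtual = VirtualLayer(repo)

repo.records["C1"] = {"name": "Acme Corp"}  # master updated after the push
print(local["C1"]["name"])         # Acme (stale replica)
print(virtual.get("C1")["name"])   # Acme Corp (always current)
```

The trade-off this suggests: replicas let consumers read locally but can drift from the master, while the virtual layer keeps a single current version at the cost of routing every access through the repository, which is where data access performance requirements come into play.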

In the past few posts we examined opportunities for designing a service layer for master data. The last post looked at the interfacing requirements between the master data layer and the applications above it in the technology stack. Exposing master data through a services approach opens new vistas

In a tiered approach to facilitating master data integration, the most critical step is paving a path for applications to seamlessly take advantage of the capabilities master data management (MDM) is intended to provide. Those capabilities include unique identification, access to the unified views of entities, the creation of new entity
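To make the capabilities named here a bit more concrete (unique identification, unified views of entities, and creating a new entity when no match exists), here is a rough sketch. It is only an illustration under loud assumptions: the `MasterIndex` class is hypothetical, matching is a deliberately naive exact match on a normalized name, and the survivorship rule (first non-empty value wins) is an arbitrary choice; real MDM matching and survivorship are far more sophisticated.

```python
from typing import Dict, List, Tuple

SourceKey = Tuple[str, str]  # (source system, local record key)


class MasterIndex:
    """Hypothetical MDM index providing unique IDs and unified views.

    Matching is a naive exact match on the normalized 'name' attribute.
    """

    def __init__(self) -> None:
        self._by_match_key: Dict[str, int] = {}
        self._links: Dict[SourceKey, int] = {}
        self._records: Dict[int, List[Dict[str, str]]] = {}

    def identify(self, source: SourceKey, attrs: Dict[str, str]) -> int:
        """Return the master ID for a source record, creating one if no match."""
        if source in self._links:
            return self._links[source]
        match_key = attrs["name"].strip().casefold()
        master_id = self._by_match_key.get(match_key)
        if master_id is None:
            master_id = len(self._records) + 1  # create a new master entity
            self._by_match_key[match_key] = master_id
            self._records[master_id] = []
        self._links[source] = master_id
        self._records[master_id].append(dict(attrs))
        return master_id

    def unified_view(self, master_id: int) -> Dict[str, str]:
        """Merge linked records; the first non-empty value per attribute wins."""
        view: Dict[str, str] = {}
        for attrs in self._records[master_id]:
            for key, value in attrs.items():
                if value and key not in view:
                    view[key] = value
        return view


idx = MasterIndex()
a = idx.identify(("CRM", "17"), {"name": "Acme Corp", "phone": ""})
b = idx.identify(("Billing", "A-9"), {"name": "acme corp ", "phone": "555-0100"})
print(a == b)               # True
print(idx.unified_view(a))  # {'name': 'Acme Corp', 'phone': '555-0100'}
```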

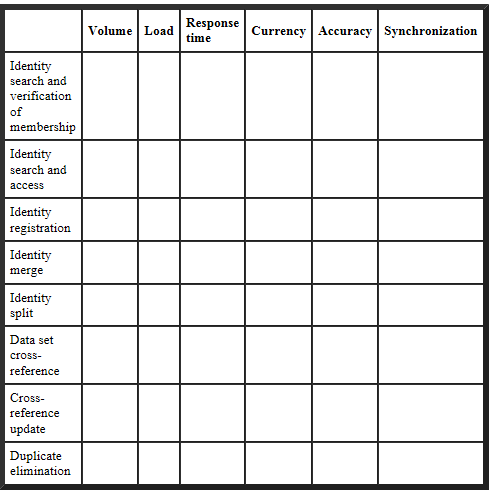

In my last series of posts, we looked at one of the most common issues with master data management (MDM) implementation, namely integrating existing applications with a newly populated master data repository. We examined some common use cases for master data and speculated about the key performance dimensions relevant to those

Over the past few posts we've looked at developing an integration strategy to enable the rapid alignment of candidate business processes with the services provided by a master data environment. As a preparatory step, it is valuable at the very least to understand the implementation requirements to meet the

Let’s say that you have successfully articulated the value proposition of incorporating a master data capability into a customer’s business application. Now what? If you are not prepared to guide that customer through an integration process immediately, chances are that a home-brew solution will be adopted as a “temporary”