“Data is a precious thing and will last longer than the systems themselves.” –Tim Berners-Lee, inventor of the World Wide Web

Last year, the International Institute for Analytics (IIA) and SAS published the research report, “Big Data in Big Companies,” written by Tom Davenport and Jill Dyché. For this report, they conducted extensive interviews with 20 companies to learn how big data is changing their analytics strategies, as well as the impact it’s having on their business.

In the report, one concern on the mind of executives was the return on investment (ROI) for big data. "Sometimes our ability to be more nimble as a business isn't easily quantifiable in hard dollars," says SAS Best Practices Vice President, Jill Dyché. "Sure, you can quantify the cost savings and revenue uplift as a result of faster speed-to-market, but enhancing a company's ability to innovate is a little squishier to put into numbers. In our survey, though, it was, in fact, the ability to innovate that compelled many of the executives we interviewed to invest in big data."

For years, we’ve been calculating the ROI for our traditional data warehouse and analytical systems by focusing on the costs of hardware/software acquisition, licensing and maintenance. How does big data impact this model?

A Big Data Best Practice for Investment

While hardware, software, licensing and maintenance costs continue to be important considerations, we need to go one step further for big data: Calculate ROI by focusing on new development processes, organizations and skills.

Here’s why: Big data is disruptive.

We can expect big data to disrupt our current business development processes, how we function as an organization, and our current pool of skills. And we cannot ignore the intangible costs – or the cost savings – of this disruption. Seek to understand these changes and capitalize on the real benefits of the big data journey.

If this still sounds a bit “squishy,” then you may be interested in the TCOD framework.

The TCOD Framework Helps with Big Data ROI

What is the TCOD framework? TCOD stands for "total cost of data," and the purpose of this framework is to help organizations estimate the total cost of a big data solution for an analytic data problem. It considers two major platforms for implementing big data analytics – the enterprise data warehouse (EDW) and Hadoop – and helps you figure out which platform to use for each data problem.

One of big data’s dirty little secrets is that Hadoop is not the answer to all your big data requirements. The truth is that your existing EDW/analytics infrastructure may be the most cost-effective solution for a given problem. The beauty of the TCOD framework is that it helps you figure it out – and put dollar figures to it.
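To make the idea of "putting dollar figures to it" a little more concrete, here is a minimal sketch of a side-by-side cost comparison. It is not the WinterCorp spreadsheet: the three cost buckets, the `PlatformCost` class, the `cheaper_platform` helper and every number below are hypothetical placeholders chosen only to illustrate the kind of comparison the framework supports.

```python
from dataclasses import dataclass


@dataclass
class PlatformCost:
    """Hypothetical cost buckets for one platform and one data problem."""
    name: str
    system: float       # hardware, software, licensing, maintenance
    development: float  # building and changing analytic applications
    staffing: float     # administration, training, new skills

    def total(self) -> float:
        # Total cost is simply the sum of the assumed buckets.
        return self.system + self.development + self.staffing


def cheaper_platform(a: PlatformCost, b: PlatformCost) -> PlatformCost:
    """Return the platform with the lower total cost (ties go to the first)."""
    return a if a.total() <= b.total() else b


# Placeholder figures (say, in thousands of dollars), not taken from any report.
edw = PlatformCost("EDW", system=500.0, development=200.0, staffing=100.0)
hadoop = PlatformCost("Hadoop", system=150.0, development=600.0, staffing=250.0)

best = cheaper_platform(edw, hadoop)
print(f"{edw.name}: {edw.total():.0f}, {hadoop.name}: {hadoop.total():.0f}")
print(f"Lower total cost for this problem: {best.name}")
```

With these made-up inputs the platform with cheaper systems ends up costing more once development and staffing are counted. Different inputs would flip the result, which is why the comparison has to be run for each data problem.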

The TCOD framework was developed by Richard Winter and his team at WinterCorp, a consultancy focused on large-scale data management challenges. You can learn more about the framework, and download the accompanying report and spreadsheet, in “We need Hadoop to keep our data costs down.”

Key Takeaways for Marketers

- You’ve been here before: “Show me the ROI for [fill-in-the-blank].” You know the drill. Take advantage of these creative skills when talking about big data ROI.

- If you can figure out how to quantify the “ability to innovate” and market it, you could quit your day job. And become a consultant.

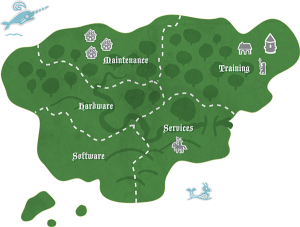

- Make friends with the Knight in the Services territory of the island. He’s a busy guy, but he has a few time-saving and cost-saving secrets to share.

- Don’t underestimate the hard and soft costs of open source software, a primary component in many big data solutions, just because it’s “free.”

This is the 9th post in a 10-post series, “A marketer’s journey through the Big Data Archipelago.” This series explores 10 key best practices for big data and why marketers should care. Our final stop is the Strategy Isle, where we’ll talk about using big data to achieve community and global goals.

3 Comments

Learning to work with Big Data means retraining your team. New systems always come with a learning curve and plenty of initiatives fail because the users don't see the value in really using them. It's hard to prove ROI and justify the investment if no one uses it!

So true, Pat! When Cindi Howson (of BI Scorecard) started doing surveys on BI adoption rates over a decade ago, the adoption rate started out around 10% and inched its way up to the mid-20s - and the dial really hasn't moved much for several years. It will be interesting to see whether the addition of "big data" increases (or decreases) tool adoption, or whether it will remain flat.