If you have taken a SAS Deployment exam in the past 9 to 12 months, you may have wondered why there are pre-test test questions. While it might seem like I am providing warmer-upper questions out of the goodness of my heart, these pre-test tests are actually called candidate surveys. Their purpose? To draw a correlation between a candidate’s perceived level of preparedness and how well the candidate actually does on the exam.

Pre-test surveys are a fairly common practice, and of course here at SAS we jump at the chance to draw statistical correlations. So what does the data tell us? Let’s look at two examples*.

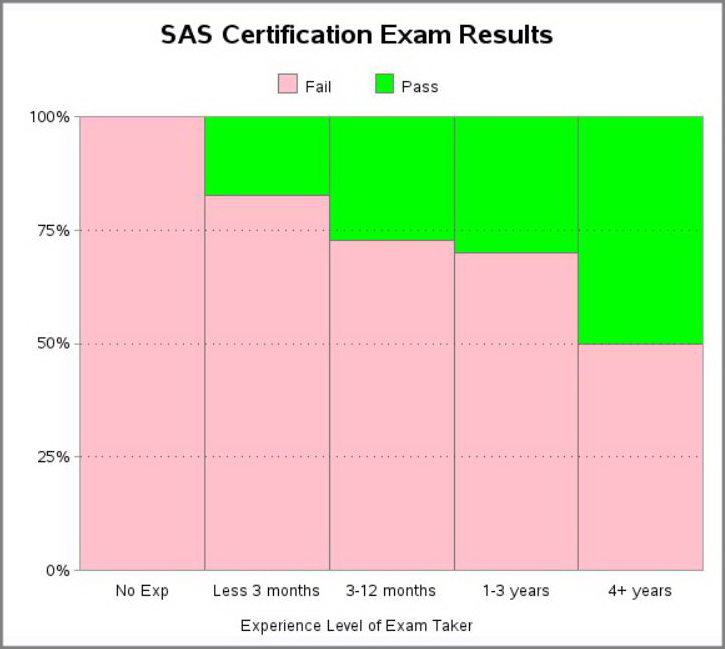

Survey Question #1: How much experience do you have working with the SAS software covered on this exam?

The Experience Level chart indicates that the more experience a candidate has, the greater the likelihood of passing this exam.

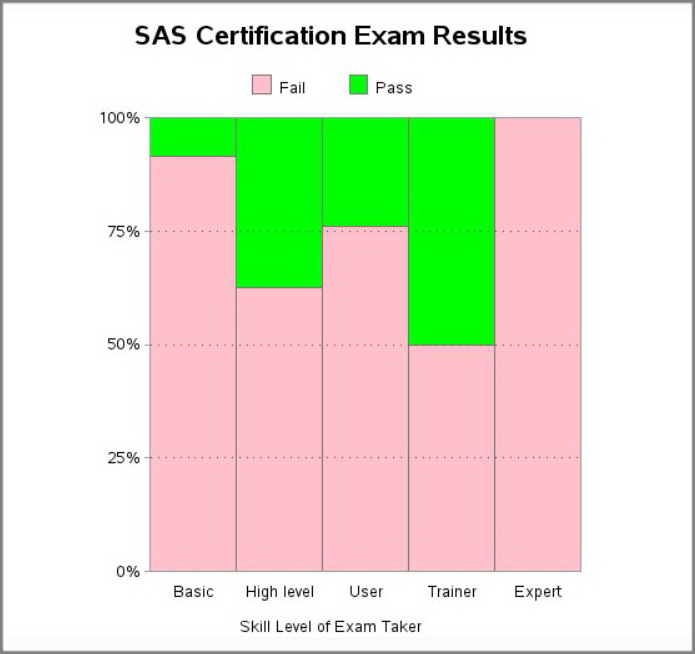

Survey Question #2: On a scale from 1 to 5**, how would you rate your current level of expertise with this software?

(**Scale details are provided to candidates.)

The Skill Level chart shows some mixed results. Typically, the higher the skill level, the greater the likelihood of a candidate passing the exam. However, when the survey options are somewhat subjective in nature (for example, “high level” might mean something different to one candidate vs. another), confounding results can occur, such as someone who reports an Expert skill level failing the exam.

When I review pre-test survey data, I keep two things in mind. First, the data is subjective in nature and based on a candidate’s perception of their experience (having software installed on their computer for 6 months is not the same as actually USING the software for 6 months). Second, there can be data outliers (a candidate who rates themselves an “Expert” with “No Experience”). However, enough data normalized across a pool of candidates can provide a strong indication of the candidate type who is more likely to pass an exam.
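For readers who like to see the idea in code, here is a minimal Python sketch of that kind of review. Everything in it is an assumption made for illustration: the records are invented (not SAS data), and the outlier rule and the names `is_outlier` and `pass_rate_by_experience` are hypothetical, not part of any SAS process.

```python
from collections import defaultdict

# Hypothetical survey records, invented for illustration only:
# (years of experience reported, self-rated skill on a 1-5 scale, passed the exam?)
records = [
    (0, 5, False),  # "Expert" with no experience: the kind of outlier described above
    (0, 1, False),
    (1, 2, False),
    (1, 3, True),
    (4, 4, True),
    (4, 5, True),
    (4, 3, False),
]

def is_outlier(years, skill):
    """Assumed rule: a self-rating of 4 or 5 from someone reporting no experience."""
    return years == 0 and skill >= 4

def pass_rate_by_experience(rows):
    """Return {years: fraction passed}, skipping records flagged as outliers."""
    totals = defaultdict(lambda: [0, 0])  # years -> [passed, taken]
    for years, skill, passed in rows:
        if is_outlier(years, skill):
            continue
        totals[years][1] += 1
        if passed:
            totals[years][0] += 1
    return {years: p / n for years, (p, n) in sorted(totals.items())}

rates = pass_rate_by_experience(records)
for years, rate in rates.items():
    print(f"{years} yr experience: {rate:.0%} passed")
```

Comparing rates per group, rather than raw counts, is what lets groups of different sizes be read side by side. With only a handful of records per group, as here, the numbers mean very little; the pattern only becomes informative across a large pool of candidates.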

Why is correlating experience and skill level with exam results important? Because exam development best practices include properly defining the job role and profile of a minimally qualified candidate. If an exam prerequisite recommends at least 1 year of product experience, then the exam should be designed to meet that standard.

Pre-test surveys can provide insight into a candidate’s level of preparedness and help validate that the exam is indeed hitting the mark for its target audience. The surveys can also provide an important feedback loop into training and preparation methodologies. So the next time you are faced with a test before the test, just know your answers are very important. It’s one part of the test you’ll be sure to pass!

*Survey questions are actual. Data provided for illustrative purposes only. Chart formats provided by Robert Allison.

________________________

Beyond the Credential, a blog from The SAS Learning Post, goes beyond the impersonal and sometimes sterile world of technical exams and shines the light on the more human side of testing.

2 Comments

Hi Susan,

Thanks for providing such interesting graphics. That seems like a seriously high failure rate. That 75% of people with 3 years or less experience fail the exam, and 50% with 4 or more years fail is pretty surprising. Do you know how this compares to other certification exams? And with such a high failure rate, how is the "cut score" determined?

Thanks, Michelle, for your comment. The graph showing the high failure rate is for illustrative purposes in this article. However, there are some areas where candidates should have extensive experience in order to pass a high-stakes certification. It just depends on how the "minimally qualified candidate" is defined when the job role is created during the Job Task Analysis. There are also a variety of ways to establish a cut score, including publishing the exam in beta format and collecting data, the Angoff scoring method, or even bookmarking. The good news about using a robust exam development process like the one SAS uses is that there are plenty of checks and balances to ensure passing scores are set correctly and that pass rates meet target candidate requirements.