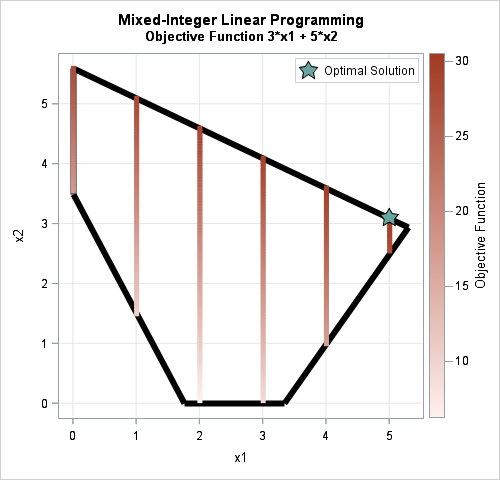

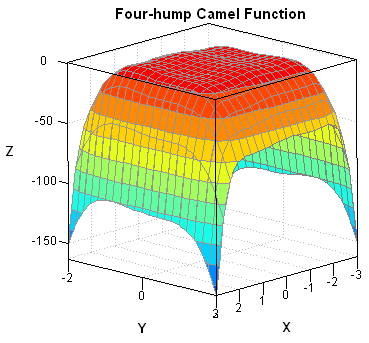

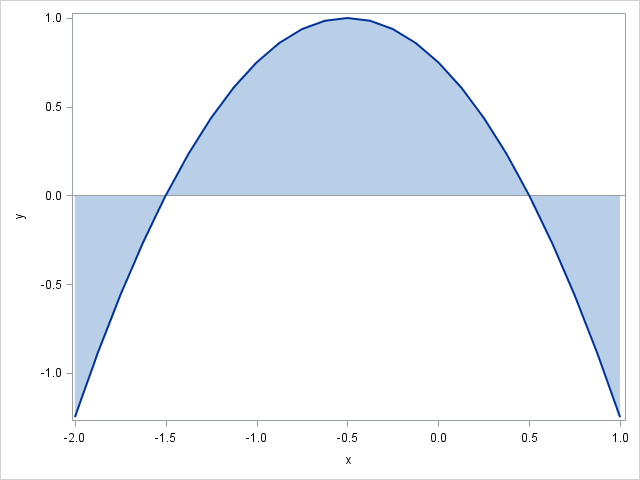

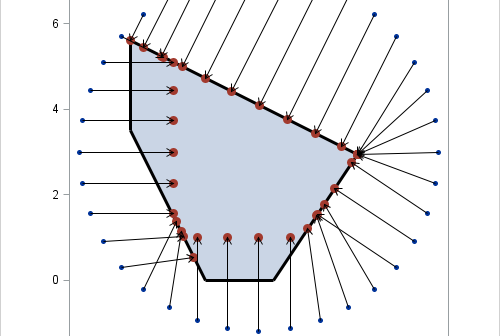

Most numerical optimization routines require that the user provides an initial guess for the solution. I have previously described a method for choosing an initial guess for an optimization, which works well for low-dimensional optimization problems. Recently a SAS programmer asked how to find an initial guess when there are