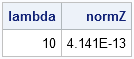

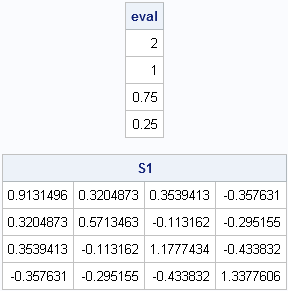

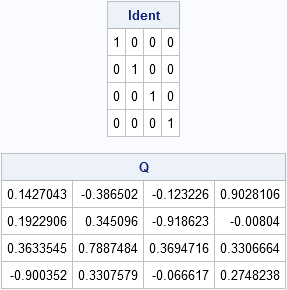

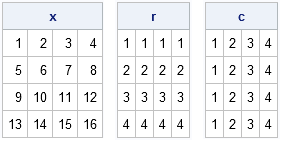

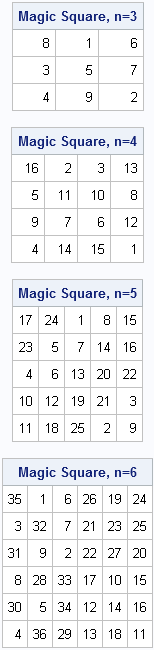

Magic squares are cool. Algorithms that create magic squares are even cooler. You probably remember magic squares from your childhood: they are n x n matrices that contain the numbers 1,2,...,n2 and for which the row sum, column sum, and the sum of both diagonals are the same value. There are many