Over the past few years, and especially since I posted my article on eight tips to make your simulation run faster, I have received many emails (often with attached SAS programs) from SAS users who ask for advice about how to speed up their simulation code. For this reason, I am writing a book on Simulating Data with SAS that describes dozens of tips and techniques for writing efficient Monte Carlo simulations.

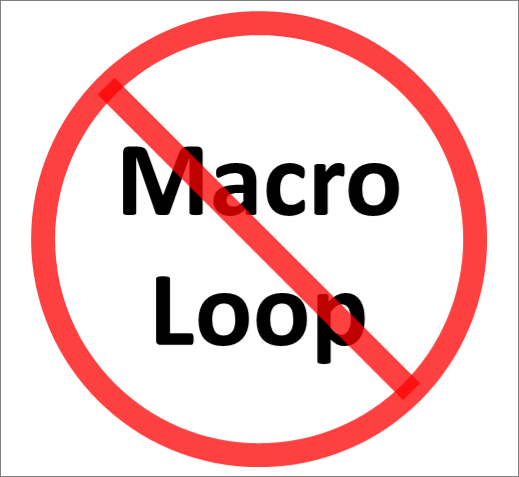

Of all the tips in the book, the simplest is also the most important: Never use a macro loop to create a simulation or to perform a bootstrap computation. I estimate that this is the root cause of inefficiency in 60% of the SAS simulations that I see.

The basics of statistical simulation

A statistical simulation often consists of the following steps:

1. Simulate a random sample of size N from a statistical model.

2. Compute a statistic for the sample.

3. Repeat steps 1 and 2 many times and accumulate the results.

4. Examine the union of the statistics, which approximates the sampling distribution of the statistic and tells you how the statistic varies due to sampling variation.

For example, a simple simulation might investigate the distribution of the sample mean of a sample of size 10 that is drawn randomly from the uniform distribution on [0,1].
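Because the population is known in this example, the simulation has an exact answer to check against. If $X_1, \dots, X_n$ are independent $U(0,1)$ variables, then $E[X_i] = 1/2$ and $\operatorname{Var}(X_i) = 1/12$, so the sample mean has the moments below. With $n = 10$, a histogram of the simulated sample means should therefore be centered near 0.5 with a standard deviation near 0.091 (and, by the central limit theorem, look roughly normal).

$$
\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad
E[\bar{X}] = \frac{1}{2}, \qquad
\operatorname{Var}(\bar{X}) = \frac{1}{12n}
$$

$$
n = 10:\quad \operatorname{Var}(\bar{X}) = \frac{1}{120} \approx 0.00833, \qquad
\operatorname{SD}(\bar{X}) = \sqrt{1/120} \approx 0.0913
$$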

You can program this simulation in two ways: the slow way, which uses macro loops, or the fast way, which uses the SAS BY statement.

Macro loops lead to slow simulations

It is understandable that some programmers look at the simulation algorithm and want to write a macro loop for the "repeat many times" portion of the algorithm. A first attempt at a simulation in SAS might look like this example:

```sas
/****** DO NOT MIMIC THIS CODE: INEFFICIENT! ******/
%macro Simulate(N, NumSamples);
options nonotes;                   /* prevents the SAS log from overflowing */
proc datasets nolist;
   delete OutStats;                /* delete this data set if it exists */
run;

%do i = 1 %to &NumSamples;         /* repeat many times (the dreaded macro loop!) */
   data Temp;                      /* 1. create a sample of size &N */
      /* some people put 'CALL STREAMINIT(&i)'; here for reproducibility */
      do i = 1 to &N;
         x = rand("Uniform");
         output;
      end;
   run;

   proc means data=Temp noprint;   /* 2. compute a statistic */
      var x;
      output out=Out mean=SampleMean;
   run;

   /* use PROC APPEND to accumulate statistics */
   proc append base=OutStats data=Out;
   run;
%end;
options notes;
%mend;

/* call macro to simulate data and compute statistics */
%Simulate(10, 1000)

/* 4. analyze the sampling distribution of the statistic */
proc univariate data=OutStats;
   histogram SampleMean;
run;
```

For each iteration of the macro loop, the program creates a data set (Temp) with one sample that contains 10 observations. The MEANS procedure runs and creates one statistic, the sample mean. Then the APPEND procedure adds the newly computed sample mean to a data set that contains the means of the previous samples. This process repeats 1,000 times. When the macro finishes, PROC UNIVARIATE analyzes the distribution of the sample means.

I'm sure you'll agree that this is just about the World's Simplest Simulation. The data simulation step is trivial, and computing a sample mean is also trivial. So how long does this World's Simplest Simulation take to complete?

About 60 seconds! This code is terribly inefficient!

Would you like to perform the same computation more than 100 times faster? Read on.

Improve the performance of your simulation by using BY processing

The key to improving the simulation is to restructure the simulation algorithm as follows:

1. Simulate many random samples of size N from a statistical model.

2. Compute a statistic for each sample.

3. Examine the union of the statistics, which approximates the sampling distribution of the statistic and tells you how the statistic varies due to sampling variation.

To implement this restructured (but equivalent) algorithm, insert an extra DO loop inside the DATA step and use a BY statement in the procedure that computes the statistics, as shown in the following example:

```sas
/* efficient simulation that calls a SAS procedure */
%let N = 10;
%let NumSamples = 1000;
data Uniform(keep=SampleID x);
   do SampleID = 1 to &NumSamples;   /* 1. create many samples */
      do i = 1 to &N;                /* sample of size &N */
         x = rand("Uniform");
         output;
      end;
   end;
run;

proc means data=Uniform noprint;
   by SampleID;                      /* 2. compute many statistics */
   var x;
   output out=OutStats mean=SampleMean;
run;

/* 3. analyze the sampling distribution of the statistic */
proc univariate data=OutStats;
   histogram SampleMean;
run;
```

The first step is to simulate the data. You already know how to write statements that simulate one random sample, so just add a DO loop around those statements. The second step is to compute the statistics for each sample. You already know how to compute one statistic, so just add a BY statement to the procedure syntax. That's it. It's a simple technique, but it makes a huge difference in performance. (Remember to use the NOPRINT option so that the procedure does not display output for each of the 1,000 BY groups!)

How long does the BY-group analysis require? It's essentially instantaneous. You can use OPTIONS FULLSTIMER to time the operations. On my computer, it takes about 0.07 seconds to simulate the data and the same amount of time to analyze it.
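A minimal sketch of one way to time the BY-group version yourself. OPTIONS FULLSTIMER writes real time, CPU time, and memory statistics to the log after each step; the two `%SYSFUNC(DATETIME(), 20.3)` calls add a single wall-clock total for both steps. The macro variable name `t0` is an arbitrary choice, and the times you see will depend on your hardware.

```sas
options fullstimer;                       /* detailed resource statistics in the log after each step */
%let t0 = %sysfunc(datetime(), 20.3);     /* wall-clock start time, in seconds */

%let N = 10;
%let NumSamples = 1000;
data Uniform(keep=SampleID x);
   do SampleID = 1 to &NumSamples;        /* simulate all samples in one DATA step */
      do i = 1 to &N;
         x = rand("Uniform");
         output;
      end;
   end;
run;

proc means data=Uniform noprint;
   by SampleID;                           /* one statistic per sample */
   var x;
   output out=OutStats mean=SampleMean;
run;

/* total elapsed time for the two steps above */
%put NOTE: Elapsed time (seconds) = %sysevalf(%sysfunc(datetime(), 20.3) - &t0);
options nofullstimer;                     /* restore the default */
```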

Why BY-group processing is fast and macro processing is slow

The first published description of this technique that I know of is the article "A Remark on Efficient Simulations in SAS" by Ilya Novikov (2003, J. RSS). Novikov mentions that the macro approach minimizes disk space, but that the BY-group technique minimizes time. (He also thanks Phil Gibbs of SAS Technical Support, who has been teaching SAS customers this technique since the mid-1990s.) For the application in his paper, Novikov reports that the macro approach required five minutes on his Pentium III computer, whereas the BY-group technique completed in five seconds.

You can use a variation of this technique to do a bootstrap computation in SAS. See David Cassell's 2007 SAS Global Forum paper, "Don't Be Loopy: Re-Sampling and Simulation the SAS Way" for a general discussion of implementing bootstrap methods in SAS. For more tips and programs, see "The essential guide to bootstrapping in SAS."
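As a sketch of that variation (one possible structure, not the code from either reference), PROC SURVEYSELECT can generate all bootstrap resamples in a single call. METHOD=URS samples with replacement, SAMPRATE=1 makes each resample the same size as the original data, OUTHITS writes one row per selection, and REPS= sets the number of resamples. SURVEYSELECT labels each resample with a variable named `Replicate`, which then plays the role of `SampleID` in the BY statement. The `Orig` data set, the seeds, and `NumBoot` are hypothetical.

```sas
/* hypothetical original sample: 50 observations from N(10, 2) */
data Orig(keep=x);
   call streaminit(123);
   do i = 1 to 50;
      x = rand("Normal", 10, 2);
      output;
   end;
run;

%let NumBoot = 1000;                   /* number of bootstrap resamples */

/* 1. generate all resamples in one procedure call */
proc surveyselect data=Orig noprint seed=1 out=BootSamp
     method=urs                        /* unrestricted random sampling = with replacement */
     samprate=1                        /* each resample is the same size as Orig */
     outhits                           /* one output row per selection */
     reps=&NumBoot;
run;

/* 2. compute the statistic for every resample with a BY statement */
proc means data=BootSamp noprint;
   by Replicate;
   var x;
   output out=BootStats mean=BootMean;
run;

/* 3. percentile bootstrap confidence interval for the mean */
proc univariate data=BootStats noprint;
   var BootMean;
   output out=BootCI pctlpre=CI_ pctlpts=2.5 97.5;
run;

proc print data=BootCI noobs;
run;
```

The structure mirrors the BY-group simulation: one step creates many samples, and one procedure call analyzes all of them.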

So why is one approach so slow and the other so fast? Programmers who work with matrix/vector languages such as SAS/IML software are familiar with the idea of vectorizing a computation. The idea is to perform a few matrix computations on matrices and vectors that hold a lot of data. This is much more efficient than looping over data and performing many scalar computations.
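For comparison, here is a minimal vectorized sketch of the same uniform simulation in SAS/IML (it assumes SAS/IML is licensed). Instead of looping over samples, it fills an N x NumSamples matrix with one call to RANDGEN and computes all 1,000 sample means with one call to the MEAN function, which returns the mean of each column. The seed 12345 is arbitrary.

```sas
proc iml;
N = 10;                            /* sample size */
NumSamples = 1000;                 /* number of samples */
call randseed(12345);
x = j(N, NumSamples);              /* allocate a matrix: each column holds one sample */
call randgen(x, "Uniform");        /* fill the entire matrix in a single call */
SampleMean = T( mean(x) );         /* MEAN returns a row vector of column means; transpose it */

create OutStats var {"SampleMean"};   /* write the 1,000 means to a data set */
append;
close OutStats;
quit;
```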

Although SAS is not a vector language, the same ideas apply. The macro approach suffers from a high "overhead-to-work" ratio. The DATA step and the MEANS procedure are called 1,000 times, but they generate or analyze only 10 observations in each call. This is inefficient because every time that SAS encounters a procedure call, it must parse the SAS code, open the data set, load data into memory, do the computation, close the data set, and exit the procedure. When a procedure computes complicated statistics on a large data set, these "overhead" costs are small relative to the computation performed by the procedure. However, for this example, the overhead costs are large relative to the computational work.

The BY-group approach has a low overhead-to-work ratio. The DATA step and PROC MEANS are each called once and they do a lot of work during each call.

So if you want to write an efficient simulation or bootstrap in SAS, use BY-group processing. It is often hundreds of times faster than writing a macro loop.

34 Comments

I ran into a problem where the data set was too big to process efficiently with BY groups, and using a macro to process smaller portions of the data set ended up being faster.

Basically, you may run into computational limits with BY groups based on the dataset size, RAM and HD space.

Do you remember what procedure you were using? Most (or all?) procedures compute with each BY group in sequence, so that the whole data set is not held in memory. I'd be interested in hearing more if you recall any details.

I tested this advice out and had similar results to Fareeza:

```sas
%let n=1000;      /* number of iterations */
%let m=1000000;   /* size of one iteration */

%macro calculate_means();
   options nonotes;
   proc datasets nolist;
      delete outstats;
   run;
   %do i=1 %to &n;
      data temp;
         do i=1 to &m;
            x=rand("uniform");
            output;
         end;
      run;
      proc means data=temp noprint;
         var x;
         output out=out mean=samplemean;
      run;
      proc append base=outstats data=out;
      run;
   %end;
   options notes;
%mend;

%calculate_means;

data test;
   do sampleid=1 to &n;
      do i=1 to &m;
         x=rand("uniform");
         output;
      end;
   end;
run;

proc means data=test noprint;
   by sampleid;
   var x;
   output out=outstats mean=samplemean;
run;
```

For small values of m, the 'macro' option was much faster: at m=1000 n=1000 the macro method took 16 seconds compared to 0.45 seconds using BY.

But when I increased m to 100000, the difference became negligible: 33 seconds for macro vs 34 for BY.

At m=1,000,000 the macro method was substantially faster: 187 seconds vs 473 seconds for BY. Note that the BY approach would be creating a file on the order of 3 gigabytes here, which may cause its own overhead.

Thanks for the feedback. I think you meant to say "For small values of m, the 'macro' option was much SLOWER."

My main point is that every time you call a SAS procedure you incur overhead costs. You want to make sure that the work that the procedure does is substantial compared with those costs. For most simulation studies, the sample size (my &N, your &m) is small, because for large samples you can use asymptotic results to get standard errors and confidence intervals. I'd guess that 99% of simulations that I've seen are done for sample sizes less than 1,000.

As the sample sizes get large, other factors come into play. You report that m=100,000 is about the size at which the two methods become comparable for your hardware and that the BY-group approach is slower for huge samples. You have essentially rediscovered the reason behind the "hybrid method" proposed by Novikov and Oberman (2007), which combines the macro and BY-group approaches when doing massive simulations. In their paper, they note that both approaches are "non-optimal with respect to computing time for large simulations." [emphasis added] See their paper for discussion, examples, and a SAS macro that combines the two methods.
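The following is not Novikov and Oberman's macro; it is a minimal sketch of the general hybrid idea, assuming that NumSamples is divisible by NumBlocks. A macro loop runs over a small number of blocks, and within each block the DATA step and PROC MEANS use BY-group processing. Only NumSamples/NumBlocks samples sit on disk at one time, while the number of procedure calls stays small. The macro name `HybridSim` and its parameters are hypothetical, and seeding each block with its block number is an assumption made for reproducibility.

```sas
/* hypothetical macro: sketch of a hybrid macro-loop / BY-group simulation */
%macro HybridSim(N=, NumSamples=, NumBlocks=);
   %local b BlockSize;
   %let BlockSize = %eval(&NumSamples / &NumBlocks);  /* assumes exact division */

   proc datasets nolist;
      delete OutStats;                     /* start with an empty accumulator */
   quit;

   %do b = 1 %to &NumBlocks;               /* few iterations: one per block */
      data Block(keep=SampleID x);
         call streaminit(&b);              /* one seed per block (an assumption) */
         do j = 1 to &BlockSize;
            SampleID = (&b - 1) * &BlockSize + j;   /* unique ID across all blocks */
            do i = 1 to &N;
               x = rand("Uniform");
               output;
            end;
         end;
      run;

      proc means data=Block noprint;
         by SampleID;                      /* BY-group processing within the block */
         var x;
         output out=BlockStats mean=SampleMean;
      run;

      proc append base=OutStats data=BlockStats;
      run;
   %end;
%mend HybridSim;

%HybridSim(N=10, NumSamples=1000, NumBlocks=10)
```

Choose NumBlocks so that each block is large enough to amortize the procedure overhead but small enough to fit comfortably in WORK.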

Yes, that should have been "macro is slower for small values of m".

FWIW, in my job a data set with 30k-100k observations is unremarkable, and some run well into the millions, so that may have coloured my views on what counts as a "small" data set. The fact that we deal with data sets of that size is a big part of why we're using SAS in the first place...

"Performance," broadly speaking, is a tradeoff. The characteristics that most impact it are CPU speed, memory size, disk space, data transmission speed, and user effort.

In simulations, with relatively simple data structures, the BY approach generally works better because disk space is often a non-issue, even with large numbers of replications. Bootstrapping, on the other hand, can involve both wide and long data, as well as more replicates; then disk space and data transmission speed become parameters that need to be considered. Most SAS procedures are designed to minimize memory usage (they do not put all of the data in memory at once), but a few do try to hold all of the data in memory; the individual procedure documentation helps here.

I included "user effort" in my tradeoff list because simple code can make a real difference in maintenance and understanding.

Doc Muhlbaier

Duke

Could you please show an example where many data sets are created from a standard multivariate normal distribution? Examples of storing the generated data would be helpful, also.

I can't tell you how much of a help this post was! I was doing it the wrong way by using macros. It took over 24 hours for my simulation to complete. I ended up cutting my number of samples from 500 to 100 just to get it to run in about an hour. When I implemented your method, the whole thing ran in 33 seconds! Thank you so much for this time-saving information!

Great post @Rick. The simulation you describe is embarrassingly parallel. As such, is there a way in SAS 9.3 to distribute your multiple BY statement tasks across different cores of your PC so that the code could run even faster? Something like parallel() or foreach() in R? A piece of example code would be greatly appreciated as I can't seem to find any such reference on the web.

This simulation runs in a fraction of a second, so you don't need to parallelize it. In fact, if I run the hundreds of programs in my 300-page book Simulating Data with SAS, the cumulative time is only a few minutes, with the longest-running program requiring only about 30 seconds. By using the techniques in my book, you can write efficient programs that run quickly.

That said, many SAS procedures have been multithreaded since SAS 9.2, and will automatically use multiple cores on your PC. For simulations in which you are varying one or more parameters (e.g., power computations), there are various schemes for distributing computations. Do a web search that includes the terms "sas grid doninger" and you'll get dozens of hits.

How can I simulate sample data in the interval [0,1]? I mean simulated data that include the value zero, the value one, and values between 0 and 1, using SAS.

I would advise you not to worry about whether 0 and 1 are possible values that are returned from a random number generator. The uniform distribution on the closed unit interval (U[0,1]) and on the open interval (U(0,1)) have the same cumulative distribution. Consequently, they are identical distributions. Therefore use the RAND("uniform") function in SAS to sample from the uniform distribution as described in this article on generating uniform random numbers.

Dear All

I need simulation code for location-based routing protocols for underwater sensor networks.

Please help me.

I have multiple data sets named

dsnAC16 dsnAC17 dsnAC18 dsnAC19 dsnAC110.........dsnAC125

dsnAC26 dsnAC27 dsnAC28 dsnAC29 dsnAC210.........dsnAC225

.

.

.

dsnAC56 dsnAC57 dsnAC58 dsnAC59 dsnAC510.........dsnAC525

I want to combine all of the above data sets. I am using the following code but getting some errors. Please guide me.

I want to do this task without a macro loop.

```sas
%let i=5; %let pat=20;
data combineAC;
set
do %let k = 1 to &i;
do %let h= %eval(1+&i) to %eval(&pats+&i);
dsnAC&k&h
end;
end;
run;
```

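To get them all without a macro loop, use the colon wildcard, which matches every data set whose name begins with dsnAC (so make sure no other data sets share that prefix):

data combineAC; set dsnAC:; run;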

Hi Rick,

Thank you for these tips! I had started my simulations using a macro, and have updated my code as much as possible (using the "by" statement) and it runs much faster! I have a question about figuring out where "warnings" occur, though ... my log is giving warnings that look like in about 15 or so of the 500 simulations I'm running, the logistic model is not converging (this is expected). But, it doesn't say which simulation numbers these warnings are occurring at (and the output dataset has values for all simulations, so it doesn't help to look there). Is there any way I could have the simulation number written to the log for those warnings when I've used the "by" command?

Thanks!

Kat

The log contains NOTES that give the BY group information, but that's not what you want to use. Probably you are using the OUTEST= option on the PROC LOGISTIC step to get the parameter estimates. The OUTEST= data set contains a variable called _STATUS_ which has values like "0 Converged" or "1 Warning." Thus if you only want to analyze the parameter estimates for which the model converged, you can say

WHERE _STATUS_ = "0 Converged";

or if you want to see the runs that failed

WHERE _STATUS_ ^= "0 Converged";

For more details, see my blog post about logistic simulation.
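A minimal sketch of that workflow, assuming a hypothetical logistic model logit(p) = -1 + 2x, 500 samples of size 50, and an arbitrary seed. Because `SampleID` is a BY variable, it is written to every row of the OUTEST= data set, so filtering on `_STATUS_` identifies exactly which simulated samples did not converge.

```sas
%let N = 50;               /* hypothetical sample size */
%let NumSamples = 500;     /* number of simulated samples */
data LogiSim(keep=SampleID x y);
   call streaminit(4321);
   do SampleID = 1 to &NumSamples;
      do i = 1 to &N;
         x = rand("Normal");
         eta = -1 + 2*x;              /* hypothetical linear predictor */
         p = 1 / (1 + exp(-eta));     /* inverse logit */
         y = rand("Bernoulli", p);
         output;
      end;
   end;
run;

/* one model per sample; OUTEST= still works with NOPRINT */
proc logistic data=LogiSim noprint outest=PE;
   by SampleID;
   model y(event='1') = x;
run;

/* which simulated samples did not converge? */
proc print data=PE noobs;
   where _STATUS_ ^= "0 Converged";
   var SampleID _STATUS_;
run;

/* keep only the estimates from samples that converged */
data Converged;
   set PE;
   where _STATUS_ = "0 Converged";
run;
```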

Great, thanks so much for the quick reply!

Thank you for the posting. This is very helpful. My question: when I run 1,000 simulations for a negative binomial regression, one simulation has an extreme value and runs into ERROR: Floating Point Overflow. The whole procedure then terminates, and there is no output. Is there any way to skip this particular simulation and still run all of the rest?

Yes. Identify the statement that is overflowing and trap the condition before it overflows. For example, if the overflow is in calling the EXP function, use the CONSTANT function to make sure that the argument never exceeds LOGBIG. For details and an example, see the article "Constants in SAS".
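A minimal sketch of the trap, assuming the overflow comes from EXP applied to a linear predictor in the DATA step that simulates the data (the data set name, variable names, and the values of `eta` are hypothetical). `CONSTANT("LOGBIG")` returns the natural logarithm of the largest double-precision number, so EXP is evaluated only when its argument is below that bound; otherwise `mu` is set to missing and the observation is flagged.

```sas
data Check;
   LogBig = constant("LOGBIG");        /* log of the largest double-precision number */
   do eta = 1, 100, 1000;              /* hypothetical linear-predictor values */
      if eta < LogBig then mu = exp(eta);
      else mu = .;                     /* missing instead of a floating-point overflow */
      Overflow = missing(mu);          /* 1 if EXP would have overflowed */
      output;
   end;
run;

proc print data=Check noobs;
run;
```

In a simulation, you could carry such a flag for each SampleID and use a WHERE clause to exclude flagged samples before calling the analysis procedure.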

Hi Rick,

I have been using the BY-group processing for running simulations, which has worked well and saved me lots of time up to this point! However, I need to increase the "sample size" per simulation as well as the number of simulations I'm running, and now SAS is giving me errors in that it is "unable to allocate sufficient memory". (For example, I need 5,000 observations per simulated dataset, and am running 3,000 simulations -- so the base dataset has 15 million observations. Then, I'm repeating that by 12 different outcomes, so there would be 180 million observations.) I have tried increasing the memory used, but that did not help. My only other thought is to create multiple datasets (each as large as possible) and repeat the BY-group processing on each dataset. Would you have any other solutions/suggestions?

Thanks!

Kat

You've done all the right things. 180 million observations is not huge by modern standards, so you might be running out of space in WORK. Your SAS administrator might be able to help you allocate more space for the WORK directory. Or you can use a permanent libref, but remember to delete those files when you are finished!

Your idea to break up the problem into smaller ones is quite reasonable. That is one of the tips I give for running huge simulations in Chapter 6 of Simulating Data with SAS (particularly section 6.4.5).
